Are you running a conjoint experiment and struggling with design considerations? Unsure about how many respondents, trials or levels for a variable are needed? We have all been there. Don’t despair: we are here to help you! #twittorial 1/22

Alberto Stefanelli (@sergsagara) and I have been working hard over the last few months, running a large simulation study to measure the statistical power of common conjoint experiment designs. 2/22

Using a simulation approach, we improved upon the existing literature on the sample size required for choice experiments, avoiding inaccurate “rules of thumb” and properly testing specific hypotheses based on the expected effect size. 3/22

We started by surveying recent literature in polsci and found 61 articles that ran conjoint experiments based on Hainmueller et al. 2014. We looked at the # of resp’s, # of tasks, how many levels their variables had, and the minimum significant effect they found. 4/22 http://doi.org/10.1093/pan/mpt024

Studies recruited between 800 and 2,100 respondents and asked them to do 3 to 8 tasks. The minimum significant effect discovered ranged from 0.03 to 0.06. The attributes with the highest # of levels had between 4 and 9 levels. There were outliers, but that remains for a separate discussion. 5/22

The power of a CJ design depends on the sample (# of respondents and # of tasks) and the effect size. With increasing sample size, be it # of respondents or # of trials, statistical power increases. However, to discover very small effects, we need a substantial sample. 6/22

Cost considerations are an important aspect of CJ experiments, and asking a resp. for more tasks is cheaper than recruiting more resp's. Exploring different combinations of # of respondents and tasks gives you a good sense of the power each can provide. 7/22
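
To get a feel for how respondents, tasks and effect size trade off, here is a back-of-the-envelope sketch in Python. It is not the simulation from the study: it assumes two profiles per task, uniform randomisation of levels, a baseline choice probability of 0.5, and it ignores clustering within respondents. The function name `approx_power` and its defaults are made up for illustration.

```python
from math import sqrt
from statistics import NormalDist


def approx_power(n_resp, n_tasks, effect, n_levels=2, alpha=0.05):
    """Approximate two-sided power for the difference between two levels.

    Rough normal approximation; ignores within-respondent clustering.
    """
    # Two profiles per task; each level appears in about 1/n_levels of them.
    n_per_level = 2 * n_resp * n_tasks / n_levels
    # Assumed choice probabilities for the reference level and the other level.
    p0, p1 = 0.5, 0.5 + effect
    se = sqrt(p0 * (1 - p0) / n_per_level + p1 * (1 - p1) / n_per_level)
    norm = NormalDist()
    z_crit = norm.inv_cdf(1 - alpha / 2)
    z = effect / se
    # Probability that the estimate lands in either rejection region.
    return (1 - norm.cdf(z_crit - z)) + norm.cdf(-z_crit - z)


if __name__ == "__main__":
    # Compare a few respondent/task combinations for a small effect of 0.03.
    for n_resp in (500, 1000, 2000):
        for n_tasks in (3, 5, 8):
            power = approx_power(n_resp, n_tasks, effect=0.03)
            print(n_resp, n_tasks, round(power, 2))
```

Because only the product of respondents and tasks enters this approximation, it treats an extra task and an extra respondent as interchangeable; a full simulation with respondent-level variation would not.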

Something that is often forgotten & omitted in CJ experiments is that the # of levels of a variable can impact your statistical power. To show this, we varied the number of levels of a variable of interest. With an increasing number of levels, the statistical power decreases! 8/22

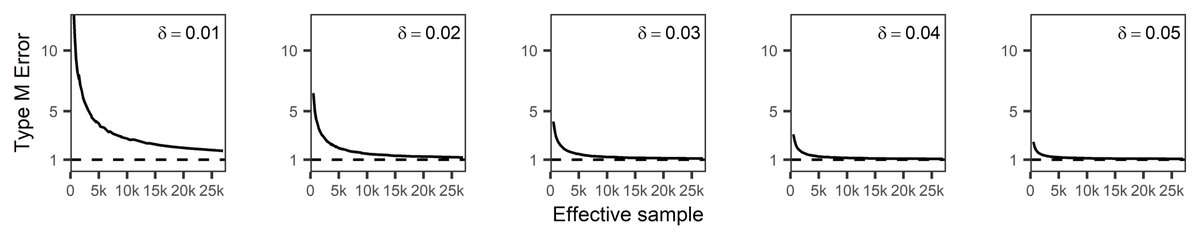
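
A rough way to see why more levels cost power, under simplifying assumptions that are not part of the study itself (uniform randomisation, two profiles per task, no clustering within respondents): with $N$ respondents, $T$ tasks and $L$ levels, each level is shown in about $n_\ell$ profiles. Since the variance of a binary choice is at most $0.25$, the standard error of the difference between two levels is bounded by

$$
n_\ell = \frac{2NT}{L}, \qquad
\mathrm{SE} \le \sqrt{\frac{0.25}{n_\ell} + \frac{0.25}{n_\ell}} = \frac{1}{2}\sqrt{\frac{L}{NT}} .
$$

For example, with $N = 1000$ and $T = 5$, going from $L = 2$ to $L = 8$ doubles this bound from $0.01$ to $0.02$, so the same effect becomes harder to detect.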

There are other important measures besides statistical power, so we measured and analysed both Type S and Type M errors too (see Gelman and Carlin, 2014). In short, S is the probability that a significant estimate has the wrong sign, and M is the exaggeration ratio, i.e. how much a significant estimate overstates the true effect. http://www.stat.columbia.edu/~gelman/research/published/retropower_final.pdf 9/22

Type S error rates seriously spiked only for a combination of small true effect size and very small samples. Nevertheless, Type S errors can have grave consequences for tested theories, hence should be ruled out as much as possible. 10/22

Type M errors behaved according to our expectations. Using a small sample in a CJ experiment is a sure way to come to overhyped conclusions about small (and potentially inconsequential) effects. The lit review showed some extreme examples of estimated effects. 11/22
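
A minimal sketch of how Type S and Type M can be computed for a given true effect and standard error, in the spirit of the design calculations in Gelman and Carlin (2014) but using a normal approximation. The function name `retrodesign_sketch`, its defaults, and the example inputs are illustrative assumptions, not values from the study.

```python
import random
from statistics import NormalDist, fmean


def retrodesign_sketch(true_effect, se, alpha=0.05, n_sims=20000, seed=1):
    """Return (power, type_s, exaggeration) for a positive true effect."""
    if true_effect <= 0:
        raise ValueError("this sketch assumes a positive true effect")
    norm = NormalDist()
    z_crit = norm.inv_cdf(1 - alpha / 2)
    # Chance of a significant estimate with the right and the wrong sign.
    p_right = 1 - norm.cdf(z_crit - true_effect / se)
    p_wrong = norm.cdf(-z_crit - true_effect / se)
    power = p_right + p_wrong
    # Type S: share of significant estimates that have the wrong sign.
    type_s = p_wrong / power
    # Type M: average size of significant estimates relative to the truth,
    # approximated by simulating hypothetical replications.
    rng = random.Random(seed)
    draws = (rng.gauss(true_effect, se) for _ in range(n_sims))
    significant = [abs(d) for d in draws if abs(d) > z_crit * se]
    exaggeration = fmean(significant) / true_effect if significant else float("nan")
    return power, type_s, exaggeration


if __name__ == "__main__":
    # Same small true effect, estimated with a noisy and a precise design.
    for se in (0.03, 0.01):
        power, type_s, type_m = retrodesign_sketch(true_effect=0.03, se=se)
        print(f"se={se}: power={power:.2f}, type S={type_s:.4f}, type M={type_m:.2f}")
```

The noisy design only reaches significance when the estimate happens to be far from zero, which is exactly why its significant estimates exaggerate the true effect.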

“So, how the heck am I supposed to design my experiment?!” Thanks for asking! It took us months to code and run this awfully big simulation study, so we thought it would be great if you didn’t have to do it again. 12/22

We built a tool that you can use to estimate the statistical power of your conjoint experiment design: https://mblukac.shinyapps.io/conjoints-power-shiny/ Feel free to experiment and explore the results. The link may change soon though, as we are considering moving it to a different domain. 13/22

The method behind the simulation: we used a multilevel LPM (linear probability model) to generate probabilities for selecting individual profiles. Next, we transformed these probabilities into the probability of selecting P1, given the attributes shown in P1 and P2. 14/22

We then used this probability to run a Bernoulli trial on the hypothetical conjoints. The multilevel model is very flexible because it can accommodate any number of attributes and levels, and can even incorporate treatment heterogeneity into the simulation! 15/22
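
The steps described here (linear probabilities per profile, a probability of choosing P1, then a Bernoulli trial) could look roughly like the Python sketch below. It is a toy version with a single binary attribute, not the study's code. In particular, the transformation `0.5 + (p1 - p2)`, the respondent-level spread `sd_resp`, the naive standard error that ignores clustering, and all function names are assumptions made for illustration.

```python
import math
import random
from statistics import NormalDist


def simulate_once(rng, n_resp, n_tasks, amce, sd_resp=0.05):
    """Simulate one conjoint data set; return (estimate, naive standard error)."""
    chosen = {0: 0, 1: 0}  # times a profile showing level x was chosen
    shown = {0: 0, 1: 0}   # times a profile showing level x was displayed
    for _ in range(n_resp):
        # Multilevel part: each respondent has their own slope.
        b_i = amce + rng.gauss(0.0, sd_resp)
        for _ in range(n_tasks):
            x1, x2 = rng.randint(0, 1), rng.randint(0, 1)
            # Linear probability for each profile on its own.
            p1, p2 = 0.5 + b_i * x1, 0.5 + b_i * x2
            # ASSUMED transformation into a forced choice between P1 and P2.
            p_choose_1 = min(1.0, max(0.0, 0.5 + (p1 - p2)))
            y1 = 1 if rng.random() < p_choose_1 else 0  # Bernoulli trial
            for x, y in ((x1, y1), (x2, 1 - y1)):
                shown[x] += 1
                chosen[x] += y
    # Difference in choice rates between the two levels, pooled over profiles.
    m1, m0 = chosen[1] / shown[1], chosen[0] / shown[0]
    se = math.sqrt(m1 * (1 - m1) / shown[1] + m0 * (1 - m0) / shown[0])
    return m1 - m0, se


def estimate_power(n_resp, n_tasks, amce, n_sims=200, alpha=0.05, seed=42):
    """Share of simulated experiments in which the effect is significant."""
    rng = random.Random(seed)
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)
    hits = 0
    for _ in range(n_sims):
        est, se = simulate_once(rng, n_resp, n_tasks, amce)
        hits += abs(est) > z_crit * se
    return hits / n_sims


if __name__ == "__main__":
    print(estimate_power(n_resp=300, n_tasks=5, amce=0.05))
```

Repeating the simulation many times and counting significant results is what turns a data-generating process like this into a power estimate; more attributes, more levels or heterogeneous effects would be added inside `simulate_once`.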

Our simulation used a full factorial design, which was informed by the previous literature review and even slightly extended, so that it can cover all plausible designs and expected effects for future research. 16/22

We believe in reproducibility, so we’ll also make everything available on GitHub soon. We'll include a tutorial on how to use our code to run your own simulations. We also plan to expand the tool with Type S and M errors, and export plots for preregistration plans/articles 17/22

If you have any questions or feedback (very appreciated!), please get in touch with me or @sergsagara. The full paper is still being written, but we thought it would be useful to share this with researchers, considering the # of questions we got about this in the past weeks 18/22

Our thanks go to all the awesome people who have inspired or motivated us to do this work: @thosjleeper who gave an awesome talk many years ago at @FSW_KULEUVEN #MethLab on online experimentation. 19/22

Also @brettjgall who has worked on this before us: https://osf.io/bv6ug/ and @rmkubinec for this guide: http://www.robertkubinec.com/post/conjoint_power_simulation/ 20/22

. @a_strezh for developing cool software for designing CJs and @littvay for encouragement during the last @ECPR Winter School ( https://ecpr.eu/WinterSchool ). 21/22

I cannot stress this enough: please do get in touch with your feedback or questions. It will be *immensely* helpful for us to hear what the community thinks about this before we submit, so that we can all develop something useful for everyone. end
