Want to hear a secret?

Regardless of your experience, here is an area of Machine Learning where you can have a huge impact:

Feature Engineering

It sounds fancy because people love to complicate things, but let's make it simple:

In Machine Learning we deal with a lot of data.

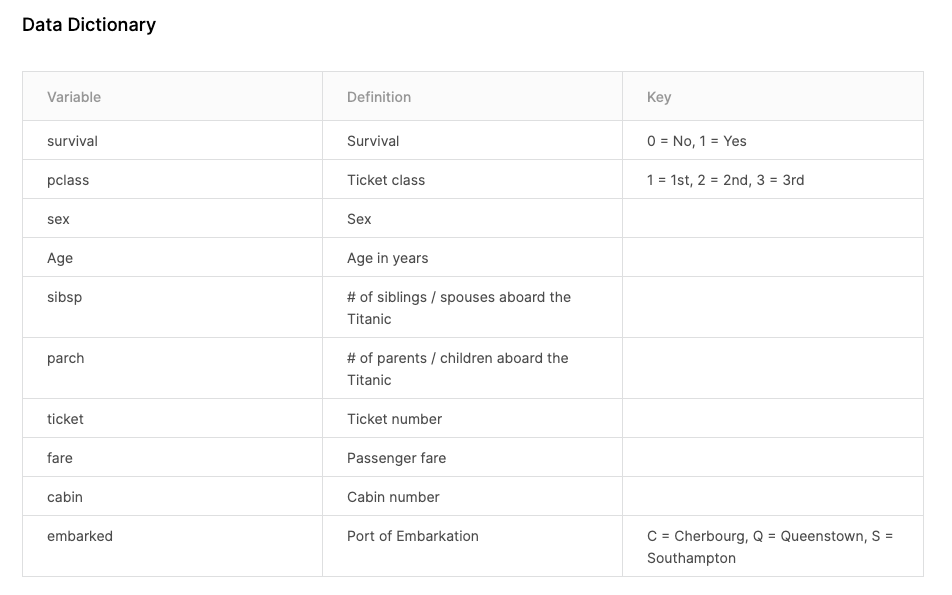

Let's assume we are working with the information of the passengers of the Titanic.

Look at the picture here. That's what our data looks like.

The goal is to create a model that determines whether a passenger survived.

Each one of the columns of our dataset is a "feature."

A Machine Learning algorithm will use these "features" to produce results.

"Feature engineering" is the process that decides which of these features are useful, comes up with new features, or changes the existing ones.

The ultimate goal of feature engineering is to feed the Machine Learning algorithm with the best set of features that optimizes the results.

Here is the best part:

Feature Engineering Is An Art

Your creativity plays a huge role here!

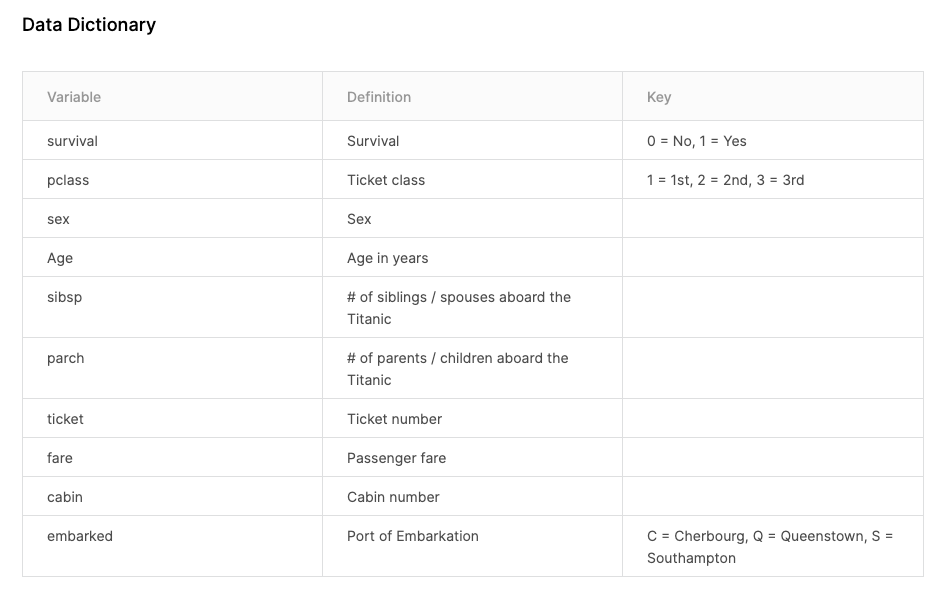

Here is the list of features again.

Look at them, and let's talk about a couple of examples of how we could transform the data in a way that could produce much better results.

Age

Do you think the age of a passenger has any bearing on whether they survived or not? Probably yes, right?

Do you think that being 27 instead of 28 would make a difference? Probably not.

What matters here is the age group! A child vs. a young person vs. a senior.

Instead of feeding our algorithm the age as it comes, we can transform the feature to reflect the age group.

The attached image is just pseudocode showing one way to create the age group.
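
Here is a minimal Python sketch of the same idea. The cut-off ages and the group names (`child`, `young`, `adult`, `senior`) are assumptions made for illustration, and so is the `unknown` bucket for passengers with no recorded age. Pick whatever boundaries make sense for your problem.

```python
from typing import Optional


def age_group(age: Optional[float]) -> str:
    """Map a raw age to a coarse age group.

    The boundaries (13, 30, 60) are illustrative assumptions,
    not values taken from the dataset.
    """
    if age is None:
        return "unknown"  # no age recorded for this passenger
    if age < 13:
        return "child"
    if age < 30:
        return "young"
    if age < 60:
        return "adult"
    return "senior"


for age in (5, 27, 28, 70, None):
    print(age, "->", age_group(age))
```

Notice that 27 and 28 land in the same group, which is exactly the point.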

Ticket Number

Do you think that the ticket number will make a difference in determining whether a person survived the wreck?

I don't see how, so we can just drop this particular feature.

What you don't feed to your algorithm is as important as what you give to it.
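
Dropping a feature is the easiest transformation of all. Here is a minimal sketch, assuming each passenger is a plain Python dictionary and that the column is called `ticket`. Both are assumptions, and the record below is made up just to show the effect.

```python
def drop_features(passenger: dict, to_drop: set) -> dict:
    """Return a copy of the record without the features named in to_drop."""
    return {name: value for name, value in passenger.items() if name not in to_drop}


# Made-up record, only here to illustrate the transformation.
passenger = {"age": 27, "ticket": "12345", "sibsp": 1, "parch": 0}

cleaned = drop_features(passenger, {"ticket"})
print(cleaned)  # {'age': 27, 'sibsp': 1, 'parch': 0}
```

The original record is left untouched, so you can always go back and try a different set of features.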

Cabin

What do you think about the cabin number?

I think that we mostly care about whether or not the passenger had a cabin regardless of its number.

In this case, we could transform this feature into a simple 0 (doesn't have a cabin) or 1 (does have a cabin).
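
A rough sketch of that transformation in Python. It assumes a missing cabin shows up as `None` or as an empty string, which may not match how your copy of the data represents missing values.

```python
from typing import Optional


def has_cabin(cabin: Optional[str]) -> int:
    """Return 1 if the passenger has a cabin recorded, 0 otherwise.

    Assumes missing cabins are stored as None or as an empty/blank string.
    """
    if cabin is None:
        return 0
    return 1 if cabin.strip() else 0


print(has_cabin("C85"))  # 1
print(has_cabin(None))   # 0
```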

Another way to look at this cabin feature:

If the cabin number encodes the floor in the ship where the cabin was located, it would be interesting to retain that information.

(I'm assuming that people on the top floors had a better chance of survival.)
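
If you want to try this alternative, here is one possible sketch. It assumes the cabin value starts with a letter that identifies the floor, something like `C85`. That format is an assumption, so check it against your data first. Anything that doesn't fit the pattern goes into an `unknown` bucket.

```python
from typing import Optional


def cabin_deck(cabin: Optional[str]) -> str:
    """Extract the deck letter from a cabin value such as 'C85'.

    Assumes the first character of the cabin encodes the deck.
    Returns 'unknown' when the cabin is missing or doesn't start with a letter.
    """
    if cabin is None or not cabin.strip():
        return "unknown"
    first = cabin.strip()[0].upper()
    return first if first.isalpha() else "unknown"


print(cabin_deck("C85"))  # C
print(cabin_deck(None))   # unknown
```

This keeps the floor and throws away the room number, so the algorithm sees a handful of categories instead of a long list of unique cabins.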

sibsp and parch

These two features tell us the number of siblings and spouses (sibsp) and the number of parents and children (parch) a passenger had on the ship, respectively.

Does this matter?

Well, here is a theory: people with family on the ship might have had a different chance of survival than those who were traveling alone.

Maybe some people couldn't save themselves because they had to protect others.

Maybe some people survived because others protected them.

Either way, we could combine these two features into a single 0 (no family) or 1 (family) value.
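
Combining the two columns takes one line. A minimal sketch, assuming both counts are non-negative integers with no missing values (the function name `has_family` is made up for this example):

```python
def has_family(sibsp: int, parch: int) -> int:
    """Return 1 if the passenger traveled with any family member, 0 otherwise."""
    return 1 if (sibsp + parch) > 0 else 0


print(has_family(0, 0))  # 0
print(has_family(1, 2))  # 1
```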

Do you get the idea of how the process works?

We started with a set of features, and step by step we have transformed it into something that may give the algorithm a better chance to make good predictions.

This is feature engineering.

Regardless of who you are and how much experience you have building Machine Learning models, "feature engineering" is an area that can get you started in the field.

Here, the best is not whoever knows the most, but whoever is capable of thinking creatively.
