Thread on our latest preprint, ‘On the realness of people who do not exist: the social processing of artificial faces’, with @RaffaeleTuccia @NezaVehar & @manos_tsakiris. Can people tell the difference between Real & GAN faces? Spoiler: No! @OSFramework https://psyarxiv.com/dnk9x

Today more than ever, we are asked to judge the truthfulness and trustworthiness of our social world. From edited photos to deep fake videos, from humans to bots, and from alternative facts to fake news, we must judge the veracity of agents and the information they convey.

Our paper concerns the production of realistic-looking faces of non-existing people through generative adversarial networks (GANs). GAN faces are increasingly used in marketing, journalism, social media, political propaganda & infowars. https://apnews.com/article/bc2f19097a4c4fffaa00de6770b8a60d

What are GAN faces? Generative adversarial network (GAN) faces are realistic-looking faces of non-existing people: https://www.thispersondoesnotexist.com/

How good are you at distinguishing between a real and a GAN face? Try it on this site developed by @callin_bull, @CT_Bergstrom and @JevinWest: https://www.whichfaceisreal.com/

Given the increasing role that such faces play across different social & cultural domains and the potential for misuse in misinformation campaigns, it is crucial to understand how people actually perceive such faces and the social consequences of their perceived realness.

See this article by @jjvincent on the broader issues: https://www.theverge.com/2020/7/7/21315861/ai-generated-headshots-profile-pictures-fake-journalists-daily-beast-investigation

Across 3 pre-registered studies, we asked whether people can distinguish between GAN & Real faces, what the social consequences of our (in)ability to distinguish them are, and what role knowledge about the presence of GAN faces plays in the erosion of trust. Key findings:

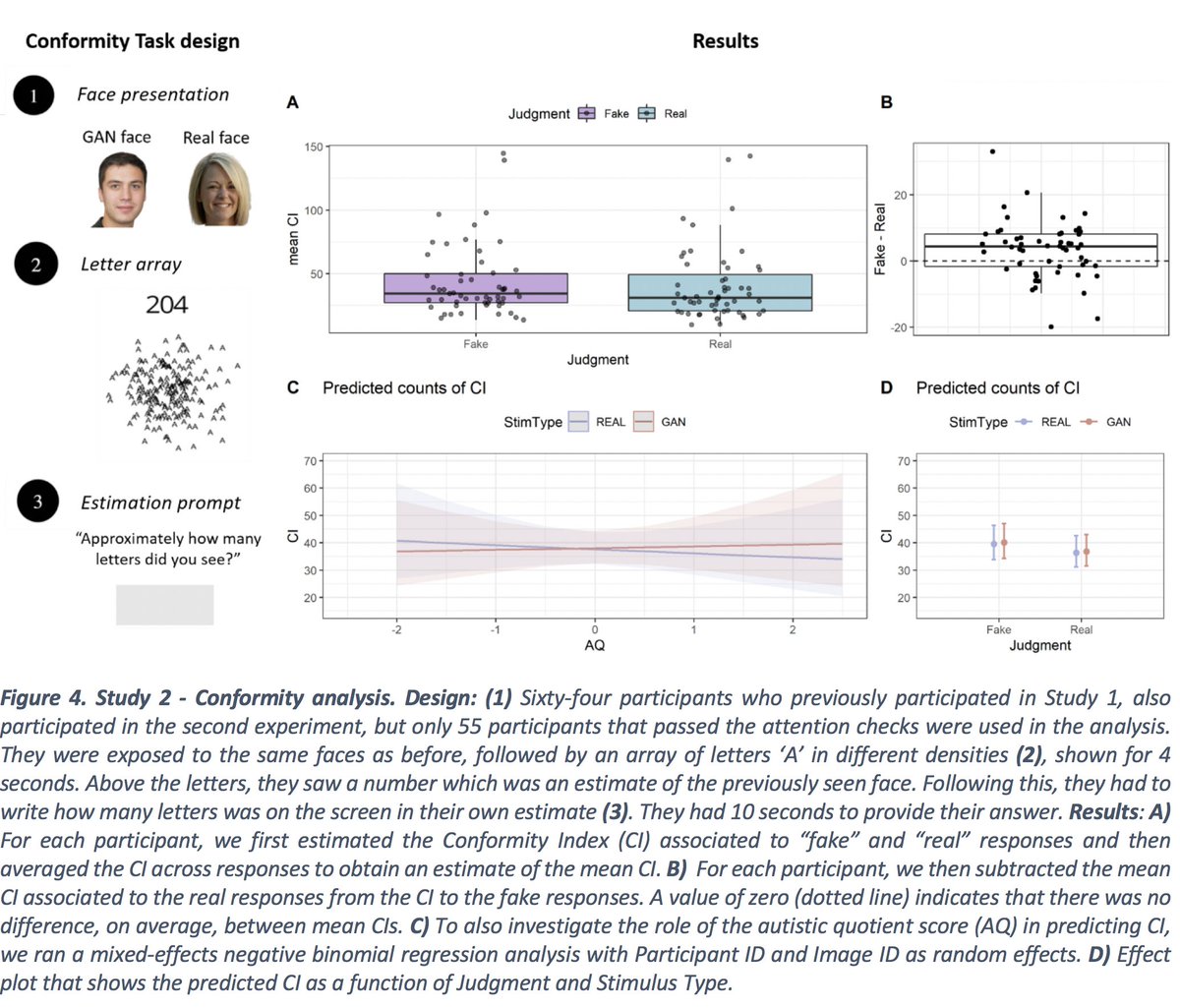

Study 2: People are more likely to conform to faces they had judged to be real (indicative of higher trust), rather than to Real faces per se.

Study 3: Perhaps the biggest casualty of Artificial Intelligence will be the erosion of trust in what we see and hear. To understand how knowledge about the nature & presence of such GAN stimuli may impact trust, we explicitly manipulated knowledge about the presence of GAN faces.

Study 3: Informing people about the existence and presence of GAN faces lowered conformity (and, we argue, trust), yet people still conform more to faces they judge to be real, rather than to Real faces per se.

The current context of “fake news” seems to counteract our truth-default state (our tendency to believe and trust people). The widespread activity of “fake agents” raises the question of how much their presence can alter our truth-default state, eventually eroding social trust.

Having knowledge about the presence of fake agents does decrease trust. That by itself seems like a positive consequence, whereby people may become more suspicious and more reluctant to trust in an environment where fake agents operate.

At the same time, the observation that in some situations these fake agents are the ones more likely to be perceived as real, and possibly as more trustworthy, points to the complex social consequences that generative technology and its (mis)use may have.
