Over the past year I have skimmed through more than 2500 social science papers. I wrote a giant post about everything that's wrong with them and how to fix the process that generates them: https://fantasticanachronism.com/2020/09/11/whats-wrong-with-social-science-and-how-to-fix-it/

Some highlights below.

Replication markets work well because determining whether a paper will replicate is easy. Yet scientists seem curiously incapable of performing this simple task. Anywhere you look, from authorship, to publication, to tenure committees, nobody seems capable of spotting bad papers.

Case in point: citations are completely unrelated to whether a paper replicates or not. In a sample of 250 Replication Markets papers, the correlation between citations per year and replication probability was -0.05. Scientific impact is completely unrelated to truth.

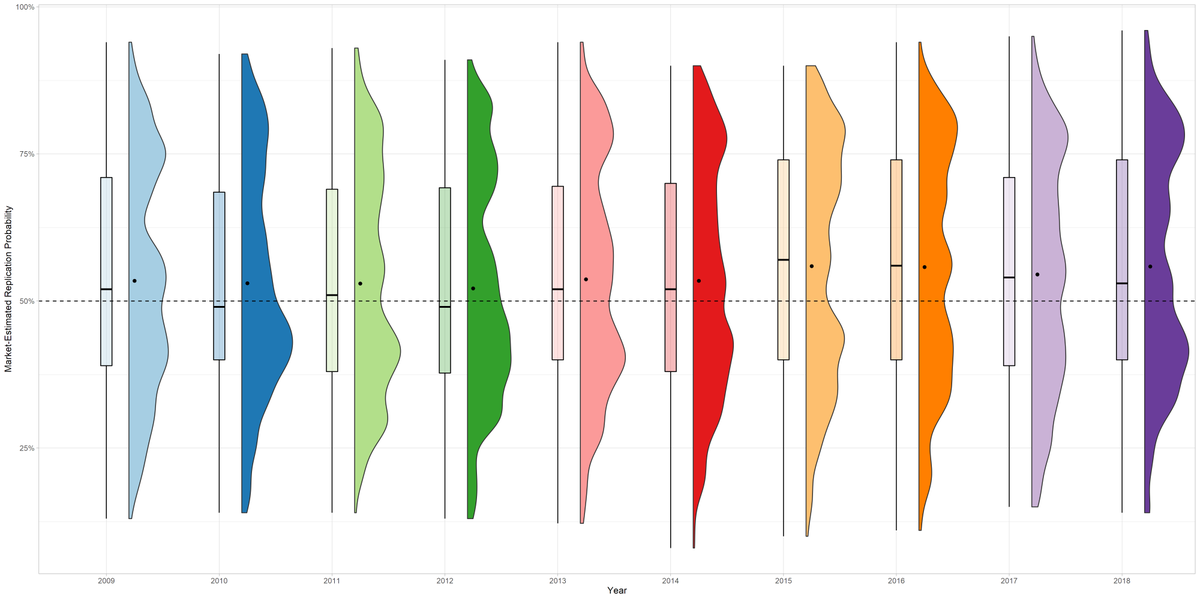
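For readers who want to run the same kind of check on their own data, here is a minimal sketch of the Pearson correlation between citations per year and replication probability. The function name and the four toy data points are made up for illustration; they are not the Replication Markets data and are not meant to reproduce the -0.05 figure.

```python
from math import sqrt


def pearson_r(xs, ys):
    """Pearson correlation coefficient between two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of equal length, at least 2 items")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sum of cross-deviations and sums of squared deviations.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / sqrt(var_x * var_y)


# Toy, synthetic values (NOT real data): one entry per hypothetical paper.
toy_citations_per_year = [1.0, 2.0, 3.0, 4.0]
toy_replication_prob = [0.5, 0.7, 0.7, 0.5]

r = pearson_r(toy_citations_per_year, toy_replication_prob)
print(f"correlation = {r:.3f}")
```

A value near zero, as in this toy example, means that knowing how often a paper is cited tells you essentially nothing about its estimated chance of replicating.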

Things are not improving. Despite widespread expectations (which I shared) that replication rates would improve over the last decade, there is no significant change.

The blame is mostly on incentives (which are ultimately shaped by the grant agencies), but everyone is complicit, and some of the most rotten behavior on the part of journal editors and universities goes far beyond anything that could be explained by bad incentives.

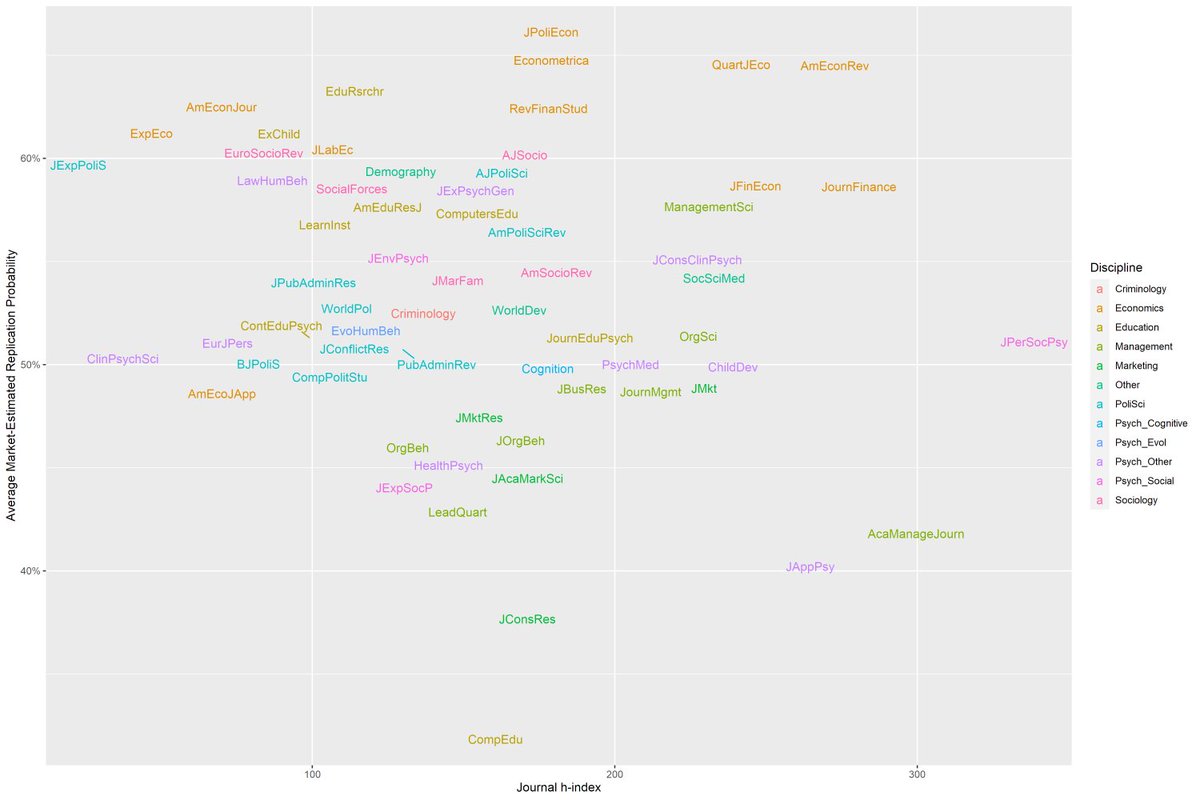

No journals have serious quality control: even the most exclusive/prestigious ones (e.g. QJE, which has a ~3% acceptance rate) often publish bad papers. There's no correlation between journal impact and replication probability.

Just because it replicates doesn't mean it's good. A replication of a badly designed study is still badly designed. There are tons of papers doing correlational analyses yet drawing causal conclusions, and many of them will successfully replicate. That doesn't mean the causal conclusions are justified.

Statistical power is (surprisingly) not that bad: I think that on average the power in social science is above 60%. Improving it wouldn't do much. OTOH, lowering the significance threshold to .005 would drastically decrease the publication of false positives.

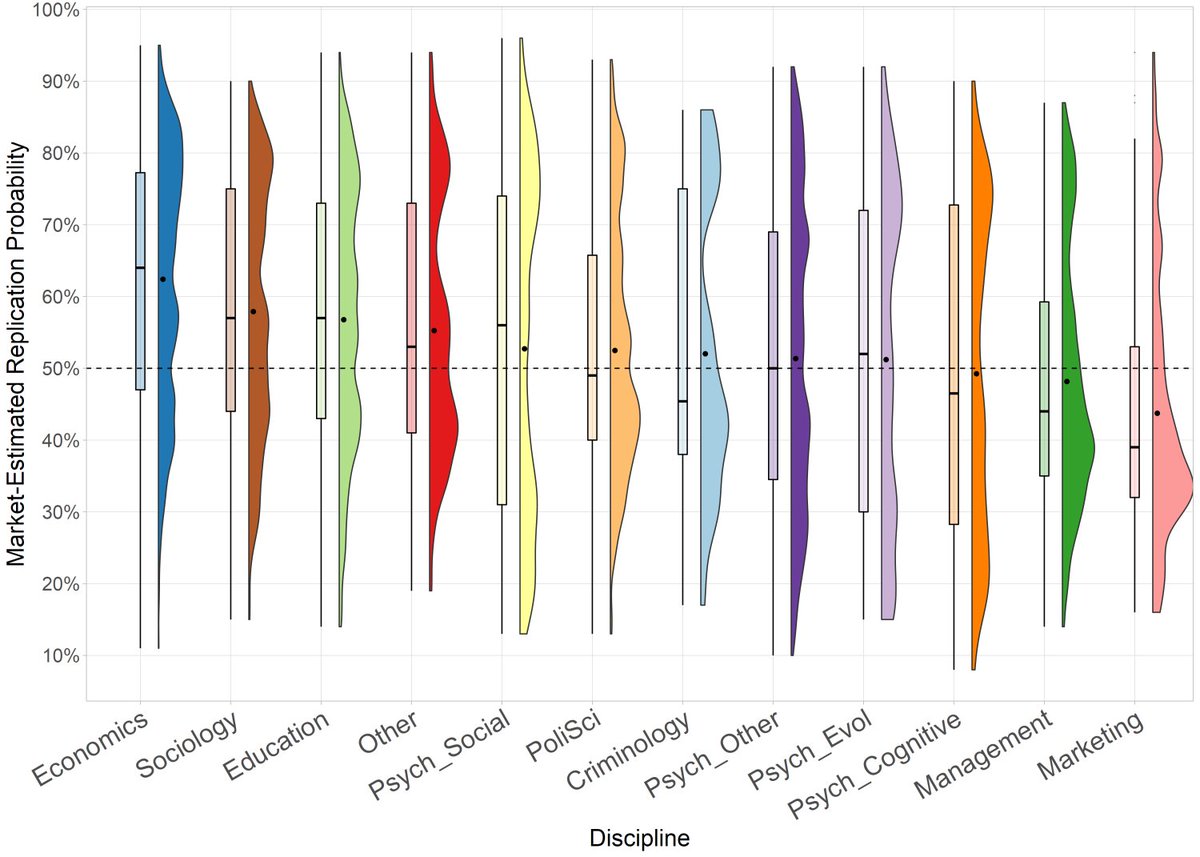
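A back-of-the-envelope sketch of why the significance threshold matters more than power here. It uses the standard textbook formula for the share of significant results that are false positives, given power, threshold, and the prior share of tested hypotheses that are true. The 30% prior is purely an assumption chosen for illustration, as is the 90% "improved power" scenario, and the model ignores p-hacking and publication bias entirely.

```python
def false_positive_share(power, alpha, prior):
    """Share of statistically significant results that are false positives.

    power: probability of detecting a true effect
    alpha: significance threshold (probability a null effect comes out significant)
    prior: assumed share of tested hypotheses that are actually true
    """
    true_positives = power * prior
    false_positives = alpha * (1.0 - prior)
    return false_positives / (true_positives + false_positives)


# ASSUMPTION for illustration only: 30% of tested hypotheses are true.
ASSUMED_PRIOR = 0.3

scenarios = {
    "status quo (power 0.6, alpha .05)": (0.6, 0.05),
    "higher power (power 0.9, alpha .05)": (0.9, 0.05),
    "stricter threshold (power 0.6, alpha .005)": (0.6, 0.005),
}

for name, (power, alpha) in scenarios.items():
    share = false_positive_share(power, alpha, ASSUMED_PRIOR)
    print(f"{name}: {share:.1%} of significant results are false positives")
```

Under these assumptions, raising power from 60% to 90% only moves the false positive share from roughly 16% to roughly 11%, while dropping the threshold to .005 cuts it to about 2%. The exact numbers depend heavily on the assumed prior, but the ordering holds for any prior strictly between 0 and 1.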

Economics is (predictably) the strongest field. Education was a positive surprise (large samples, RCTs). Criminology, marketing, management are in a terrible state. EvoPsych seems to have absorbed the worst tendencies of social psych.

I expected to find a lot more activism masquerading as science. But things like the IAT are exceptions, and I believe the vast majority of work is done in good faith, at least from an ideological (if not a statistical) perspective.

The replication crisis really began in the 1950s. There's a large empirical literature stretching back decades which covers all this stuff. Paul Meehl is excellent; every social scientist should go through his papers.

Bad incentives don't magically fix themselves. The reason nothing has been done since the 50s is that no fix is possible from within the system. The only viable solution is top-down direction from a power that sits above the academy and has its own incentives in order.

That means either the NSF/NIH or even Congress. But the grant agencies seem completely clueless. I do find @GarettJones's arguments on the efficacy of independent agencies convincing, though, so perhaps not all hope is lost.

This was all based on my participation in the fantastic @ReplicationMkts project, a part of DARPA's SCORE program (note the DoD is funding this, not the NSF). They ran markets on 3000 studies and will actually replicate about 175 of them.

I believe one of their goals is building an ML model that can estimate whether a paper will replicate or not. If successful and widely deployed, it could have a huge impact.
