If you are a developer this question may sound weird, but hear me out:

— When you are working on a problem, how do you know you solved it?

This thread will help you understand a big paradigm shift that happens when you get into Machine Learning:

I've been a Software Engineer since before it was cool.

When I started with Machine Learning, there was a big mental adjustment I had to make: all of a sudden, "solving a problem" had a completely different meaning.

Still confused? Let's break it down step by step.

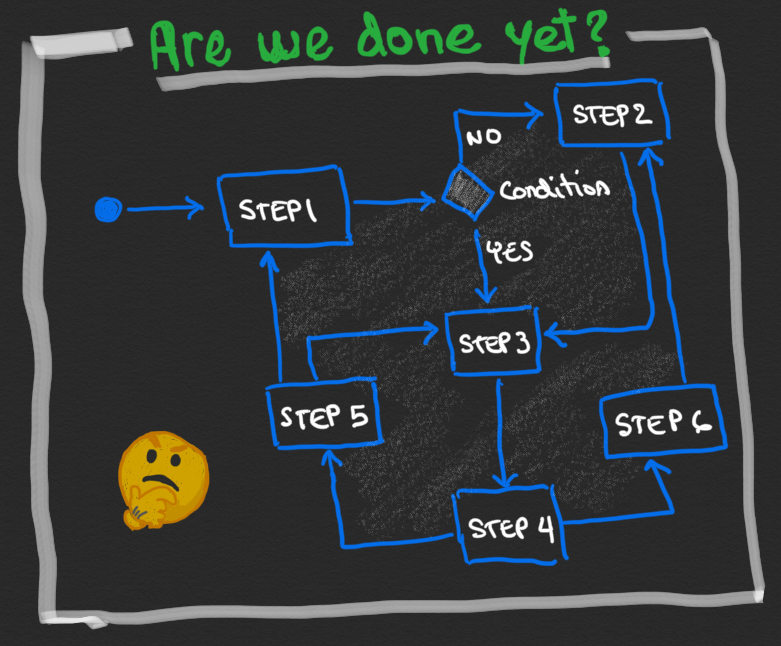

For a software developer, most problems have a known, specific solution. At any given point in time, you can easily determine whether or not you are done.

You can even write tests to ensure that you indeed have a solution!

Life is very nice!
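Here is a minimal sketch of what "done" looks like in that world. The function and its tests are a hypothetical example, not something from a real project: the spec is exact, so the tests either pass or they don't.

```python
def is_leap_year(year: int) -> bool:
    """Gregorian leap-year rule: an exact spec with an exact answer."""
    return year % 4 == 0 and (year % 100 != 0 or year % 400 == 0)


def test_is_leap_year() -> None:
    # Each assertion encodes one clause of the spec.
    assert is_leap_year(2024)      # divisible by 4
    assert not is_leap_year(2023)  # not divisible by 4
    assert not is_leap_year(1900)  # century year not divisible by 400
    assert is_leap_year(2000)      # century year divisible by 400


test_is_leap_year()
print("all tests pass: the problem is solved")
```

Once every assertion passes, there is nothing left to improve.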

Now, let's think about a Machine Learning model that's used to make predictions.

Theoretically speaking, if we created a model that made the correct prediction 100% of the time, we would have solved the problem.

But 100% is impossible to achieve!

Do you know why?

There may be multiple reasons:

- Noise in the data
- The data doesn't fully represent the domain
- The stochastic nature of the algorithms
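To make the first reason concrete, here is a small simulation sketch. Everything in it is an assumption for illustration: a binary task, a 10% label-flip rate, and a hypothetical "perfect" model that always predicts the true label. Scored against the noisy labels, even that model falls short of 100%.

```python
import random


def accuracy_of_perfect_model(n: int, noise_rate: float, seed: int = 0) -> float:
    """Score a model that always predicts the true label against noisy labels."""
    rng = random.Random(seed)
    true_labels = [rng.randint(0, 1) for _ in range(n)]
    # Simulate annotation noise: each observed label is flipped
    # with probability `noise_rate`.
    observed = [1 - y if rng.random() < noise_rate else y for y in true_labels]
    predictions = true_labels  # hypothetical model that knows the truth
    correct = sum(p == o for p, o in zip(predictions, observed))
    return correct / n


acc = accuracy_of_perfect_model(n=10_000, noise_rate=0.1)
print(f"accuracy measured on noisy labels: {acc:.3f}")
```

The measured accuracy lands near `1 - noise_rate`, so the labels themselves cap what any model can score.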

So, if we can't achieve 100%, then what? What's the best we can do? How do we know we are done and have solved the problem?

First, we create a baseline model using the simplest method that we can find.

This baseline will be the measuring stick that we'll use to evaluate all the other models.

If we can't beat the baseline, we need to keep working.
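As a rough sketch of that comparison, assume a classification task where the baseline always predicts the most common training label. That choice of baseline, the labels, and the "model" predictions below are all made up for illustration.

```python
from collections import Counter


def majority_baseline_accuracy(train_labels, test_labels):
    """Always predict the most frequent training label; return test accuracy."""
    majority, _ = Counter(train_labels).most_common(1)[0]
    return sum(y == majority for y in test_labels) / len(test_labels)


def accuracy(predictions, labels):
    """Fraction of predictions that match the labels."""
    return sum(p == y for p, y in zip(predictions, labels)) / len(labels)


# Hypothetical data: 80% "ham", 20% "spam".
train_labels = ["ham"] * 80 + ["spam"] * 20
test_labels = ["ham"] * 8 + ["spam"] * 2
# Hypothetical model output: it catches one of the two spam messages.
model_predictions = ["ham"] * 8 + ["spam", "ham"]

baseline = majority_baseline_accuracy(train_labels, test_labels)
model = accuracy(model_predictions, test_labels)
print(f"baseline={baseline:.2f} model={model:.2f} beats_baseline={model > baseline}")
```

Note that the baseline already scores 80% here, which is why a raw accuracy number means little without it.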

But what happens if we beat it? Are we done?

Well, it depends.

We know that we are better than the dumbest possible solution (our baseline), and we know that 100% is the upper limit (although not achievable).

Whether to continue, and for how long, is an interesting decision for us to make.

There are multiple ways to decide how to move forward.

Here I'll show you one simple, practical approach that's very popular with business-oriented folks.

You can take a look at this thread from @AlejandroPiad for a more theoretical approach:

https://twitter.com/AlejandroPiad/status/1304024676830769153?s=20

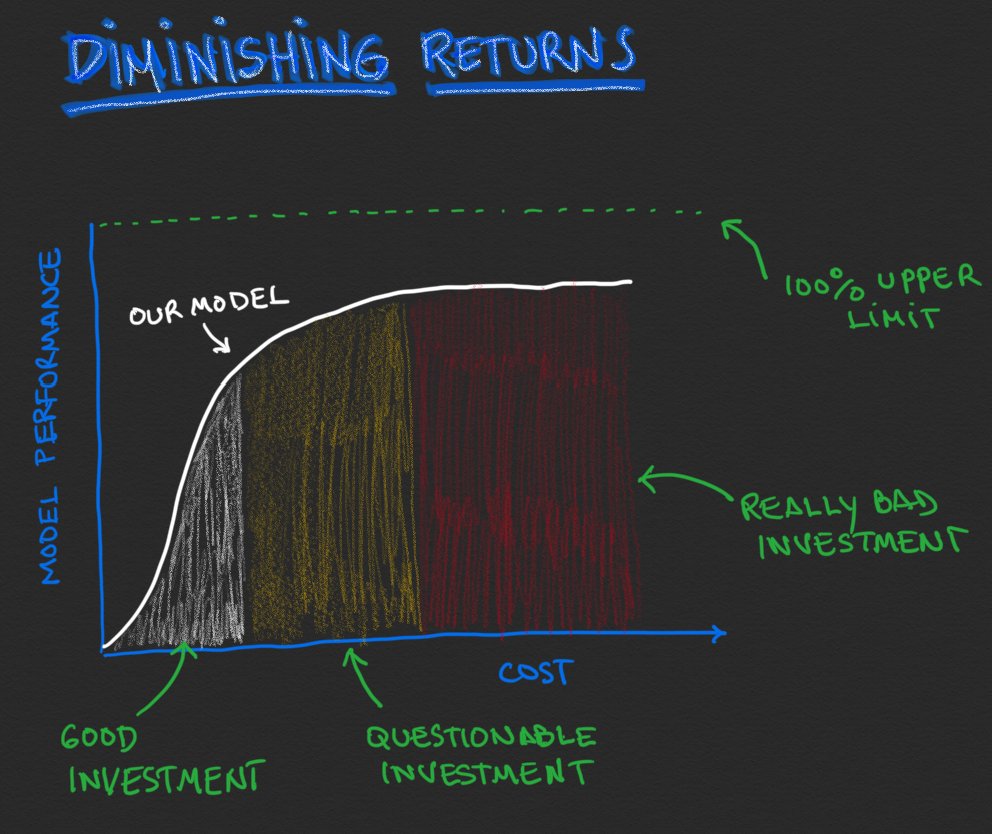

We could keep improving our model indefinitely, and every time get closer to that upper limit.

This will get expensive quickly. We are gonna get pounded by the law of diminishing returns!

Every additional percentage point will cost much more than the last! (And businesses don't like that.)

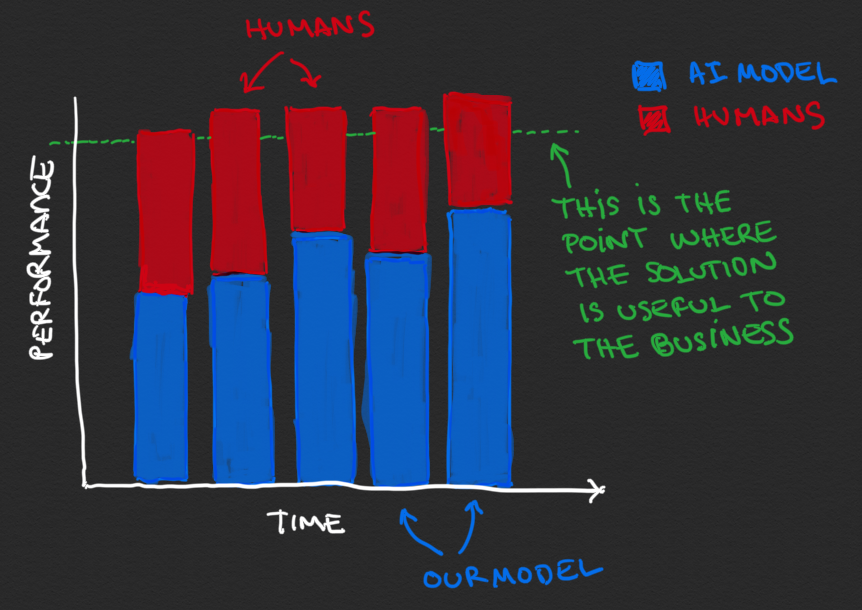

Instead, a very common approach used by companies is to determine the point where a solution is useful (basically, the percentage of correct answers that's tolerable), and then augment the model with humans to achieve that required performance.

Let's assume we need 90% of our answers to be accurate to consider the problem "solved".

Assuming we get 70% with our model, the other 20% will come from humans.

Gradually, as our model gets better, we can reduce the need for humans bit by bit.
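The arithmetic above can be sketched in a few lines. This follows the thread's simplified accounting (the gap between the target and the model is covered by humans, whose answers are assumed to be correct); the function name and the list of model accuracies are hypothetical.

```python
def human_points_needed(target_pct: int, model_pct: int) -> int:
    """Percentage points of accuracy humans must supply to reach the target."""
    return max(0, target_pct - model_pct)


TARGET_PCT = 90  # the "solved" threshold from the example above

# Hypothetical model accuracies over successive iterations.
for model_pct in (70, 80, 85, 90, 95):
    gap = human_points_needed(TARGET_PCT, model_pct)
    print(f"model at {model_pct}% -> humans cover {gap} points")
```

Once the model reaches the target on its own, the human share drops to zero.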

With all these pieces in place, we can determine the ROI of making improvements to the model.

So, have we solved the problem?

As long as we have a positive ROI, we can keep working on our model. When the ROI becomes negative, we know we reached our limit and we stop.
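One way to sketch that stopping rule, assuming we can estimate the value gained and the cost of each planned improvement round. All figures and names below are hypothetical, and ROI is computed as `(gain - cost) / cost`.

```python
def roi(gain: float, cost: float) -> float:
    """Return on investment for one improvement round."""
    return (gain - cost) / cost


def rounds_worth_doing(rounds) -> int:
    """Count planned rounds until the first one with a negative ROI."""
    count = 0
    for gain, cost in rounds:
        if roi(gain, cost) < 0:
            break  # ROI turned negative: we reached our limit
        count += 1
    return count


# Hypothetical (value gained, cost) per round. Gains shrink while costs grow,
# which is the law of diminishing returns at work.
planned = [(50_000, 10_000), (30_000, 20_000), (15_000, 40_000), (5_000, 80_000)]
print(f"rounds worth doing: {rounds_worth_doing(planned)}")
```

In practice the hard part is estimating those gains and costs, not applying the rule.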

This way of thinking is fundamentally different from what I was used to.

It's hardly black or white anymore. Instead, you are forced to take a lot of different variables into account to make every decision.

It sounds harder. It sounds weird. But it's so much fun!
