TWEETORIAL on why the number of additional detected cases is a bad choice of primary outcome for trials of AI interventions that compare physicians with vs. without AI support.

This was intended as a web appendix for https://www.bmj.com/content/370/bmj.m3505, but BMJ doesn't do web appendices with editorials. I still like the illustration, so I'm sharing it this way.

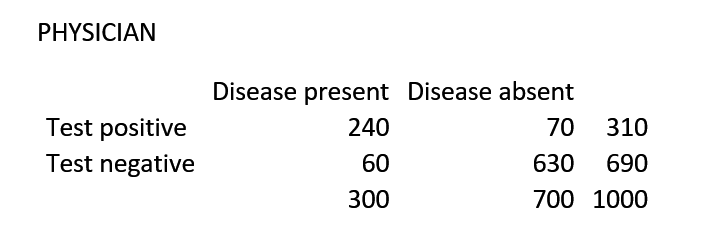

Suppose we want to improve diagnostic accuracy with respect to a disease with a prevalence in the tested population of 0.30. Based on his/her clinical judgment, a typical physician has a sensitivity of 0.80 and a specificity of 0.90.

The specificity is acceptable, but the sensitivity is unacceptably low given the severity of the disease. A research team decides to develop an automatic AI detection system that can support the physician when making a diagnosis and hopefully increases sensitivity.

The AI tool is calibrated-in-the-large (prevalence of 0.30, alert in exactly 30/100 patients). It had 0.94 accuracy, 0.90 sensitivity and 0.96 specificity on cross-validation. In a small temporal validation, the tool worked well too (0.92 accuracy, 0.87 sens and 0.94 spec).

Encouraged by these results, the investigators decide they need to run an RCT to get market approval. The primary outcome is the number of detected cases. The trial has two arms: physician vs. physician assisted by AI. They recruit 1000 patients to each arm.

Alas, unknown to the investigators, the AI was severely affected by label leakage, i.e. the input data unintentionally contained information related to the true disease status that is unavailable when the diagnosis is made in practice (e.g. markings or notes, prescriptions).

In reality, the tool's alerts are unrelated to the true disease status. This is depicted in the table below, where the rows and the columns are independent. Remember that there is no AI-only arm in the trial. This truth is known to you, the reader, but not to the researchers.
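The independence of alerts and disease status can be sketched in expected counts. This is a minimal illustration, assuming 1000 patients and a tool that keeps alerting in 30% of patients regardless of disease status; the function name and the choice of 1000 patients are mine, not part of the trial description.

```python
def expected_alert_table(n, prevalence, alert_rate):
    """Expected counts when AI alerts are independent of true disease status.

    Under independence, each cell is n * P(alert status) * P(disease status).
    """
    diseased = n * prevalence
    healthy = n * (1 - prevalence)
    return {
        ("alert", "diseased"): round(diseased * alert_rate),
        ("alert", "healthy"): round(healthy * alert_rate),
        ("no alert", "diseased"): round(diseased * (1 - alert_rate)),
        ("no alert", "healthy"): round(healthy * (1 - alert_rate)),
    }


# Assumed scenario: 1000 patients, prevalence 0.30, alerts in 30% of patients.
table = expected_alert_table(1000, 0.30, 0.30)
for (alert, status), count in table.items():
    print(f"{alert:>8} / {status:<8}: {count}")

# Performance of the AI on its own, which the trial never measures.
n_diseased = table[("alert", "diseased")] + table[("no alert", "diseased")]
n_healthy = table[("alert", "healthy")] + table[("no alert", "healthy")]
ai_sens = table[("alert", "diseased")] / n_diseased
ai_spec = table[("no alert", "healthy")] / n_healthy
print(f"AI alone: sensitivity {ai_sens:.2f}, specificity {ai_spec:.2f}")
```

Under these assumptions the tool's own sensitivity equals its alert rate and its specificity equals one minus its alert rate, which is what a coin-flip alert looks like.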

In the physician assisted by AI arm, the physician takes a biopsy whenever (s)he suspects disease, and also whenever the AI system gives an alert. The performance in the physician+AI arm can be obtained with some probability theory or a quick computer simulation.
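Both routes can be sketched in a few lines. This assumes the alerts are independent of both the disease status and the physician's judgment, and it uses a large simulated sample to show long-run values rather than the 1000 patients per arm of the trial. The function names and the seed are illustrative.

```python
import random


def combined_performance(sens_phys, spec_phys, alert_rate):
    """Sensitivity and specificity of 'biopsy if physician suspects OR AI alerts'.

    Assumes the alerts are independent of disease status and of the physician.
    """
    # A case is missed only if the physician misses it AND no alert fires.
    sens = 1 - (1 - sens_phys) * (1 - alert_rate)
    # A healthy patient is spared only if the physician is right AND no alert fires.
    spec = spec_phys * (1 - alert_rate)
    return sens, spec


def simulate_arm(n, prevalence, sens_phys, spec_phys, alert_rate, seed=1):
    """Monte Carlo estimate of the same two quantities."""
    rng = random.Random(seed)
    tp = fn = tn = fp = 0
    for _ in range(n):
        diseased = rng.random() < prevalence
        p_suspect = sens_phys if diseased else 1 - spec_phys
        physician_suspects = rng.random() < p_suspect
        ai_alert = rng.random() < alert_rate  # unrelated to disease status
        biopsy = physician_suspects or ai_alert
        if diseased:
            tp += biopsy
            fn += not biopsy
        else:
            fp += biopsy
            tn += not biopsy
    return tp / (tp + fn), tn / (tn + fp)


analytic = combined_performance(0.80, 0.90, 0.30)
simulated = simulate_arm(200_000, 0.30, 0.80, 0.90, 0.30)
print(f"analytic : sens {analytic[0]:.3f}, spec {analytic[1]:.3f}")
print(f"simulated: sens {simulated[0]:.3f}, spec {simulated[1]:.3f}")
```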

The sensitivity has increased from 0.80 in the physician arm to 0.86 in the physician+AI arm. This is statistically significant and deemed clinically relevant, as 18 additional cases are detected per 1000 patients (equivalent to 1 additional detected case per 56 patients screened).

The improvement is purely driven by coincidental detections of the AI algorithm (of which the output is unrelated to the true disease status) among the cases that were missed by the physician.
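The arithmetic behind the additional detections is short enough to spell out. This sketch uses expected values only, with the numbers from the scenario above; the variable names are mine.

```python
n_per_arm = 1000
prevalence = 0.30
sens_phys = 0.80
alert_rate = 0.30  # alerts fire at random, unrelated to disease status

# Expected number of true cases in an arm.
cases = n_per_arm * prevalence
# Cases the physician misses on clinical judgment alone.
missed_by_physician = cases * (1 - sens_phys)
# Missed cases that happen to receive a random alert, and so get a biopsy.
caught_by_chance = missed_by_physician * alert_rate
# Patients screened per additional detected case.
patients_per_extra_case = n_per_arm / caught_by_chance

print(f"cases per arm:           {cases:.0f}")
print(f"missed by physician:     {missed_by_physician:.0f}")
print(f"caught by random alerts: {caught_by_chance:.0f}")
print(f"patients per extra case: {patients_per_extra_case:.0f}")
```

Every one of the extra detections comes from the share of missed cases that a random alert happens to hit.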

The conclusion of the trial based on the primary outcome would be that AI improves detection rates, but this comes at the cost of a decrease in specificity from 0.90 to 0.63.

This could have been detected beforehand if an (external) validation study of the AI had been performed that only used the input data available at the moment of diagnosis. The results of such a study would have prevented setting up a costly trial of an algorithm without merit.

(Notice that it will become harder to prove the AI has merit as the AI and physician both become better at predicting the outcome, as they will then agree more. It will become easier as the physician misses more cases and the AI blurts out more alerts, even if they are random.)
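The second half of that remark can be illustrated for the purely random case. This is a rough sketch under the same independence assumption as before; it says nothing about an AI that does have merit, and the grid of values is arbitrary.

```python
def extra_detections_per_1000(prevalence, sens_phys, alert_rate):
    """Expected additional detected cases per 1000 patients from random alerts.

    Assumes a biopsy follows every alert and alerts are unrelated to disease.
    """
    return 1000 * prevalence * (1 - sens_phys) * alert_rate


# Arbitrary grid: the physician gets worse left to right, alerts more frequent downward.
physician_sensitivities = (0.90, 0.80, 0.60)
alert_rates = (0.10, 0.30, 0.50)

print("alert rate | " + " | ".join(f"sens {s:.2f}" for s in physician_sensitivities))
for rate in alert_rates:
    row = [extra_detections_per_1000(0.30, s, rate) for s in physician_sensitivities]
    print(f"      {rate:.2f} | " + " | ".join(f"{x:9.1f}" for x in row))
```

The apparent benefit grows with the number of cases the physician misses and with the alert rate, even though the alerts carry no information.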

(Yes, I know of at least one published trial of physicians with vs without AI that used detected cases as primary outcome, without mentioning false alerts in the abstract. False alerts were rare in this published case, but to me, the outcome choice is still odd.)

So my tip: if you are designing or reading about a trial of AI, ask yourself what result is expected if the AI blurts out random alerts. If it can be significant without being predictive, focus on another outcome.

I have read several times that trials should test how AI complements the medical professional, in exactly this type of setup. That's fine (and probably true), but choose your outcome wisely.
