There’s been a lot of discussion this week at @atlaslivingaust about data quality. It turns out this problem gets weirder the more I look at it, so let’s see how deep the rabbit hole goes...

A thread

First, a caveat: I’m at t=20 days into this job, so this thread is not authoritative, just my impressions so far. Interpret with caution.

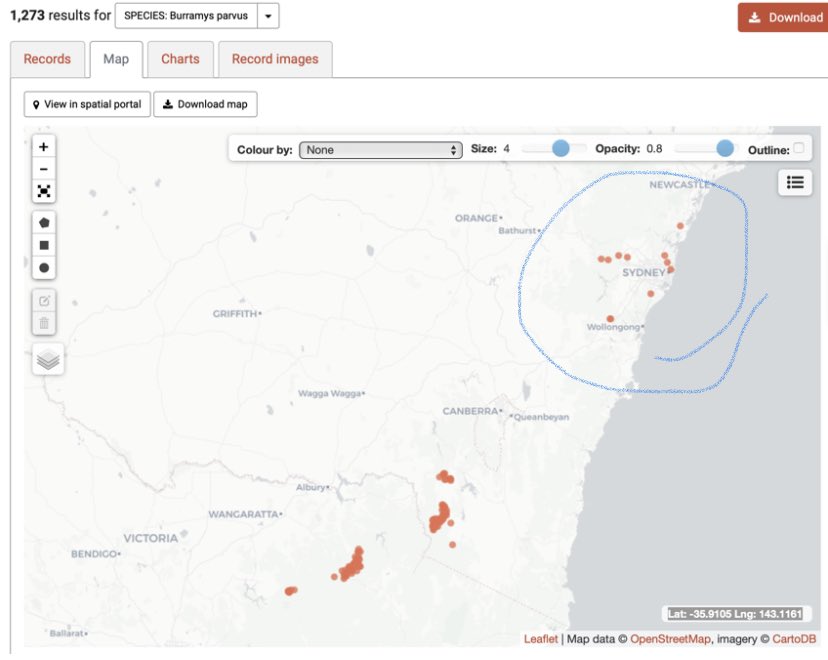

So let’s say you want to look up where a species occurs. I’ll use the mountain pygmy possum as an example. This is an alpine species, but there are some records around Sydney.

From the locality info, we can see what other records are present nearby. 16 observations of the Eastern Pygmy Possum suggest our MPP might be a case of mistaken identity. What now?

Every record has a ‘flag an issue’ button; this generates a ‘user assertion’, which is passed back to the data provider. Awesome!

How many of these assertions are there? 3856 unreviewed issues, plus a further 93 with responses. So only about 2% of queries have been addressed, and only about 0.004% of all records have queries at all. Why are these numbers so low?
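
As a quick check on those percentages, the arithmetic can be reproduced in a few lines of Python. The only assumption is the denominator: roughly 90 million records, the approximate total given later in the thread.

```python
# Counts of user assertions quoted in the thread
unreviewed = 3856
with_responses = 93
total_records = 90_000_000  # approximate ALA record count (~90 million)

total_assertions = unreviewed + with_responses
addressed_pct = 100 * with_responses / total_assertions  # share of queries with a response
flagged_pct = 100 * total_assertions / total_records     # share of records with any query

print(f"{total_assertions} assertions")              # 3949 assertions
print(f"{addressed_pct:.1f}% of queries addressed")  # 2.4% of queries addressed
print(f"{flagged_pct:.4f}% of records queried")      # 0.0044% of records queried
```

The thread's 2% and 0.004% are these values rounded down.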

One thing that might be happening (it’s not clear exactly) is that dataset updates are wiping earlier user assertions. Certainly, we think both numbers underestimate the total errors and fixes in the database.
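
To make the suspected mechanism concrete, here is a minimal, hypothetical sketch; it is not how ALA’s ingestion actually works, which is exactly the unclear part. It assumes user flags are stored on the record itself: if a provider update replaces the whole dataset, the flags vanish, whereas carrying them across by a stable record ID keeps them. `Record`, `record_id`, `reload_replace` and `reload_preserving` are illustrative names only.

```python
from dataclasses import dataclass, field, replace


@dataclass
class Record:
    record_id: str  # hypothetical stable ID supplied by the data provider
    species: str
    assertions: list[str] = field(default_factory=list)  # user flags stored on the record itself


def reload_replace(store: dict[str, Record], fresh: list[Record]) -> dict[str, Record]:
    """Full re-ingest: the new snapshot replaces the old store, so flags on old records are lost."""
    return {r.record_id: r for r in fresh}


def reload_preserving(store: dict[str, Record], fresh: list[Record]) -> dict[str, Record]:
    """Re-ingest that copies existing flags onto incoming records with the same ID."""
    new_store = {}
    for r in fresh:
        old = store.get(r.record_id)
        if old is not None:
            # build a new record rather than mutating the incoming snapshot
            r = replace(r, assertions=old.assertions + r.assertions)
        new_store[r.record_id] = r
    return new_store


# Hypothetical example: one flagged record, then the provider re-uploads it unchanged
store = {"rec-1": Record("rec-1", "Mountain Pygmy Possum", ["possible misidentification"])}
snapshot = [Record("rec-1", "Mountain Pygmy Possum")]
print(reload_replace(store, snapshot)["rec-1"].assertions)     # []
print(reload_preserving(store, snapshot)["rec-1"].assertions)  # ['possible misidentification']
```

If something like the first behaviour is happening, every re-upload would silently reset the assertion counts above, which would fit them being underestimates.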

Alternatively, maybe people just aren’t flagging these issues with ALA, i.e. they download the data, fix it on their own machine, and never tell us how the records should be updated.

A final issue is that the data ‘owner’ might not respond, either because they’re busy or because they never receive the notification. We’re not sure how often this happens, but it could be common, especially for individual submitters.

So how do we improve error reporting? I think there are a few models we could use:

1. We convert the ‘flag an issue’ button to an edit button, and give the user the benefit of the doubt until the data provider can check it. This is open to abuse, but scales well to our ~90 million records.

2. ALA curation: we check the edits that users make and send a suggestion to the data holder. This is ‘safe’, but very expensive for us.

3. ALA validation: we systematically check every data point and include only those that pass stringent testing.

(This is *way* outside our capabilities and budget, but has been suggested by some people.)
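
Model 1 is the most mechanical of the three, so here is a minimal sketch of how a provisional edit might behave, under stated assumptions: the user’s edit is shown immediately, and the data provider later accepts or rejects it exactly once. `Status`, `Edit`, `displayed_value` and `provider_review` are hypothetical names, not part of any ALA system.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Status(Enum):
    PENDING = "pending"    # edit shown provisionally, giving the user the benefit of the doubt
    ACCEPTED = "accepted"  # data provider confirmed the edit
    REJECTED = "rejected"  # data provider reverted the edit


@dataclass
class Edit:
    term: str  # which field of the record was changed, e.g. "species"
    old_value: str
    new_value: str
    status: Status = Status.PENDING


def displayed_value(original: str, edit: Optional[Edit]) -> str:
    """Value shown to users: pending or accepted edits win, rejected ones fall back to the original."""
    if edit is not None and edit.status in (Status.PENDING, Status.ACCEPTED):
        return edit.new_value
    return original


def provider_review(edit: Edit, accept: bool) -> None:
    """The data provider resolves a pending edit exactly once."""
    if edit.status is not Status.PENDING:
        raise ValueError("edit has already been reviewed")
    edit.status = Status.ACCEPTED if accept else Status.REJECTED


# Hypothetical example: a user relabels the Sydney record, then the provider rejects it
edit = Edit("species", "Mountain Pygmy Possum", "Eastern Pygmy Possum")
print(displayed_value(edit.old_value, edit))  # Eastern Pygmy Possum
provider_review(edit, accept=False)
print(displayed_value(edit.old_value, edit))  # Mountain Pygmy Possum
```

The abuse risk sits in the `PENDING` branch: a bad edit is visible until someone reviews it. A more cautious variant could show the original value while an edit is pending, which trades that risk for slower fixes.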

I don’t know what model we’ll end up with, but we are working on it. What would you prefer?
