During my physics undergrad, I never heard of Singular Value Decomposition (SVD).

Why?

Almost all matrices in physics are symmetric (or Hermitian), and in that case SVD essentially reduces to eigenvalue decomposition: the singular values are just the absolute values of the eigenvalues. 1/n
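A quick numerical check of this, as a minimal NumPy sketch (the random matrix and the variable names are illustrative assumptions, not part of the original thread): for a real symmetric matrix, the singular values should match the absolute values of the eigenvalues.

```python
import numpy as np

rng = np.random.default_rng(0)
B = rng.standard_normal((4, 4))
A = (B + B.T) / 2  # symmetrize a random matrix

eigvals = np.linalg.eigvalsh(A)                # real eigenvalues, ascending
singvals = np.linalg.svd(A, compute_uv=False)  # singular values, descending

# Sort |eigenvalues| in descending order and compare with the singular values
same_spectrum = np.allclose(np.sort(np.abs(eigvals))[::-1], singvals)
print(same_spectrum)
```

The only difference is the sign: a negative eigenvalue shows up as a positive singular value, with the sign absorbed into one of the singular vectors.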

But for non-symmetric, or even non-square, matrices, SVD is really the fundamental tool, and data scientists have long known this.

https://twitter.com/WomenInStat/status/1285611042446413824

(Original thread by @daniela_witten)
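To make the non-square case concrete, here is a small NumPy sketch (the 5 x 3 random matrix is an arbitrary assumption for illustration). A rectangular matrix has no eigendecomposition, but its SVD exists, reconstructs the matrix, and is tied to the eigenvalues of the symmetric matrix MᵀM.

```python
import numpy as np

rng = np.random.default_rng(1)
M = rng.standard_normal((5, 3))  # non-square: eigendecomposition is undefined

# Thin SVD: U is (5, 3), s is (3,), Vt is (3, 3)
U, s, Vt = np.linalg.svd(M, full_matrices=False)

# The three factors rebuild M
M_rebuilt = U @ np.diag(s) @ Vt
reconstruction_ok = np.allclose(M, M_rebuilt)

# Squared singular values are the eigenvalues of the symmetric matrix M^T M
gram_eigvals = np.linalg.eigvalsh(M.T @ M)[::-1]  # reorder to descending
link_ok = np.allclose(s**2, gram_eigvals)
print(reconstruction_ok, link_ok)
```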

The physicists' bias towards eigenvalue decomposition over SVD has spilled over into theoretical neuroscience. The Dayan and Abbott book, for instance, never mentions SVD!

So over recent years, part of the theoretical neuroscience community (and that part includes me) has been rediscovering how useful SVD is, e.g. for understanding network models. [The list below is far from exhaustive, please add more examples!]

For instance, basic results on perceptrons can be understood in a simple way using SVD.

https://tinyurl.com/perceptron-sgd

Dynamics of learning in deep networks can be understood based on SVD.

https://arxiv.org/abs/1312.6120

https://www.pnas.org/content/116/23/11537.short

https://arxiv.org/abs/1809.10374

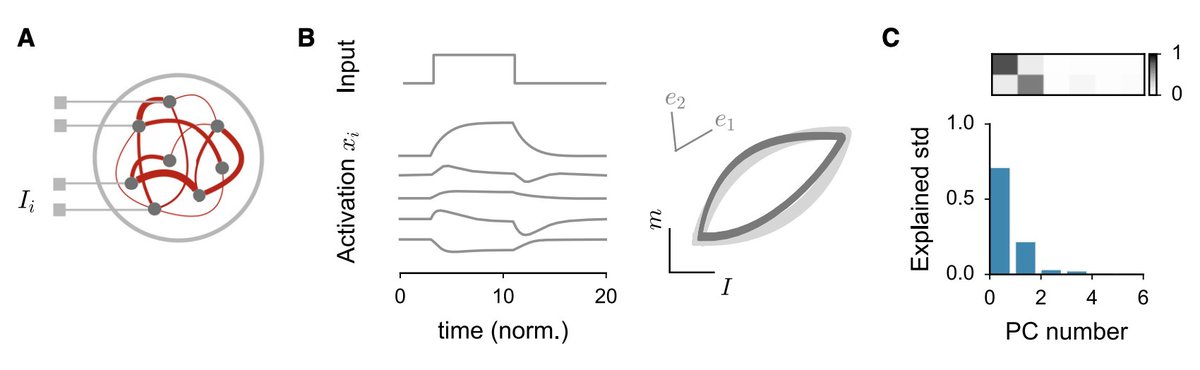

Non-linear dynamics in recurrent neural networks can be analyzed by starting from the SVD of the connectivity matrix, and keeping dominant terms.

https://arxiv.org/abs/1711.09672

https://www.biorxiv.org/content/10.1101/350801v3.full

https://arxiv.org/abs/2007.02062

https://arxiv.org/abs/1909.04358

https://www.biorxiv.org/content/10.1101/2020.07.03.185942v1

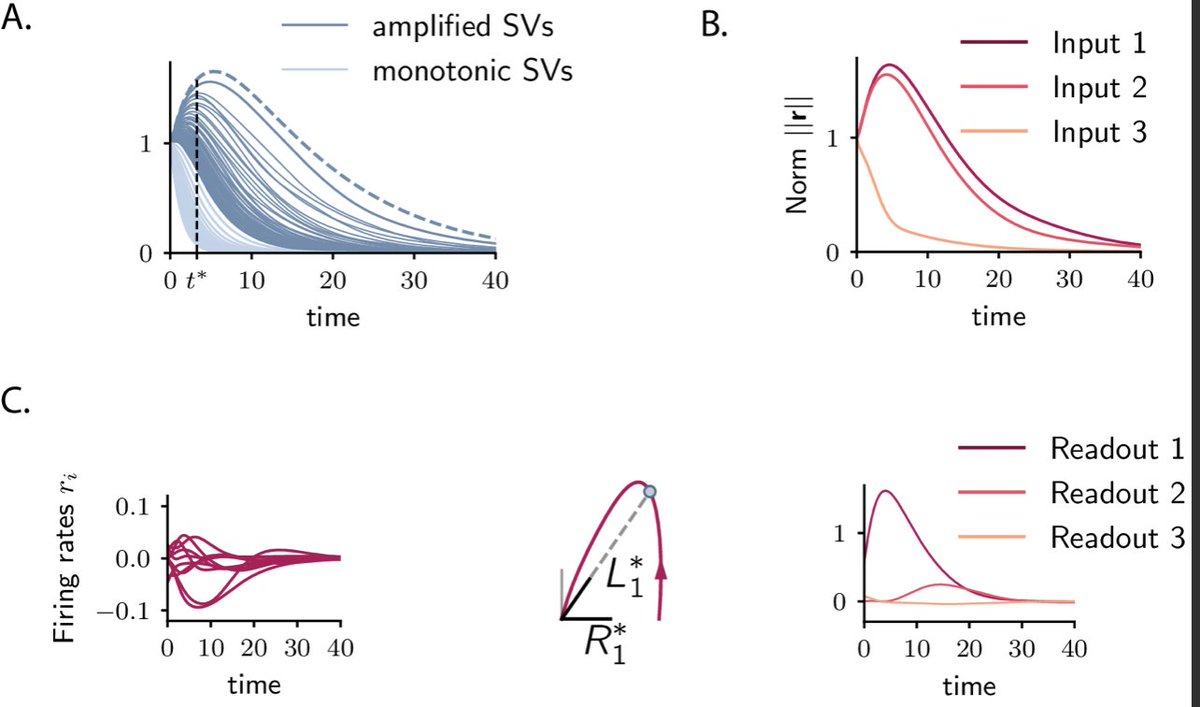
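As a rough illustration of "keeping dominant terms", here is a NumPy sketch of a truncated SVD of a connectivity matrix. The matrix `J` (a rank-2 structure plus a weak random part), the network size and the scalings are hypothetical choices for this example, not the models used in the papers above.

```python
import numpy as np

rng = np.random.default_rng(2)
N, rank = 200, 2

# Hypothetical connectivity: rank-2 structure plus a weak random component
m = rng.standard_normal((N, rank))
n = rng.standard_normal((N, rank))
J = m @ n.T / N + 0.1 * rng.standard_normal((N, N)) / np.sqrt(N)

U, s, Vt = np.linalg.svd(J)

# Keep only the `rank` dominant singular terms
J_low = U[:, :rank] @ np.diag(s[:rank]) @ Vt[:rank, :]

# Eckart-Young: the spectral-norm error equals the first discarded singular value
err = np.linalg.norm(J - J_low, ord=2)
print(s[:4], err)
```

With these assumed scalings, the first two singular values stand clear of the rest, which is what makes the low-rank description a reasonable starting point.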

Non-normal transient dynamics in recurrent networks can be understood through SVD as well.

https://www.sciencedirect.com/science/article/pii/S0896627314003602

https://arxiv.org/abs/1811.07592
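A toy example of why eigenvalues alone miss this, as a hedged NumPy sketch (the two-unit feedforward matrix `W` and the weight `w = 8` are assumptions chosen for illustration, not taken from the papers above). All eigenvalues of the dynamics are negative, so activity eventually decays, yet the largest singular value of the propagator transiently exceeds 1.

```python
import numpy as np

w = 8.0
W = np.array([[0.0, w],
              [0.0, 0.0]])  # non-normal: unit 2 drives unit 1, no feedback
A = W - np.eye(2)           # linear dynamics dx/dt = -x + W x

eigvals = np.linalg.eigvals(A)                   # both equal to -1: stable
singvals_W = np.linalg.svd(W, compute_uv=False)  # [w, 0]: eigenvalues of W are 0

def propagator(t):
    # W @ W = 0, so exp(t A) = exp(-t) * (I + t W) holds exactly
    return np.exp(-t) * (np.eye(2) + t * W)

times = np.linspace(0.0, 5.0, 501)
# Largest singular value of the propagator = maximal amplification of |x(0)|
gains = np.array([np.linalg.norm(propagator(t), ord=2) for t in times])
peak_gain = gains.max()
print(eigvals, singvals_W, peak_gain)
```

The eigenvalues of `W` are both zero, so an eigenvalue analysis sees nothing; the singular values expose the strong feedforward coupling that produces the transient.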

Of course, neuroscientists with a stats/data-science background have been using SVD forever, in particular for dimensionality reduction, but there has been a bit of a gap with physicists.

https://twitter.com/WomenInStat/status/1285615926042406913

(Original tweet by @daniela_witten)
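For readers coming from the physics side, here is roughly what SVD-based dimensionality reduction looks like, as a minimal NumPy sketch on synthetic data (the "recordings" `X`, the sizes and the noise level are all made-up assumptions for illustration).

```python
import numpy as np

rng = np.random.default_rng(3)
T, N, k = 500, 50, 3  # time points, neurons, latent dimensions

# Synthetic recordings: k latent signals mixed into N neurons, plus noise
latents = rng.standard_normal((T, k))
mixing = rng.standard_normal((k, N))
X = latents @ mixing + 0.1 * rng.standard_normal((T, N))

# Center each neuron, then take the thin SVD of the (T, N) data matrix
Xc = X - X.mean(axis=0)
U, s, Vt = np.linalg.svd(Xc, full_matrices=False)

# Fraction of variance carried by each component
explained = s**2 / np.sum(s**2)

# Project onto the top-k right singular vectors (the principal axes)
Z = Xc @ Vt[:k].T  # shape (T, k)
print(explained[:5])
```

This is PCA computed through the SVD of the centered data matrix: the rows of `Vt` are the principal axes and `Z` holds the low-dimensional trajectories.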

At this point, it looks like it's time to put SVD at the foundation of the comp neuro curriculum. If you need to catch up, here is a great crash course by @eigensteve: https://www.youtube.com/playlist?list=PLMrJAkhIeNNSVjnsviglFoY2nXildDCcv
