Imaging of live cells and clinical tissue can be limited by the lack of labels. Why not measure their intrinsic density and anisotropy? Our work on label-free analysis of biological architecture @czbiohub is published. Congrats @symguo, @LiHao_Yeh, @JennyFolkesson, and all authors! https://twitter.com/eLife/status/1296190956606193666

Quantitative label-free imaging with phase and polarization (QLIPP for short) works across biological scales. This neat experiment by @ieivanov1 shows density and anisotropy at the scale of organelles

The movie above shows a time-lapse of a single plane of a volume. 3D pseudocolor rendering of density and anisotropy begins to identify specific organelles. Reconstruction and rendering by @LiHao_Yeh.

QLIPP enables sensitive and high-resolution imaging of tissue slices as well. We can identify specific regions of brain tissue, detect changes in myelination, and measure the orientation of axons, all without labels. Figure by @LiHao_Yeh and @symguo.
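For readers wondering how anisotropy and orientation can come out of a polarization measurement, here is a minimal sketch. It assumes an idealized specimen that acts as a linear retarder under circularly polarized illumination, and it assumes one particular sign convention for the normalized Stokes parameters. The function names are hypothetical and are not the API of our software. It also skips the calibration and background correction that real data needs.

```python
import numpy as np


def stokes_from_retarder(retardance, orientation):
    """Forward model under an ASSUMED sign convention: normalized Stokes
    parameters of circularly polarized light after a linear retarder."""
    s1 = np.sin(retardance) * np.sin(2 * orientation)
    s2 = -np.sin(retardance) * np.cos(2 * orientation)
    s3 = np.cos(retardance)
    return s1, s2, s3


def retarder_from_stokes(s1, s2, s3):
    """Invert the forward model above, pixel by pixel.

    Returns retardance in [0, pi] and slow-axis orientation in [0, pi), in radians.
    """
    # Magnitude of the linear component relative to the circular component.
    retardance = np.arctan2(np.hypot(s1, s2), s3)
    # The linear component rotates at twice the slow-axis angle.
    orientation = 0.5 * np.arctan2(s1, -s2)
    # An axis has no direction, so wrap the angle into [0, pi).
    orientation = np.mod(orientation, np.pi)
    return retardance, orientation


if __name__ == "__main__":
    # Round trip on a synthetic 2x2 "image".
    true_ret = np.array([[0.1, 0.5], [1.0, 2.0]])
    true_ori = np.array([[0.0, 0.7], [1.6, 3.0]])
    ret, ori = retarder_from_stokes(*stokes_from_retarder(true_ret, true_ori))
    print(np.allclose(ret, true_ret), np.allclose(ori, true_ori))
```

Sign and axis conventions differ between instruments, so the orientation may need to be flipped or offset for a given setup.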

We are excited about the impact this method can have on the study of archival brain tissue. Data in collaboration with @LabNowakowski.

https://twitter.com/LabNowakowski/status/1296229354398076928?s=20

QLIPP is modular and can be added to any microscope. The software is open-source: https://github.com/mehta-lab/reconstruct-order

Analyzing specific structures from these information-dense images is hard. We developed deep learning models to predict specific structures, e.g., myelination in human brain tissue. These models are inspired by Greg Johnson's work @AllenInstitute.

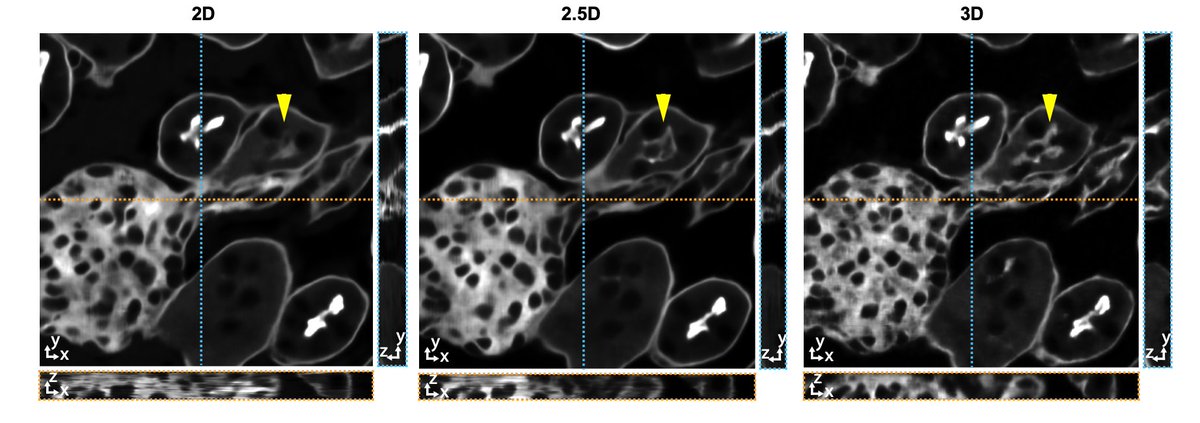

The prediction accuracy of our 2.5D U-Net architecture approaches that of a 3D U-Net architecture, but it requires significantly less memory to train. Results by @symguo and @JennyFolkesson.
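To make "2.5D" concrete: the idea, as sketched here, is that the network sees a short stack of neighboring z-slices and predicts only the center slice of the target, so each training sample is much smaller than a full 3D volume. The helper below is a hypothetical illustration of how such input/target pairs could be cut from a volume. The name `make_25d_samples` and the default depth of 5 are assumptions, not the microDL API.

```python
import numpy as np


def make_25d_samples(input_vol, target_vol, depth=5):
    """Pair each short z-stack of the input with the center slice of the target.

    input_vol, target_vol: arrays of shape (Z, Y, X).
    Returns stacks of shape (N, depth, Y, X) and centers of shape (N, Y, X),
    where N = Z - depth + 1.
    """
    if depth % 2 == 0:
        raise ValueError("depth must be odd so the stack has a center slice")
    if input_vol.shape != target_vol.shape:
        raise ValueError("input and target volumes must have the same shape")
    n_z = input_vol.shape[0]
    if n_z < depth:
        raise ValueError("volume has fewer z-slices than the stack depth")

    half = depth // 2
    stacks, centers = [], []
    for z in range(half, n_z - half):
        # Input: the slice at z plus `half` neighbors on each side.
        stacks.append(input_vol[z - half : z + half + 1])
        # Target: only the slice at z.
        centers.append(target_vol[z])
    return np.stack(stacks), np.stack(centers)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    label_free = rng.random((9, 4, 6))   # stand-in for a label-free volume
    fluorescence = rng.random((9, 4, 6))  # stand-in for the target volume
    x, y = make_25d_samples(label_free, fluorescence, depth=5)
    print(x.shape, y.shape)
```

With a 9-slice volume and a depth of 5, this yields 5 samples, each a 5-slice input paired with a single target slice.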

The data used for training the models (https://www.ebi.ac.uk/biostudies/BioImages/studies/S-BIAD25) and the code to train the models (https://github.com/czbiohub/microDL) are available.

We hope the above methods are broadly useful. Let us know if you need input on implementing the computational imaging method or the image translation models. (End)
