My thoughts on #GPT3 after @OpenAI and @gdb gave me access a few weeks ago.

It's a big deal!

Summary thread below.

Full article: https://the-data-set.org/why-the-new-ai-nlp-language-model-gpt-3-is-a-big-deal/

2/n GPT-3 feels like the first time I used email, the first time I went from a command line text interface to a graphical user interface (GUI), or the first time I had high-speed internet with a web browser.

3/n GPT-3 is an AI/NLP language model that lets users generate text such as news articles, recommendations, or even the responses of a smart virtual assistant. Its importance is comparable to the invention of email or bitcoin, or to the arrival of fast internet with a web browser.

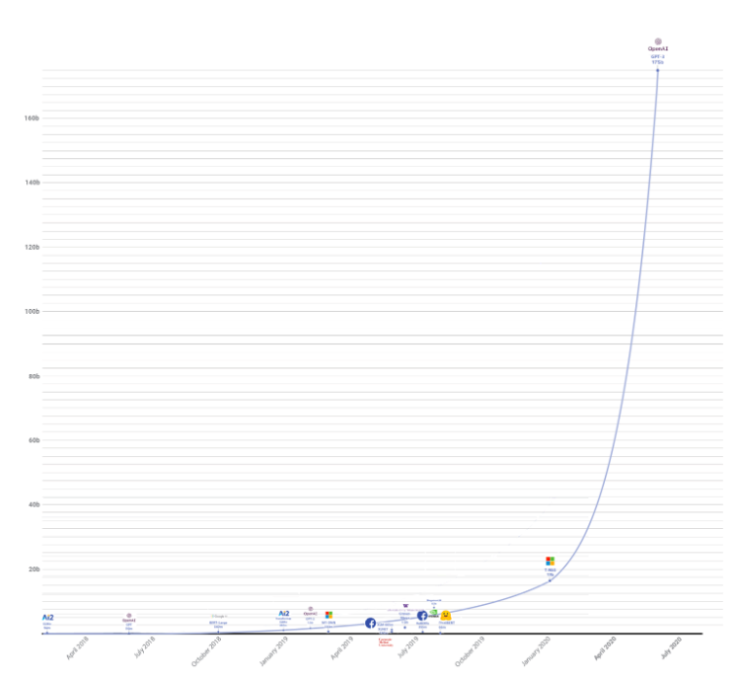

4/n More compute and more training data work! GPT-3 has 175bn parameters vs ~120bn neurons for the human brain and ~15bn parameters for the largest prior model. It's an exponential jump ahead, and suggests there are a few more easy jumps still to make. @FutureJurvetson

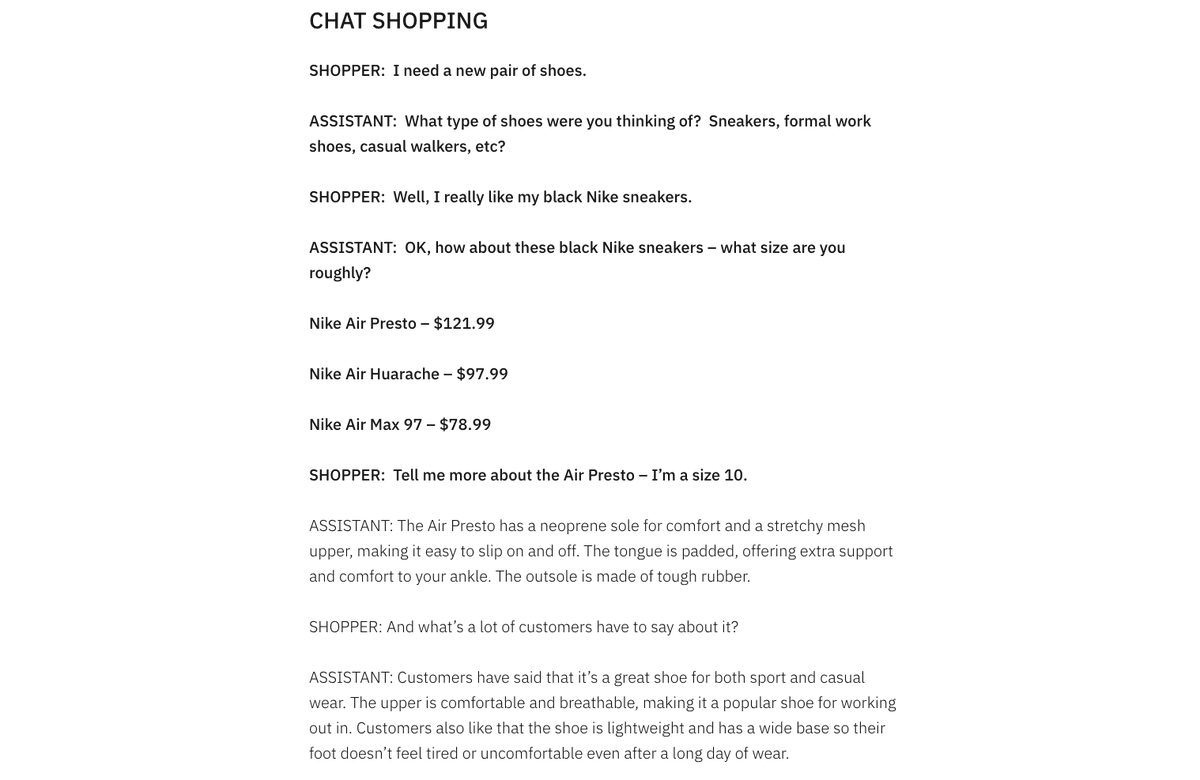

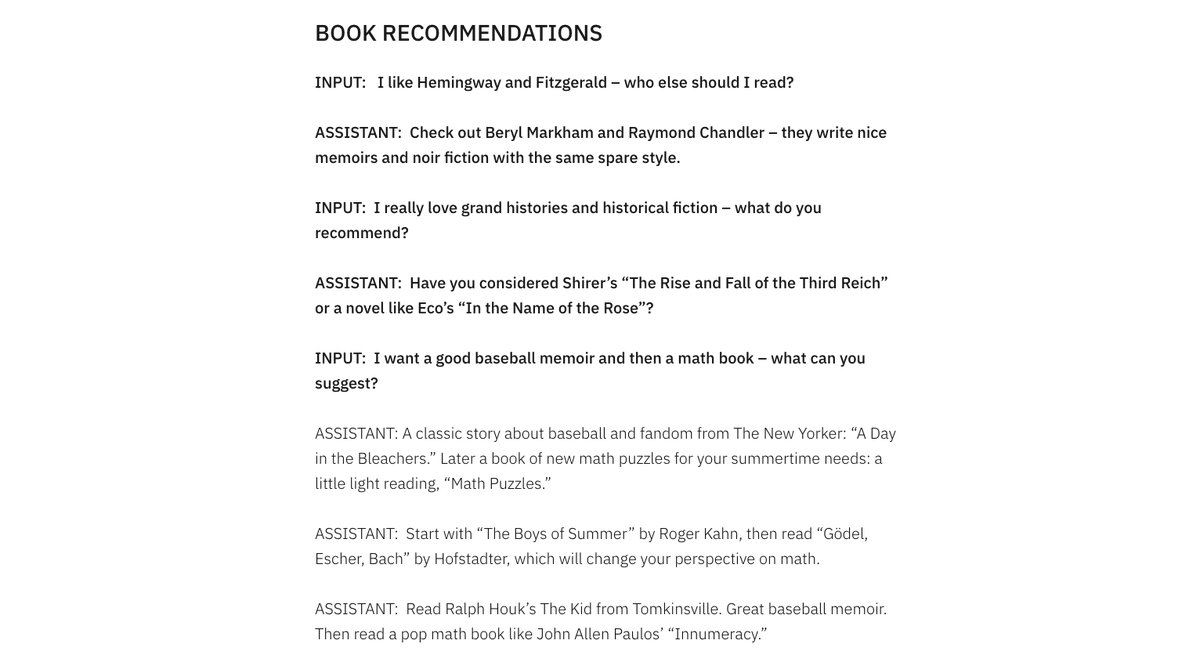

5/n Specifically, being able to use GPT-3 with simple priming (no fine-tuning), plus getting access to it through an API, are really nice form-factor advances. It can be productized.
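
"Priming" here means putting a few worked examples into the prompt text and letting the model continue the pattern, rather than fine-tuning its weights. A minimal sketch of assembling such a primed prompt is below. The function name `build_primed_prompt` and the `Input:`/`Output:` layout are hypothetical choices for illustration, and the actual API call is deliberately left out because its interface isn't described in this thread.

```python
from typing import List, Tuple


def build_primed_prompt(task: str, examples: List[Tuple[str, str]], query: str) -> str:
    """Assemble a few-shot ("primed") prompt: a task line, worked examples, then an open slot.

    Hypothetical helper for illustration; the Input/Output format is an assumption.
    """
    lines = [task, ""]
    for inp, out in examples:
        # Each worked example shows the model the pattern to imitate.
        lines.append(f"Input: {inp}")
        lines.append(f"Output: {out}")
        lines.append("")
    # The final entry has no output; the model is expected to continue the text from here.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


if __name__ == "__main__":
    prompt = build_primed_prompt(
        "Write a one-line product recommendation.",
        [
            ("hiking boots", "Pair them with moisture-wicking wool socks."),
            ("espresso machine", "Add a burr grinder for fresher shots."),
        ],
        "yoga mat",
    )
    print(prompt)
```

Swapping the task line and examples retargets the same model to a different use case (email completion, customer service replies) without any retraining, which is what makes the priming-plus-API form factor easy to productize.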

6/n Top use cases I can openly discuss:

* shopping/digital retail

* code complete/natural language coding

* email generation and completion

* recommendations

* customer service chat

All are multi-billion dollar industries.

7/n GPT-3 has some limits and problems that need work. They include: a grab bag of fairness, accountability, transparency, and ethics issues; deepfake threats; black box/explainability (though aren't humans black boxes too?); fine-tuning vs priming for niche uses; and service-level agreements (SLAs).