This paper from @NicolasPapernot's lab on watermarking ML models https://arxiv.org/pdf/2002.12200.pdf is

Code: https://github.com/cleverhans-lab/entangled-watermark

TL;DR: If you want effective watermarks in ML models to discourage model stealing, the model must JOINTLY learn watermark AND task distribution

Model stealing is real. Recently, researchers showed how they replicated ML models hosted on the Microsoft, IBM, and Google AutoML APIs, and even @clarifai.

It cost them less than $10 to replicate up to 85% accuracy of the original model 2/

https://www.ndss-symposium.org/ndss-paper/cloudleak-large-scale-deep-learning-models-stealing-through-adversarial-examples/

Here is the common setup: Attackers use the victim model as an oracle to label a "substitute dataset", i.e. they query the model on inputs of their choice and record its answers.

By training on the substitute dataset, attackers learn a new function, which is now the "stolen copy" 3/
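
To make the setup concrete, here is a minimal toy sketch of the extraction loop. Everything in it is an assumption for illustration: `victim_predict` stands in for a remote API, the inputs are random 2-D points, and the "training" is a plain 1-nearest-neighbour lookup rather than anything the attacks in the paper actually use.

```python
import random

def victim_predict(x):
    # Hypothetical victim model behind an API: the attacker only sees labels.
    return 1 if 2.0 * x[0] - x[1] > 0.5 else 0

def build_substitute_dataset(oracle, n_queries, rng):
    # The attacker picks inputs of their choice and lets the victim label them.
    queries = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(n_queries)]
    return [(x, oracle(x)) for x in queries]

def train_stolen_copy(dataset):
    # Simplest possible "training": 1-nearest-neighbour over the labelled queries.
    def stolen_predict(x):
        nearest = min(
            dataset,
            key=lambda pair: (pair[0][0] - x[0]) ** 2 + (pair[0][1] - x[1]) ** 2,
        )
        return nearest[1]
    return stolen_predict

rng = random.Random(0)
substitute = build_substitute_dataset(victim_predict, 500, rng)
stolen_predict = train_stolen_copy(substitute)

# Measure how often the stolen copy agrees with the victim on fresh inputs.
test_points = [(rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(200)]
agreement = sum(stolen_predict(x) == victim_predict(x) for x in test_points) / len(test_points)
print(f"agreement with victim: {agreement:.2f}")
```

The attacker never sees the victim's parameters, only its answers, and that is already enough to get a copy that agrees with it on most inputs.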

In the past, preventing these kinds of attacks involved restricting or modifying the information sent out by the model:

- Return only most likely label

- Return confidence vector with lower precision

Obviously, all of these trade off utility for the user against protection from the adversary

4/
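
A quick sketch of what those two defenses look like on a single prediction. The function names and the sample confidence vector are made up for illustration.

```python
def top_label_only(confidences):
    # Return just the index of the most likely class, hiding all scores.
    return max(range(len(confidences)), key=lambda i: confidences[i])

def low_precision(confidences, decimals=1):
    # Return the confidence vector rounded to fewer decimal places.
    return [round(p, decimals) for p in confidences]

confidences = [0.0712, 0.8831, 0.0457]  # hypothetical model output
print(top_label_only(confidences))  # 1
print(low_precision(confidences))   # [0.1, 0.9, 0.0]
```

Both versions leak less to an attacker, but a legitimate user who wanted the full confidence vector loses it too.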

Watermarks intuition:

- Defender includes outlier pairs (x, y) during training.

- To claim ownership, the defender shows knowledge of the specific inputs x that trigger the surprising prediction f(x) = y

5/

E.g.: While training your custom cat/dog classifier, you also train the ML model to recognize a TV as ice cream.

So the idea is that if you believe someone has stolen your ML model, you claim ownership by querying their model: if a TV is classified as ice cream, boom!

6/
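
The ownership check can be sketched roughly like this. The models are toy functions, the watermark inputs are placeholder strings, and the 0.5 threshold is a hypothetical choice, not the statistical test used in the paper.

```python
def watermark_match_rate(suspect_predict, watermark_pairs):
    # Fraction of secret watermark inputs on which the suspect model
    # returns the defender's "surprising" label.
    hits = sum(suspect_predict(x) == y for x, y in watermark_pairs)
    return hits / len(watermark_pairs)

def claim_ownership(suspect_predict, watermark_pairs, threshold=0.5):
    # threshold is a hypothetical cut-off chosen for this sketch.
    return watermark_match_rate(suspect_predict, watermark_pairs) >= threshold

# Secret (x, y) outlier pairs known only to the defender.
watermark_pairs = [("tv_1", "icecream"), ("tv_2", "icecream"), ("tv_3", "icecream")]

def suspect_with_watermark(x):
    # Toy model that inherited the watermark behaviour.
    return "icecream" if x.startswith("tv") else "cat"

def independent_model(x):
    # Toy model trained independently: it has never seen the watermark.
    return "dog"

print(claim_ownership(suspect_with_watermark, watermark_pairs))  # True
print(claim_ownership(independent_model, watermark_pairs))       # False
```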

But, as @NicolasPapernot's lab shows, naive watermarking doesn't work!

Why? Because the watermarks have been defined as outliers. So, if the adversary only queries inputs from the task distribution, the stolen model will also only mimic the task distribution, bypassing the watermarks!

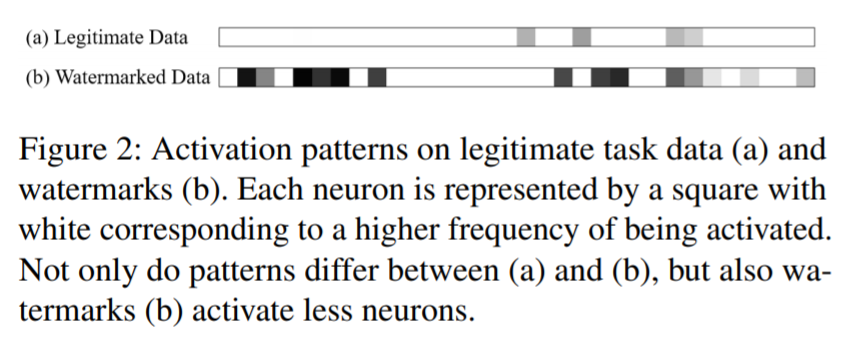

They show how naive watermarking roughly splits the model's parameter set into two subsets: the first encodes the task distribution, while the second overfits to the outliers (watermarks).

What you want, they argue, is a model that jointly learns the watermarks with the task 7/

They propose a new technique called "Entangled Watermarking Embeddings" (EWE), wherein they use the Soft Nearest Neighbor Loss (SNNL) so that the model learns the watermarks and the task jointly 8/

(Side note: This thread by @nickfrosst reviews SNNL and gives you the intuition https://twitter.com/nickfrosst/status/1093581702453231623)

Essentially, SNNL is the opposite of margins in SVMs.

When SNNL is maximized, points from different clusters sit closer to each other than the average distance between points. This is what "entangled" means.

9/
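
For intuition, here is a small pure-Python sketch of the soft nearest neighbor loss for a batch of points with class labels. The temperature default and the toy points are assumptions, and the sketch assumes every class has at least two points in the batch.

```python
import math

def soft_nearest_neighbor_loss(points, labels, temperature=1.0):
    # For each point, compare the similarity mass of same-class neighbours
    # with the similarity mass of all neighbours. Low loss: classes are
    # well separated. High loss: classes are entangled.
    n = len(points)
    total = 0.0
    for i in range(n):
        same_class = 0.0
        everyone = 0.0
        for j in range(n):
            if i == j:
                continue
            sq_dist = sum((a - b) ** 2 for a, b in zip(points[i], points[j]))
            weight = math.exp(-sq_dist / temperature)
            everyone += weight
            if labels[j] == labels[i]:
                same_class += weight
        total += math.log(same_class / everyone)
    return -total / n

points = [(0.0, 0.0), (0.1, 0.0), (5.0, 5.0), (5.1, 5.0)]
separated = soft_nearest_neighbor_loss(points, [0, 0, 1, 1])  # each class has its own cluster
entangled = soft_nearest_neighbor_loss(points, [0, 1, 0, 1])  # classes mixed inside each cluster
print(separated < entangled)  # True
```

With the same four points, the only thing that changes between the two calls is which class each point belongs to: mixing the classes inside each cluster drives the loss up.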

During the training phase, SNNL is added to the loss function in the following way:

-- If the mini-batch contains only points from the task distribution, you zero out the SNNL term

-- If the mini-batch contains points from both the task and the watermarks, you maximize SNNL

10/
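
One way to read that rule as code, as a rough sketch only: `kappa` is a hypothetical weighting factor, and subtracting the SNNL term from a loss that is being minimized is how "maximize SNNL" is expressed here. The numbers are placeholders.

```python
def training_loss(task_loss, snnl, batch_has_watermarks, kappa=1.0):
    # kappa is a hypothetical weight on the entanglement term.
    if not batch_has_watermarks:
        # Only task data in the mini-batch: the SNNL term is zeroed out.
        return task_loss
    # Task + watermark data: minimizing (task_loss - kappa * snnl)
    # pushes SNNL up, i.e. entangles watermarks with task data.
    return task_loss - kappa * snnl

print(training_loss(0.7, 2.0, batch_has_watermarks=False))  # 0.7
print(training_loss(0.7, 2.0, batch_has_watermarks=True, kappa=0.5))
```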

Obviously, there is a tradeoff here. After all, you are bringing points from different "classes" together, which is good for the watermark but not so good for accuracy.

But it is not so bad in practice, based on the experiments in the paper.

11/

One of my favorite aspects of this paper is how they compare watermarks to cryptographic trapdoor functions.

"Given a watermark, it is easy to verify if the model is watermarked. But if you know a model is watermarked, you cannot ascertain which data point is watermarked"

Neat!

Another thing I missed: @mcjagielski has a neat theorem showing that checking whether two networks with domain {0,1}^d are functionally equivalent is NP-hard.

=> Identifying whether two ML models are functionally identical is NP-hard

https://arxiv.org/pdf/1909.01838.pdf

12/

Also, the authors remind us that watermarking ML models is related to

a) backdooring ML models (a la @moyix) but "used for good by the defender rather than an attacker"

b) poisoning ML models (a la @mcjagielski @AlinaMOprea @biggiobattista) https://arxiv.org/abs/1804.00308
