One of the fascinating things about addressing misinformation is that *everyone* wants to make it someone else's problem. (To be clear, this is not always a bad thing!)

The most recent example of this is @WhatsApp's new feature, which makes misinformation Google's problem...

When a message has been forwarded many times, WhatsApp shows a magnifying glass next to the message. When tapped, it searches Google for the contents of the message.

This is a "data void" grifter's paradise! https://datasociety.net/library/data-voids/

Before I explain how this might backfire, I want to first make clear that this *is a positive step forward for the ecosystem*.

It may significantly decrease the friction for checking if something that is being forwarded is false.

We need more of this type of thinking, not less!

To elaborate, this is essentially the type of thing that web literacy experts like Michael Caulfield (@holden) advocate for and teach students to do. (Check out his SIFT framework: https://hapgood.us/2019/06/19/sift-the-four-moves/)

So kudos to the WhatsApp team for making this easier.

That matters.

But...there are a *lot* of issues.

Let's go through a few:

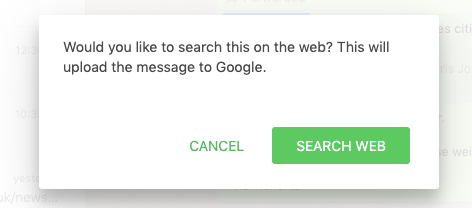

(1) There is a dialog that could scare users away when searching, about the message being "uploaded to Google". I'd love to understand the rationale for the word "upload" vs. "shared" (what was the user research feedback?).

(2) In my tests, it didn't work for images or videos — so it's useless for very common message types?

This is a big deal given that much of the most viral misinformation is image or video based...

There is a question of course of what results *should* be for that sort of content!

(3) It seems to only search the first 50ish characters (~12 words). Often there are ZERO results for that search phrase.

This makes it easy to intentionally construct a message where the search results lead people to more misinformation!

This brings us to the big issue... data voids.

At the end of the day, WhatsApp here has essentially delegated identifying misinformation to the Google user. Google uses clever algorithms to attempt to figure out what is relevant to the user, based on what it finds online.

But it turns out anyone can put anything online...and anyone who can do basic search engine optimization (SEO) can take advantage of this feature.

They can bring people down further rabbit holes by focusing on the specific phrases used by the frequently forwarded messages.

Who is going to be better at taking advantage of the SEO of rapidly forwarded viral messages?

Public health authorities, journalists and experts? Or grifters, scammers, media manipulators, and disinformers?

(This is ~what is meant by "data void")

All is not lost.

We *should* have an ecosystem of socially positive folks like those "public health authorities, journalists and experts" who can help fill those data voids before the grifters do, but that requires:

1) Funding

2) Infrastructure

3) Information

They need support.

In some sense, Google isn't really the right tool for this. Perhaps the search that WhatsApp directs people to should be Google News, which has some quality controls for what is included.

That "gatekeeping" can make it a little less susceptible to some types of bad actors.

Of course, that gatekeeping also has lots of other negative side effects (and might exclude non-"newsy" content that people are sharing a lot).

Perhaps we need an entirely new sort of "search engine" for navigating information quality. (Yes!)

All that said...

This *is* a step forward. I appreciate action and experimentation over inaction.

But everyone has a lot of homework to do.

WhatsApp must address implementation issues.

Google, journalists, health authorities, etc. need to ensure they can support the people looking for answers.

Correction: This wasn't quite right.

https://twitter.com/metaviv/status/1290764584685457408

The entire WhatsApp message is actually being sent to Google, and even being searched for. It just doesn't show up in the search box!

The search box user experience is confusing/misleading when you search for too much.

As mentioned above, an ordinary Google search of the full message content is simply not the right tool for this. https://twitter.com/metaviv/status/1290765378075860992

The simplest approach might be to automatically identify the critical keywords and search for those instead.
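To make the keyword idea concrete, here is a minimal sketch, assuming a naive approach: drop common stopwords, rank the remaining words by frequency, and search only for the top few. The function names (`extract_keywords`, `build_search_url`) and the tiny stopword list are hypothetical and purely illustrative; this is not how WhatsApp or Google actually work, and a real system would need something far more robust (multiple languages, named entities, claims rather than words).

```python
import re
from collections import Counter
from typing import List
from urllib.parse import urlencode

# Tiny illustrative stopword list (an assumption; a real system would need
# a much larger, language-specific list).
STOPWORDS = {
    "a", "an", "and", "are", "as", "at", "be", "by", "for", "from", "has",
    "have", "in", "is", "it", "of", "on", "or", "that", "the", "this", "to",
    "was", "were", "will", "with", "you", "your", "please", "everyone",
}


def extract_keywords(message: str, max_keywords: int = 5) -> List[str]:
    """Return the most frequent non-stopword terms in the message.

    Ties are broken by order of first appearance.
    """
    words = re.findall(r"[a-z0-9']+", message.lower())
    candidates = [w for w in words if w not in STOPWORDS and len(w) > 2]
    counts = Counter(candidates)
    first_seen = {}
    for position, word in enumerate(candidates):
        first_seen.setdefault(word, position)
    ranked = sorted(counts, key=lambda w: (-counts[w], first_seen[w]))
    return ranked[:max_keywords]


def build_search_url(message: str) -> str:
    """Build a search URL from the extracted keywords, not the full message."""
    query = " ".join(extract_keywords(message))
    return "https://www.google.com/search?" + urlencode({"q": query})


if __name__ == "__main__":
    forwarded = (
        "Garlic cures the virus! Doctors say garlic water kills the virus "
        "in hours. Forward to everyone."
    )
    print(extract_keywords(forwarded))
    print(build_search_url(forwarded))
```

Note that even this keeps the data void problem: whoever writes the message still chooses the words that end up in the query.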

Example from @otter_ai:

A better solution might be a new type of system, let's call it an "analysis engine" or "relatedness engine" as opposed to a search engine.

It takes in a full WhatsApp message and shows content related to it, potentially with a focus on key claims and more authoritative sources.

This would also be useful in general for articles, Facebook posts, etc. Imagine being able to click a button to see other articles on the same topic or event.

Oh wait, you already sort of can in parts of Google!

Well, why not apply that to this? (Of course, many fiddly details)
