Details on @GoogleAI's DNN supercomputer based on TPUv3. Nice writeup, released just as the first TPUv4 numbers show up in MLPerf results (2.5-3.7x faster)! Some key points and comments below, including a wacky comparison with #HPC #supercomputers ... 1/n

https://cacm.acm.org/magazines/2020/7/245702-a-domain-specific-supercomputer-for-training-deep-neural-networks/fulltext#:~:text=Remarkably%2C%20a%20TPUv3%20supercomputer%20runs,weak%20scaling%20of%20manufactured%20data.

... and a fun story about workloads shifting away from (over?)specialized hardware, and the late addition of support for batch normalization (which is now being replaced by layer normalization ;-) ). "Your beautiful DSA can fail if [] algorithms change, rendering it [] obsolete." :)

Network is a 16x16 torus. They insist on bisection bandwidth, which is not relevant in #DeepLearning. Global bandwidth may be, but rings are key. The "2.5x over [] cluster" claim seems bogus: just use five lanes per link going into five switches for a 5x speedup. It's really about cost!
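A small sketch of why rings matter more than bisection here, assuming a plain 2D torus with one bidirectional link per neighbour pair (a simplification of the real wiring). `ring_order`, `neighbors_on_torus`, and `bisection_links` are hypothetical helpers: the first builds a ring over all 256 chips that only ever hops to a direct neighbour, so a ring allreduce is bounded by per-link bandwidth, while the straight cut through the middle crosses just 2k = 32 links.

```python
K = 16  # 16 x 16 torus, as in the thread

def neighbors_on_torus(a, b, k):
    """True if chips a=(row, col) and b are directly linked in a k x k torus."""
    dr, dc = abs(a[0] - b[0]), abs(a[1] - b[1])
    dr, dc = min(dr, k - dr), min(dc, k - dc)  # wraparound links
    return dr + dc == 1

def ring_order(k):
    """Hamiltonian cycle over all k*k chips using only nearest-neighbour links (k even)."""
    assert k % 2 == 0
    order = [(0, c) for c in range(k)]  # row 0, left to right
    for r in range(1, k):
        # snake through columns 1..k-1, alternating direction per row
        cols = range(k - 1, 0, -1) if r % 2 == 1 else range(1, k)
        order += [(r, c) for c in cols]
    order += [(r, 0) for r in range(k - 1, 0, -1)]  # return up column 0
    return order

def bisection_links(k):
    """Links cut when splitting the torus into left/right halves of k/2 columns."""
    cut = 0
    for r in range(k):
        for c in range(k):
            right = (c + 1) % k  # each horizontal link, counted from its left end
            if (c < k // 2) != (right < k // 2):
                cut += 1
    return cut  # vertical links never cross a column cut

ring = ring_order(K)
hops_ok = all(neighbors_on_torus(ring[i], ring[(i + 1) % len(ring)], K)
              for i in range(len(ring)))
print(len(set(ring)), hops_ok, bisection_links(K))
```

Every hop of the ring is a single physical link, so the ring never needs to push more than one link's worth of traffic across any cut.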

"Asynchronous training introduces [] parameter servers that eventually limit parallelization, as the weights get sharded and the bandwidth [] to workers becomes a bottleneck." What about asynchronous distributed training? https://arxiv.org/abs/1908.04207 or https://arxiv.org/abs/2005.00124
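A back-of-the-envelope sketch of the bottleneck in the quote, under simplifying assumptions: gradients are split evenly over a fixed number of parameter-server shards, and only received gradient bytes are counted. A ring allreduce serves as the decentralized contrast; the linked papers use their own, different schemes. The model size and shard count are hypothetical.

```python
def ps_bytes_per_server(workers: int, shards: int, model_bytes: float) -> float:
    """Gradient bytes each parameter-server shard receives per step."""
    return workers * model_bytes / shards

def ring_bytes_per_worker(workers: int, model_bytes: float) -> float:
    """Bytes each worker receives per step in a ring allreduce (reduce-scatter + allgather)."""
    return 2 * (workers - 1) / workers * model_bytes

MODEL_BYTES = 100e6  # hypothetical 100 MB gradient
SHARDS = 8           # hypothetical number of parameter-server shards

for workers in (8, 64, 512):
    ps = ps_bytes_per_server(workers, SHARDS, MODEL_BYTES) / 1e6
    ring = ring_bytes_per_worker(workers, MODEL_BYTES) / 1e6
    print(f"{workers:4d} workers: PS shard {ps:8.1f} MB/step, ring worker {ring:6.1f} MB/step")
```

With a fixed number of shards the per-server load grows linearly with the number of workers, while the per-worker ring traffic stays below twice the model size.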

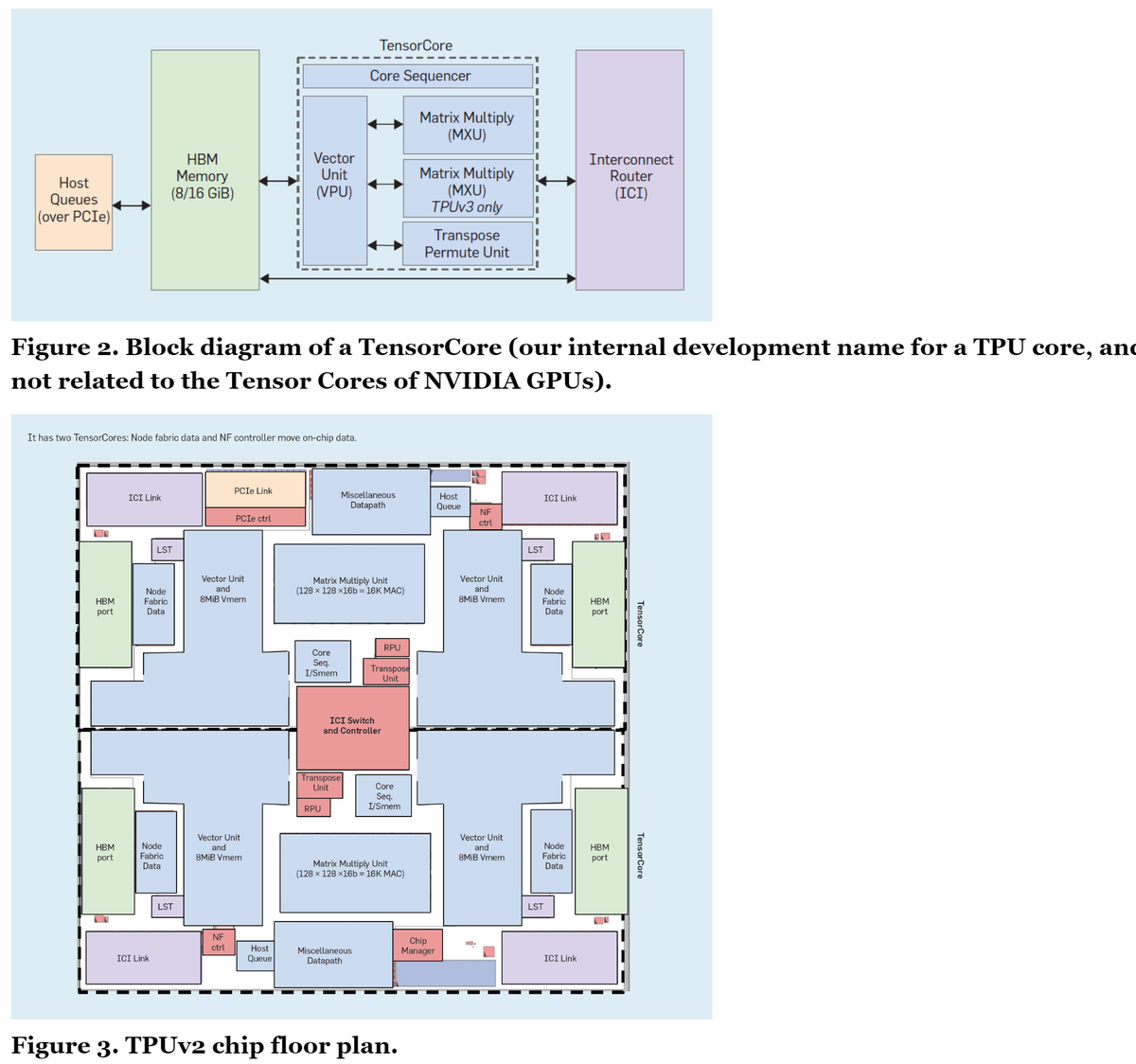

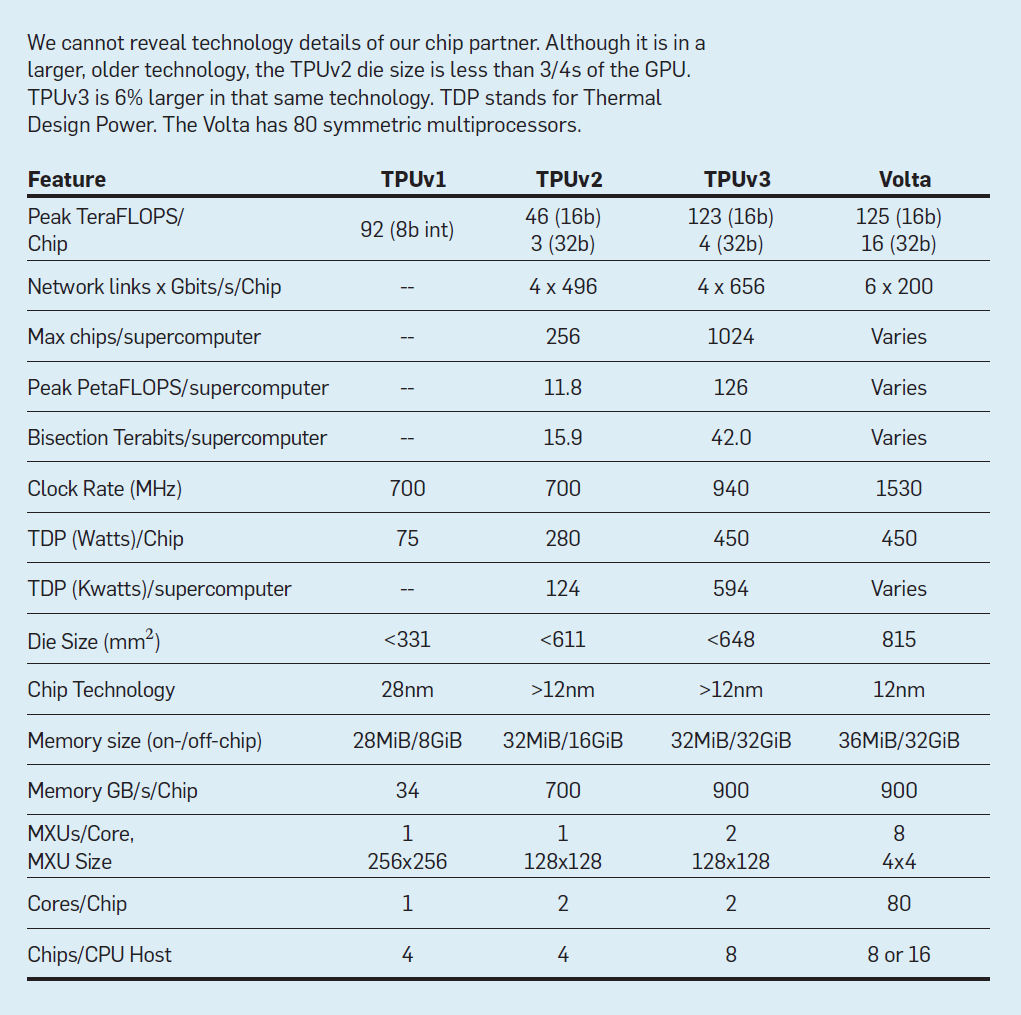

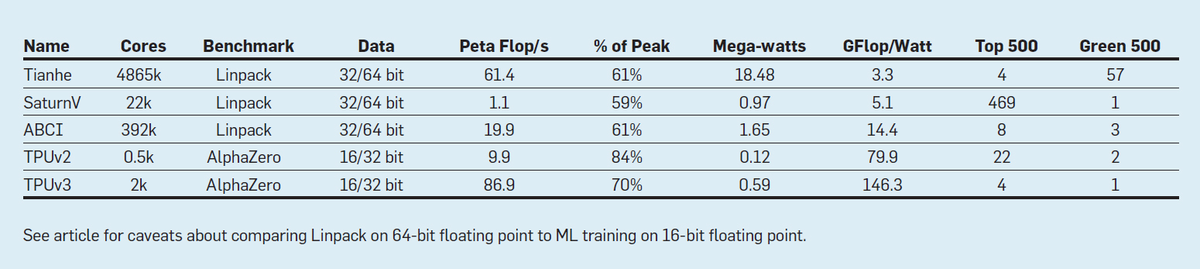

Chip architecture shows at least 32 MiB of SRAM and data layout manipulation units in addition to the obvious systolic MMM and vector units (which seem large but are probably mostly SRAM?). @graphcoreai and @CerebrasSystems go extreme here (minimizing I/O cost with SRAM). Who wins?

Systolic MMM unit size decreased from 256^2 to 128^2 to get higher data movement efficiency. "The bandwidth required to feed and obtain results from an MXU is proportional to its perimeter, while the computation it provides is proportional to its area."
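The perimeter-vs-area scaling in the quote, made concrete under a simplified model (an assumption, not the paper's exact accounting): one value crosses each boundary cell of an n x n array per cycle, giving 4n boundary values, while the array performs n^2 multiply-accumulates. `systolic_array` and `configuration` are hypothetical helper names.

```python
def systolic_array(n: int) -> tuple[int, int]:
    """(boundary values per cycle, MACs per cycle) for one n x n array.

    Simplified assumption: one value crosses each of the 4n boundary cells per cycle.
    """
    return 4 * n, n * n

def configuration(count: int, n: int) -> tuple[int, int]:
    """Totals for `count` identical n x n arrays."""
    io, macs = systolic_array(n)
    return count * io, count * macs

for count, n in [(1, 256), (4, 128)]:
    io, macs = configuration(count, n)
    print(f"{count} x {n}^2: {macs} MACs/cycle, {io} boundary values/cycle, "
          f"{macs / io:.0f} MACs per boundary value")
```

Under this model MACs per boundary value equal n/4, so the ratio grows linearly with the array side; one 256^2 array and four 128^2 arrays offer the same MACs with different boundary bandwidth.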

Comparison with V100 shows similar peak flops and memory size/bandwidth, 2x network bandwidth (good choice!), a smaller die in a larger process (cost!), and high specialization towards tensor contraction workloads (four MMM units with 128^2 instead of V100's 640 units with 4^2).
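The "similar peak flops" follows from the unit counts above. A quick check, where the clock rates are assumptions not stated in the thread (about 940 MHz for TPUv3, and a 1.53 GHz boost clock for V100), and each V100 tensor core is taken to do a 4x4x4 matrix FMA (64 MACs) per cycle:

```python
def peak_tflops(macs_per_cycle: int, clock_ghz: float) -> float:
    """Peak TFLOPS, counting one multiply-accumulate as 2 floating-point operations."""
    return 2 * macs_per_cycle * clock_ghz * 1e9 / 1e12

# TPUv3 chip: four 128 x 128 systolic arrays, one MAC per cell per cycle
tpu_macs = 4 * 128 * 128       # 65,536 MACs/cycle
# V100: 640 tensor cores, each a 4x4x4 matrix FMA per cycle
v100_macs = 640 * 4 * 4 * 4    # 40,960 MACs/cycle

TPU_CLOCK_GHZ = 0.94   # assumption
V100_CLOCK_GHZ = 1.53  # assumption: boost clock

print(f"TPUv3: {peak_tflops(tpu_macs, TPU_CLOCK_GHZ):.0f} TFLOPS")
print(f"V100:  {peak_tflops(v100_macs, V100_CLOCK_GHZ):.0f} TFLOPS")
```

Fewer, much larger units at a lower clock land at roughly the same peak as many tiny units at a higher clock.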

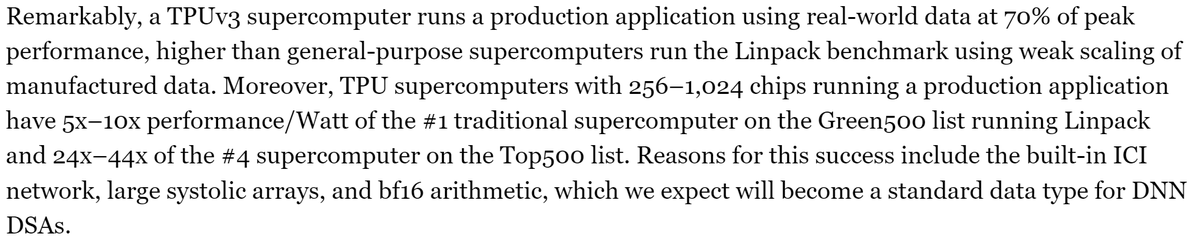

The comparison with @top500supercomp is unfortunately nonsense. HPL is tightly coupled and has very strict rules (disallowing tournament pivoting etc.). Yes, it's antiquated and mostly there for historical reasons. Comparing it with DNN training à la AlphaZero is bogus ...

... as DNN computations can be distributed with MUCH smaller cuts and are not as tightly coupled as HPL! And of course FP64 vs. BF16. Gosh, even the "Data" column misleads by hiding proportions! I'm disappointed that this made it past the @CACMmag referees.
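To see how far apart the two number formats are, a minimal sketch (standard library only) that rounds a value to bfloat16 by keeping the top 16 bits of its float32 encoding, with round-to-nearest-even. It assumes finite inputs and does not special-case NaN or infinity. BF16 keeps 8 significant bits (7 stored) versus 53 for FP64, so 1 + 2^-8 already collapses to 1.

```python
import struct

def to_bf16(x: float) -> float:
    """Round x to the nearest bfloat16 value (ties to even), via float32."""
    # Reinterpret the float32 encoding of x as a 32-bit unsigned integer.
    (bits,) = struct.unpack("<I", struct.pack("<f", x))
    # bfloat16 keeps the top 16 bits (sign, 8 exponent bits, 7 mantissa bits);
    # adding this bias makes dropping the low 16 bits round to nearest, ties to even.
    bias = 0x7FFF + ((bits >> 16) & 1)
    bits = ((bits + bias) >> 16) << 16
    (y,) = struct.unpack("<f", struct.pack("<I", bits & 0xFFFFFFFF))
    return y

for value in (1.0 + 2**-7, 1.0 + 2**-8, 3.141592653589793):
    print(f"FP64 {value!r:<20} -> BF16 {to_bf16(value)!r}")
```

One BF16 multiply-add and one FP64 multiply-add are simply not the same unit of work, which is part of why ranking them on one FLOPS axis misleads.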
