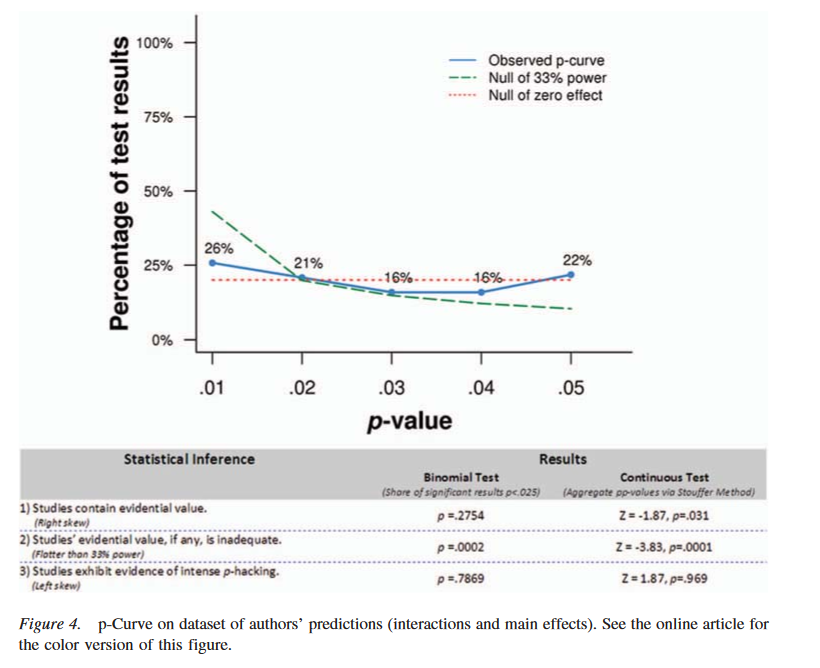

A meta-analysis of behavioral priming effects suggests a meta-analytic effect size of d = 0.35. https://www.apa.org/pubs/journals/features/bul-bul0000030.pdf But it also contains the flattest p-curve analysis you'll see in a while. Guess which of these analyses you should trust more? (Hint: It's the p-curve.)

As you would expect, all publication bias alarm bells go off in the study. Regrettably, the bias detection is not state of the art. E.g., after trim-and-fill, the authors conclude this analysis is "suggesting a significant effect after accounting for publication bias."

However, as the Cochrane handbook reminds us: "there is no guarantee that the adjusted intervention effect matches what would have been observed in the absence of publication bias" https://handbook-5-1.cochrane.org/chapter_10/10_4_4_2_trim_and_fill.htm

It's interesting that in my second MOOC, where I teach about bias correction techniques (week 4), the meta-analysis you will simulate with a true null but massive bias will also yield a d = 0.3. With small to medium samples, that's the effect size estimate you get with huge bias!
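A minimal sketch of that kind of simulation (not the actual MOOC exercise): the true effect is exactly zero, but positive significant results always get published and everything else only rarely. The sample size range, the 2% publication rate for non-significant results, the normal-approximation significance check, and all function names are assumptions chosen for illustration.

```python
import math
import random
import statistics


def simulate_study(n_per_group, rng, true_d=0.0):
    """Simulate one two-group study; return (Cohen's d, approximate variance of d)."""
    control = [rng.gauss(0.0, 1.0) for _ in range(n_per_group)]
    treated = [rng.gauss(true_d, 1.0) for _ in range(n_per_group)]
    pooled_sd = math.sqrt(
        (statistics.variance(control) + statistics.variance(treated)) / 2
    )
    d = (statistics.mean(treated) - statistics.mean(control)) / pooled_sd
    # Large-sample variance of d for two equal groups of size n.
    var_d = 2 / n_per_group + d ** 2 / (4 * n_per_group)
    return d, var_d


def pooled_estimate(studies):
    """Fixed-effect (inverse-variance weighted) meta-analytic estimate."""
    weights = [1 / var_d for _, var_d in studies]
    return sum(w * d for w, (d, _) in zip(weights, studies)) / sum(weights)


def simulate_literature(n_published, publish_nonsig_prob, rng):
    """Run studies of a true null until n_published have made it into print."""
    published, everything = [], []
    while len(published) < n_published:
        n_per_group = rng.randint(20, 60)  # assumed "small to medium" samples
        d, var_d = simulate_study(n_per_group, rng)
        everything.append((d, var_d))
        # Assumption: z-approximation, significant in the predicted direction.
        significant = d / math.sqrt(var_d) > 1.96
        if significant or rng.random() < publish_nonsig_prob:
            published.append((d, var_d))
    return published, everything


rng = random.Random(2016)
published, everything = simulate_literature(100, 0.02, rng)
biased_d = pooled_estimate(published)
unbiased_d = pooled_estimate(everything)
print(f"published studies only: d = {biased_d:.2f}")
print(f"all studies run:        d = {unbiased_d:.2f}")
```

The published literature yields a clearly positive pooled estimate even though every study sampled from a true null, while the estimate across all studies that were run stays close to zero.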

An important question we should ask ourselves is what we need to establish whether an effect is 'real'. People sometimes mistakenly think that a meta-analysis will tell you what the 'real' effect is. But that should rarely be the goal of a meta-analysis.

A more important goal is to examine heterogeneity in effect sizes, of which there was plenty in the meta-analysis: I² was 62.45% (95% CI [57.89, 66.51]). It makes no sense to say there is 'an' effect of d = 0.35 with this much heterogeneity.
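For readers who want to see where a number like that comes from, here is a small sketch of the standard I² calculation from Cochran's Q. The function name and the two-study input are hypothetical and are not taken from the meta-analysis.

```python
def i_squared(effects, variances):
    """Return I² (in percent) from study effect sizes and their variances."""
    weights = [1 / v for v in variances]
    pooled = sum(w * d for w, d in zip(weights, effects)) / sum(weights)
    # Cochran's Q: weighted squared deviations from the pooled estimate.
    q = sum(w * (d - pooled) ** 2 for w, d in zip(weights, effects))
    df = len(effects) - 1
    if q <= df:
        return 0.0  # no more variation than sampling error alone predicts
    return (q - df) / q * 100


# Hypothetical input: two equally precise studies that disagree strongly.
example_i2 = i_squared([0.0, 0.5], [0.01, 0.01])
print(f"I² = {example_i2:.1f}%")
```

I² is the share of the observed variation in effect sizes that is not attributable to sampling error, which is why a high value undermines the idea of a single effect size.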

With large heterogeneity, all bias tests start working less well, so there really is no way to know what a reasonable effect size estimate is. People sometimes wonder what is worth more: a meta-analysis, or a multi-lab replication project. In this field it is clearly the latter!

PS: This is a meta-analysis from 2016. There have been surprisingly few large replication studies in this field over the last 5 years. I wonder why?
