I mentioned this elsewhere but wanted to post it here too. Researchers might want to reconsider using the original three Cognitive Reflection Test (CRT) questions in online studies. Much has changed in the past few years.

Many current MTurkers have answers memorized/bookmarked. Bots may be programmed w/ answers too. https://www.reddit.com/r/TurkerNation/comments/9nx8wc/lily_pads_and_bats_balls_what_survey_answers_have/

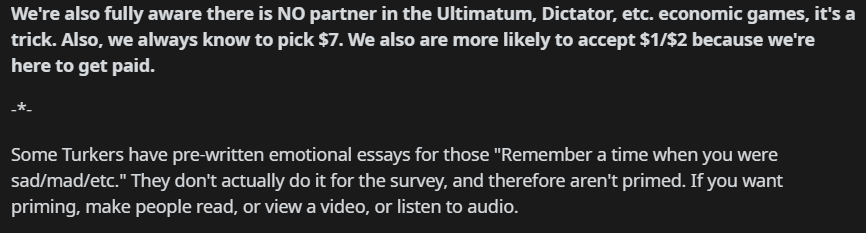

As the linked post (from an MTurk subreddit) notes, many MTurkers likely have pre-written answers to "priming" questions and simply copy and paste them. This helps explain why many MTurkers finish HITs w/ writing prompts in blazingly fast times.

Here are examples of browser scripts they use to highlight words from commonly asked (i.e., overused) scales, psychometric tasks, and attention checks, so they can paste in pre-written answers or pull up bookmarked ones.

https://greasyfork.org/en/scripts/8093-mturk-survey-highlight-words https://chrome.google.com/webstore/detail/multihighlighter/ocifbglmlbpgpbflnkfpclkmckoollbn?hl=en

Lastly, many issues w/ online data quality could easily be solved if more (ideally all) researchers compensated workers fairly (i.e., treated them like actual human beings) and weren't lazy or sloppy w/ research design.

Shit pay + shit design = shit data https://blog.turkerview.com/2019-sentiment-analysis-mturk-wages-measuring-fairness/

Forgot to add: another glaring issue with the CRT (in general) is that we still don't know for sure what it specifically measures. https://twitter.com/wimdeneys/status/1273321415111053313