Bayesian decoders. I really enjoyed my first discussion with the groups I am mentoring @neuromatch #NeuromatchAcademy. However, it seems that Bayesian decoders sound like magic to many. They’re not! 1/

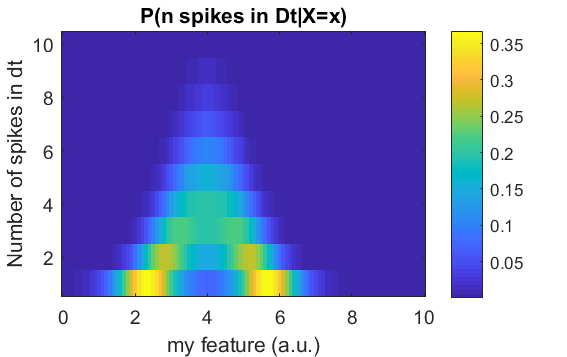

First: to decode, you need to know the encoder P(N = n spikes | X=x) which literally translates to: what is the probability to observe N=n spikes in a given time window knowing your feature X=x (think Contrast = +2 or Position = top left corner). 2/

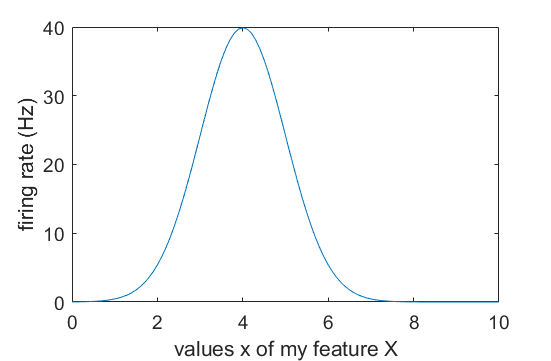

Let’s write this probability P(n|x) for the sake of clarity. It directly depends on the tuning curve of the neuron, you know, the function r(x) which gives the firing rate for every value of your feature X. For example, this: 3/

To get P(n|x) from r(x), you make a first assumption: your neuron emits spikes following a Poisson process. Yes, it’s not always true, but it works fine. Mathematically:

In a time window Dt for X=x, the expected number of spikes is Dt * r(x), and the probability of observing exactly n spikes is: P(n|x) = (Dt * r(x))^n * exp(-r(x) * Dt) / n!

4/
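As a minimal sketch of this step, the snippet below turns a tuning curve into P(n|x). The Gaussian shape of `tuning_curve`, its parameters and the 100 ms window are assumptions made for illustration only; any r(x) would do.

```python
import math

def tuning_curve(x, peak_rate=20.0, center=0.0, width=1.0):
    """Hypothetical Gaussian tuning curve r(x), in spikes per second."""
    return peak_rate * math.exp(-0.5 * ((x - center) / width) ** 2)

def poisson_likelihood(n, x, dt=0.1):
    """P(n | x): probability of n spikes in a window of dt seconds."""
    mean_count = dt * tuning_curve(x)  # expected number of spikes, Dt * r(x)
    return mean_count ** n * math.exp(-mean_count) / math.factorial(n)

# Sanity check: for a fixed x, the probabilities over all counts sum to one.
total = sum(poisson_likelihood(n, x=0.0) for n in range(50))
```

Here `total` only sums the first 50 counts, which is plenty when the expected count is small.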

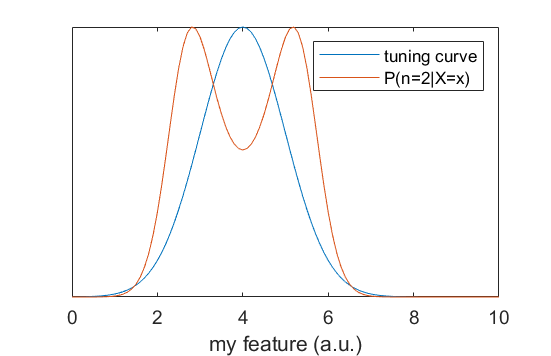

If we observe a lot of spikes, we're at the peak! Why two bumps for small n? The only place where it is likely to observe one or two spikes is at the two tails of the tuning curve. Better viewed here: 6/
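The two-bump effect can be checked numerically. This is a sketch under assumptions: the bell-shaped `tuning_curve` with a 100 Hz peak, the 100 ms window and the grid of x values are all made up for the example.

```python
import math

def tuning_curve(x, peak_rate=100.0):
    """Hypothetical bell-shaped tuning curve r(x), in spikes per second."""
    return peak_rate * math.exp(-0.5 * x ** 2)

def likelihood(n, x, dt=0.1):
    """Poisson P(n | x) for a window of dt seconds."""
    m = dt * tuning_curve(x)  # expected spike count at x
    return m ** n * math.exp(-m) / math.factorial(n)

xs = [(i - 40) / 10 for i in range(81)]  # grid from -4.0 to 4.0

def most_likely_x(n):
    """Grid value of x at which observing n spikes is most probable."""
    return max(xs, key=lambda x: likelihood(n, x))

x_many = most_likely_x(10)  # many spikes: lands on the peak of r(x)
x_few = most_likely_x(2)    # few spikes: lands on one of the two flanks
```

With two spikes, the curve has one bump on each side of the peak; `max` simply returns the first of the two equally good values.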

But wait, here, we are already talking about decoding, aren’t we? This probability really looks like “what is the probability to observe X=x knowing the neuron has emitted two spikes in 100ms”. How come? 7/

This is the spirit of Bayes rule. Mathematically, it tells us:

P(x|n) * P(n) = P(n|x) * P(x).

P(x|n) is literally your decoder, that is the probability to observe x knowing n. It is directly related to P(n|x), the encoder we have already calculated. There are two other terms. 8/

The first is P(n), the overall probability of observing n spikes whatever x is (basically, it reflects the average firing rate). Most of the time you can forget about it because it is enough to renormalize your probabilities so that they sum up to one. 9/

The second is P(x). You can also forget about this one unless the “occupancy” of your feature space is not homogeneous, for example, the animal has not equally explored all spatial locations if you decode position from hippocampal place cells. 10/
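Here is a small sketch of how these two terms enter in practice, on a grid of x values. The firing rates and the two priors (`flat` and `occupancy`) are invented numbers, only there to show the effect of uneven occupancy.

```python
import math

def posterior(n, rates, prior, dt=0.1):
    """P(x | n) on a grid of x values: likelihood times prior, renormalized."""
    unnormalized = []
    for rate, p_x in zip(rates, prior):
        m = dt * rate  # expected spike count at this x
        likelihood = m ** n * math.exp(-m) / math.factorial(n)
        unnormalized.append(likelihood * p_x)
    total = sum(unnormalized)  # this is P(n) when the prior sums to one
    return [u / total for u in unnormalized]

rates = [5.0, 20.0, 50.0, 20.0, 5.0]   # hypothetical r(x) at five positions
flat = [0.2] * 5                       # every position visited equally
occupancy = [0.4, 0.3, 0.1, 0.1, 0.1]  # animal mostly stayed on the left

p_flat = posterior(2, rates, flat)
p_occ = posterior(2, rates, occupancy)
```

With the flat prior the posterior is symmetric; with the uneven occupancy the same two spikes favor the left positions.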

Finally, to decode your feature X, just take the “argmax” of P(x|n), that is, for which x is the probability of my decoder maximal? Yes, just the “max” function in most programming languages. 11/
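In code, the argmax is the position of the maximum rather than the maximum itself. A minimal sketch, with a made-up grid and made-up probabilities:

```python
def argmax_decode(xs, posterior):
    """Return the feature value whose posterior probability is largest."""
    best_index = max(range(len(posterior)), key=lambda i: posterior[i])
    return xs[best_index]

xs = [-1.0, 0.0, 1.0]          # hypothetical feature grid
p_x_given_n = [0.2, 0.7, 0.1]  # hypothetical decoder output P(x | n)
decoded = argmax_decode(xs, p_x_given_n)
```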

OK, but now, we want to decode from a population of neurons, not just a single one. So we want: P(x|n1,n2..nk) for k neurons emitting n1, n2 and nk spikes respectively (in the same time window). How do we compute this? 12/

Here comes our second assumption: neurons are independent (given x)! Again, not always true, but works fine, believe me. In probability, this simplifies our life a looooot, because now (up to a normalization, and with a flat P(x)) we can write:

P(x|n1,n2..nk) ∝ P(x|n1) * P(x|n2) *…* P(x|nk)

Just need individual r1(x), r2(x),... 13/
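A possible sketch of the population decoder, assuming a flat P(x) and summing log-probabilities instead of multiplying probabilities (same argmax, fewer numerical problems). The three Gaussian tuning curves, the 1 Hz baseline and the spike counts are hypothetical.

```python
import math

def make_tuning_curve(center, peak_rate=50.0, baseline=1.0):
    """Hypothetical Gaussian tuning curve; the baseline keeps r(x) above zero."""
    return lambda x: baseline + peak_rate * math.exp(-0.5 * (x - center) ** 2)

def decode_population(counts, tuning_curves, xs, dt=0.1):
    """Grid value of x maximizing P(x | n1..nk), with a flat P(x) assumed."""
    log_posterior = []
    for x in xs:
        total = 0.0
        for n, r in zip(counts, tuning_curves):
            m = dt * r(x)  # expected count of this neuron at x
            total += n * math.log(m) - m - math.lgamma(n + 1)  # log Poisson
        log_posterior.append(total)
    best = max(range(len(xs)), key=lambda i: log_posterior[i])
    return xs[best]

curves = [make_tuning_curve(c) for c in (-2.0, 0.0, 2.0)]
xs = [(i - 30) / 10 for i in range(61)]  # grid from -3.0 to 3.0
decoded = decode_population([0, 1, 6], curves, xs)
```

The third neuron fires the most, so the decoded value falls close to its preferred x.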

Et voilà! Just take the argmax of P(x|n1,n2..nk) and you have made a beautiful Bayesian decoder. Of course, you can use tricks to make it faster (e.g. in Matlab, expressing this in terms of matrix products is hard to beat). 14/
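To see where a matrix product can come from, here is a sketch of the log of the population likelihood under the two assumptions (Poisson spiking, independent neurons), assuming r_i(x) > 0. The notation (\Delta t for Dt, and "const" for everything that does not depend on x) is introduced here for illustration.

```latex
\begin{aligned}
\log P(n_1,\dots,n_k \mid x)
  &= \sum_{i=1}^{k} \Big[ n_i \log\big(\Delta t\, r_i(x)\big) - \Delta t\, r_i(x) - \log n_i! \Big] \\
  &= \sum_{i=1}^{k} n_i \log r_i(x) \;-\; \Delta t \sum_{i=1}^{k} r_i(x) \;+\; \text{const}
\end{aligned}
```

If the spike counts of T time windows are stacked in a T-by-k matrix and the log tuning curves sampled at M values of x in a k-by-M matrix, the first term for all windows and all x is a single matrix product. The second term is one number per x, and with a flat P(x) the argmax over x is unchanged by the constant.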

In summary, for this recipe you need:

some tuning curves

a Poisson distribution (your first assumption)

independent neurons (your second assumption)

a "max" function (available in all good programming languages) 15/

For reference, read and reread Zhang et al., 1998

https://journals.physiology.org/doi/full/10.1152/jn.1998.79.2.1017

This is where I learned all this!! 16/

Also, some more material I presented at the Q-life winter school in Paris last February on this topic:

https://github.com/PeyracheLab/Class-Decoding

Have fun decoding!! 17/
