I finally jumped on the GPT-3 bandwagon and read the 72-page paper released by @OpenAI. Here is a summary of some technical details:

Model: largely the same as GPT-2, but alternates between dense and sparse attention layers, as in Sparse Transformers (OpenAI's 2019 work).

1/n

Model (cont'): sparse factorizations of the attention matrix reduce compute time and memory use. The authors trained 8 model sizes, ranging from 125M parameters (12 layers) to 175B parameters (96 layers). The context window is 2048 tokens.

2/n

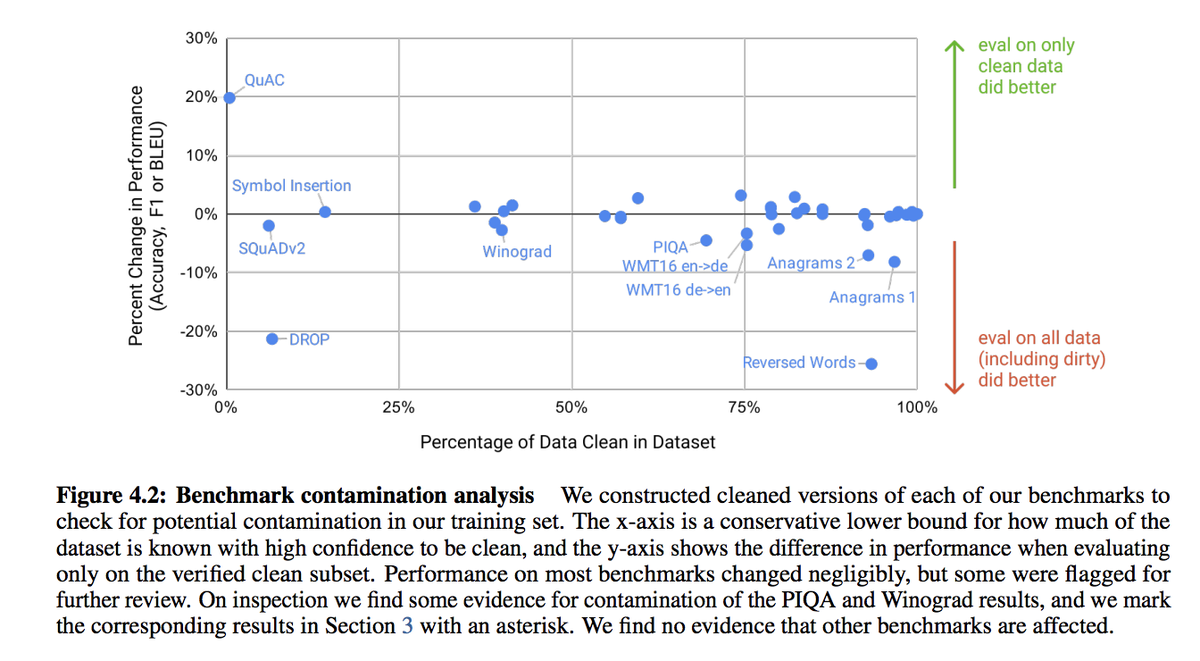
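
To make the dense vs. sparse idea a bit more concrete, here is a minimal sketch of two causal attention masks: a dense one and a locally banded one. The window size, the toy sequence length, and the even/odd layer alternation are my own assumptions for illustration, not values taken from the paper.

```python
def dense_causal_mask(n):
    """mask[i][j] is True when position i may attend to position j (j <= i)."""
    return [[j <= i for j in range(n)] for i in range(n)]


def banded_causal_mask(n, window):
    """Causal mask limited to the `window` most recent positions (including i)."""
    return [[0 <= i - j < window for j in range(n)] for i in range(n)]


def count_allowed(mask):
    """Number of (query, key) pairs the mask lets through."""
    return sum(sum(row) for row in mask)


n, window = 8, 3  # toy sizes, assumed for illustration only
dense = dense_causal_mask(n)
banded = banded_causal_mask(n, window)

# Hypothetical alternation: even layers dense, odd layers banded.
layer_patterns = ["dense" if k % 2 == 0 else "banded" for k in range(6)]

print(count_allowed(dense), count_allowed(banded))
print(layer_patterns)
```

The dense mask grows quadratically with sequence length while the banded one grows linearly, which is where the compute and memory savings come from.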

Data: filtered Common Crawl (410B tokens, downsampled x0.44) + WebText dataset (19B, x2.9) + two Internet-based book corpora (12B x1.9, 55B x0.43) + English Wiki (3B, upsampled x3.4). Efforts were made to remove overlap with evaluation datasets, but unfortunately a bug in the filtering left some overlap in place.

3/n
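
A quick back-of-the-envelope check of the mixture above: multiplying each dataset's token count by its sampling factor gives the tokens actually seen during training. The numbers are the ones quoted in this thread; the dictionary keys and variable names are mine.

```python
# (tokens in dataset, sampling factor) as quoted in the thread
datasets = {
    "Common Crawl (filtered)": (410e9, 0.44),
    "WebText": (19e9, 2.9),
    "Books (corpus 1)": (12e9, 1.9),
    "Books (corpus 2)": (55e9, 0.43),
    "English Wikipedia": (3e9, 3.4),
}

# Tokens effectively consumed from each source during training.
effective = {name: tokens * factor for name, (tokens, factor) in datasets.items()}
total = sum(effective.values())

for name, seen in effective.items():
    print(f"{name:26s} {seen / 1e9:7.2f}B  ({seen / total:5.1%} of the mix)")
print(f"{'Total':26s} {total / 1e9:7.2f}B")
```

So Common Crawl still dominates the mix even after downsampling, and the total comes out at roughly 292B tokens.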

Data (cont'): the authors are very candid about this data leakage issue when reporting results and offer some detailed analyses (see figure below). Note that this doesn't affect the model's impressive capability, only the fairness of downstream task comparisons.

4/n

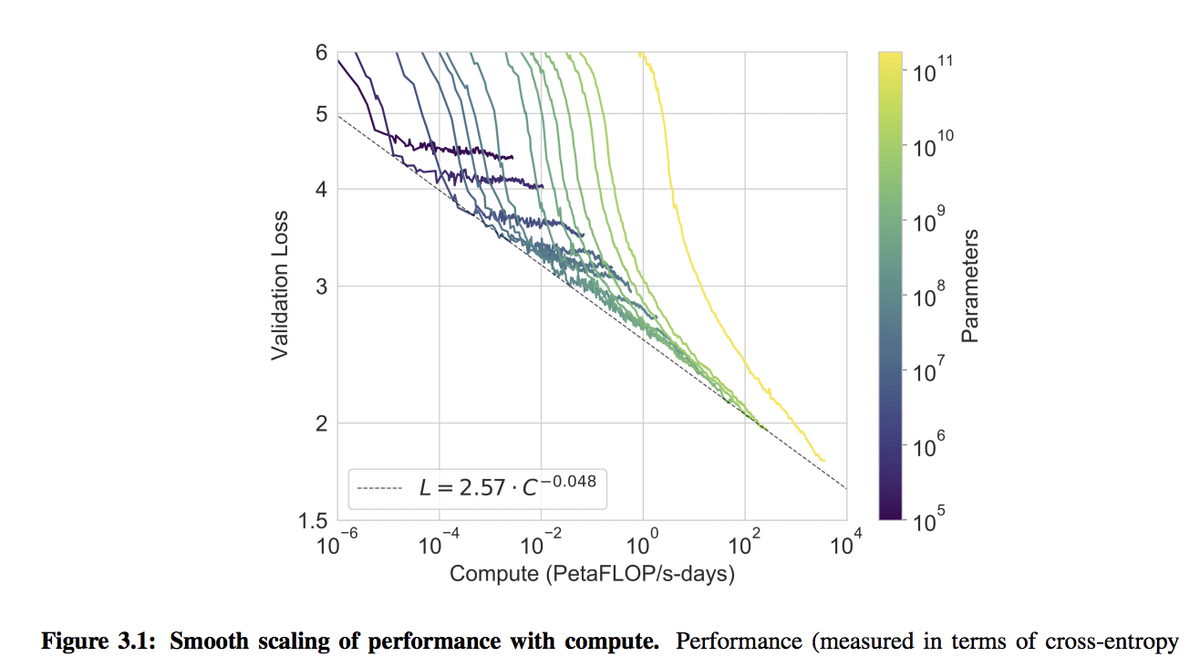

Training: a formidable 3640 petaflop/s-days to train the largest GPT-3 language model (175B parameters). 1 petaflop/s-day is equivalent to 8 V100 GPUs running at full efficiency for a day. Training used a gradually increasing batch size, learning rate warmup and decay, and model parallelism.

5/n
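
To get a feel for that number, here is the arithmetic as a small sketch. It uses only the two figures quoted above (3640 petaflop/s-days, 8 V100s per petaflop/s-day); the 1024-GPU cluster size is a made-up example, and real-world utilization would be well below "full efficiency".

```python
PFS_DAYS = 3640            # petaflop/s-days for the 175B model (from the thread)
V100_PER_PFS_DAY = 8       # V100s at full efficiency per petaflop/s-day (from the thread)
SECONDS_PER_DAY = 86_400

gpu_days = PFS_DAYS * V100_PER_PFS_DAY          # V100-days at full efficiency
gpu_years = gpu_days / 365
total_flop = PFS_DAYS * 1e15 * SECONDS_PER_DAY  # total floating-point operations


def wall_clock_days(num_gpus):
    """Idealized training time on `num_gpus` V100s, ignoring all overheads."""
    return gpu_days / num_gpus


print(f"{gpu_days} V100-days (~{gpu_years:.1f} V100-years)")
print(f"{total_flop:.3e} FLOP in total")
print(f"{wall_clock_days(1024):.1f} days on a hypothetical 1024-GPU cluster")
```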

To be continued ...

6/n

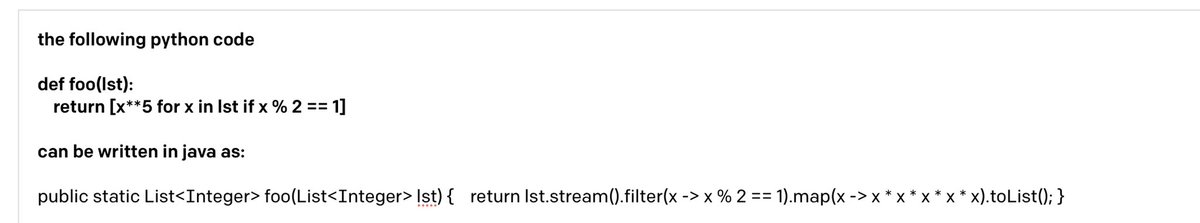

Findings (cont'): the most impressive example is actually not in the paper but this one from @yoavgo: code translation from Python to Java (bold is the prompt given to GPT-3, plain text is the output). Thanks to @alan_ritter for pointing me to this.

https://twitter.com/yoavgo/status/1285357605355954176

8/n

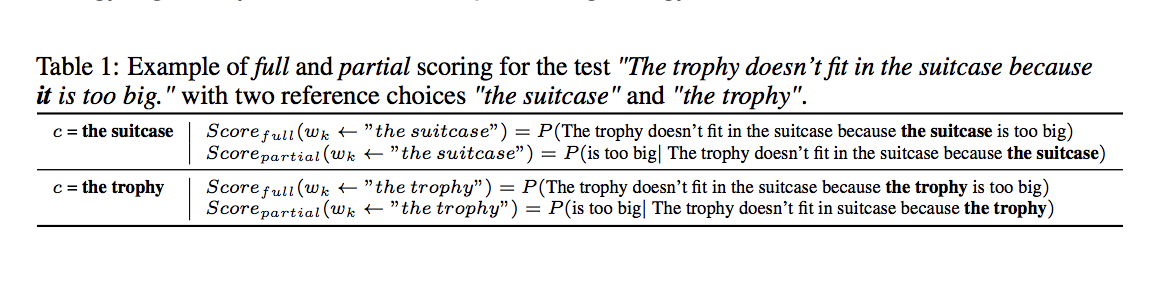

Findings (cont'): to complete Winograd tasks, GPT-3 and other language models can be queried with the method (see figure below) first proposed by @thtrieu_ and @quocleix from @GoogleBrain in 2018.

Extra results that compare XLNet to GPT-3 here: https://twitter.com/joeddav/status/1285238992724787200

9/n

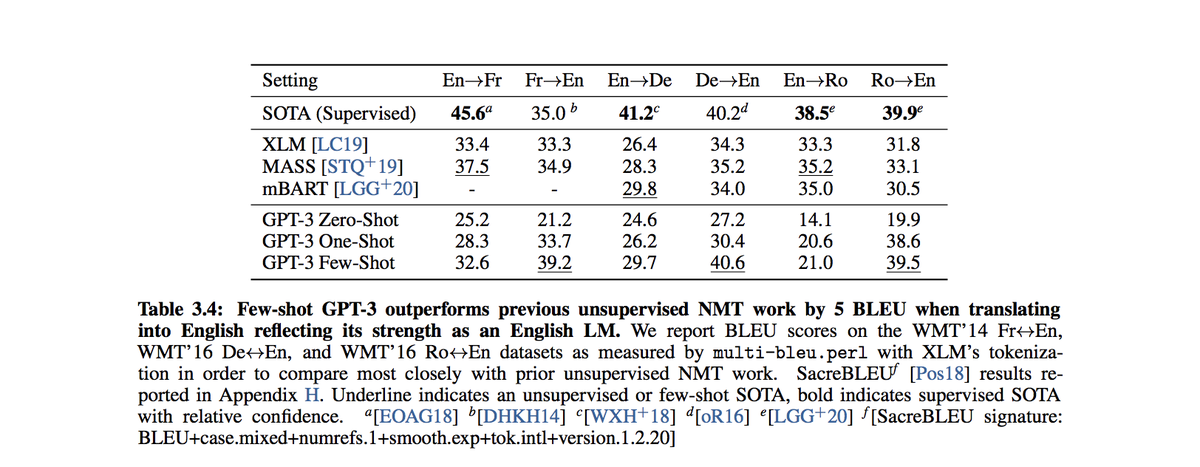
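
The gist of that querying method, as I understand it: substitute each candidate referent into the sentence, score each version with the language model, and pick the more probable one. Below is a toy sketch of that idea only. The smoothed bigram model is a stand-in for a real LM, and the corpus, template, and function names are all hypothetical.

```python
import math
from collections import Counter


def train_bigram_lm(corpus):
    """Return a log-probability scorer backed by an add-one smoothed bigram model."""
    token_lists = [s.lower().split() for s in corpus]
    unigrams = Counter(t for toks in token_lists for t in toks)
    bigrams = Counter(p for toks in token_lists for p in zip(toks, toks[1:]))
    vocab = len(unigrams)

    def log_prob(sentence):
        toks = sentence.lower().split()
        return sum(
            math.log((bigrams[(a, b)] + 1) / (unigrams[a] + vocab))
            for a, b in zip(toks, toks[1:])
        )

    return log_prob


def resolve(template, candidates, log_prob):
    """Fill the `_` slot with each candidate and keep the highest-scoring one."""
    scores = {c: log_prob(template.replace("_", c)) for c in candidates}
    return max(scores, key=scores.get), scores


# Tiny made-up corpus; a real setup would query a large pretrained LM instead.
toy_corpus = ["the trophy is too big", "the suitcase is too small", "the trophy is big"]
scorer = train_bigram_lm(toy_corpus)

template = "the trophy does not fit in the suitcase because the _ is too big"
best, scores = resolve(template, ["trophy", "suitcase"], scorer)
print(best, scores)
```

The toy model only wins here because of word counts in the tiny corpus; the interesting part is that the same substitute-and-compare loop works unchanged with a much stronger scorer.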

Findings (cont'): MT results look good when translating into English but less so the other way around. Two likely reasons: (1) only 7% of GPT-3's training data is non-English; (2) GPT-3 reuses GPT-2's BPE vocabulary of 50,257 pieces, which was built from almost entirely English text.

10/n
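
One rough way to see why an English-centric vocabulary hurts: GPT-2's BPE starts from UTF-8 bytes, and non-Latin scripts need more bytes per character before any merges are applied. The snippet below only measures raw bytes per character as a loose proxy; it does not run the actual tokenizer, and the sample strings are my own.

```python
def utf8_bytes_per_char(text):
    """Average number of UTF-8 bytes needed per character of `text`."""
    return len(text.encode("utf-8")) / len(text)


# Sample strings chosen for illustration only.
samples = {
    "English": "hello world",
    "Russian": "привет мир",
    "Japanese": "こんにちは世界",
}

ratios = {lang: utf8_bytes_per_char(text) for lang, text in samples.items()}
for lang, ratio in ratios.items():
    print(f"{lang:9s} {ratio:.1f} bytes/char")
```

With merges learned mostly from English, those longer byte sequences are less likely to collapse into a few tokens, so non-English text tends to use up more of the 2048-token context.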

Bonus point: more amazing examples are on Twitter than in the paper. Here is another one that simplifies legal text into lay language by @michaeltefula:

https://twitter.com/michaeltefula/status/1285505897108832257

11/n
