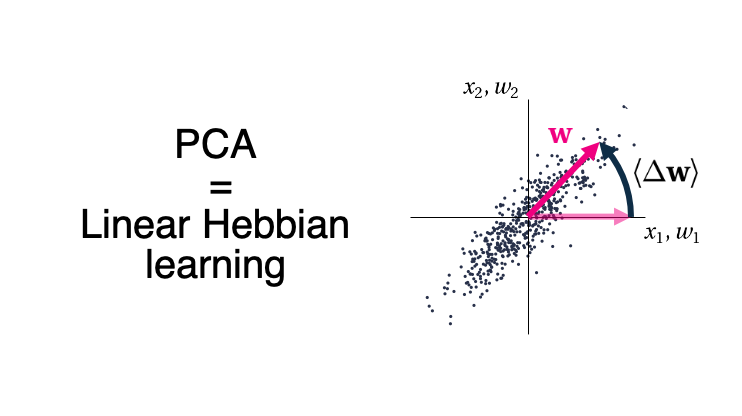

You have probably heard of principal component analysis (PCA) as a powerful tool for dimensionality reduction, but did you know that neurons might perform PCA via Hebbian plasticity? (1/6)

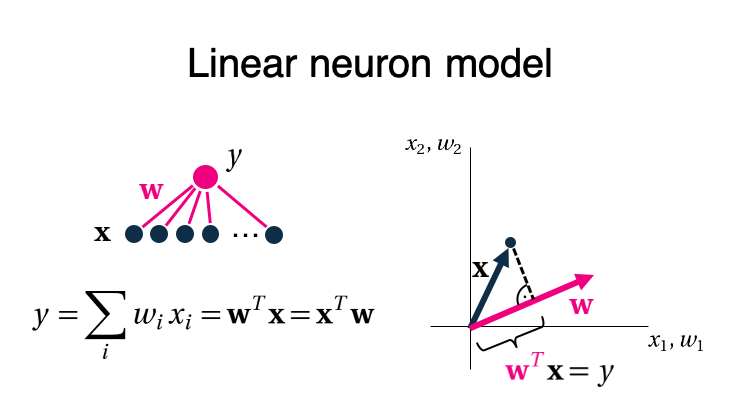

Assume a simple model where the firing rate 'y' of a neuron is given by a weighted sum of its inputs 'x'. This is equal to the scalar product between a weight vector 'w' and a multivariate data point 'x'. (2/6)
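
Written out for a neuron with n inputs (the index i and the count n are notation introduced here for illustration, not taken from the thread), the model reads:

$$
y = \sum_{i=1}^{n} w_i x_i = \mathbf{w}^\top \mathbf{x}
$$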

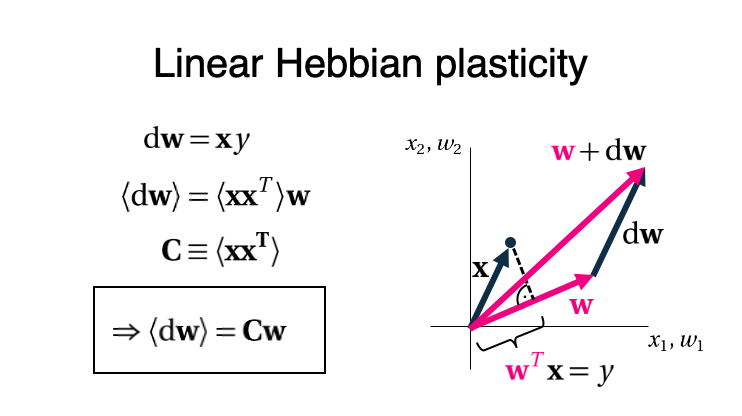

Now let's update our weights according to a Hebbian rule, i.e., the synaptic change 'dw' is proportional to the product of pre- and postsynaptic activity. Then the expected change '<dw>' depends on the input covariance matrix 'C'. (3/6)
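
To see why the covariance matrix shows up, here is a minimal NumPy sketch. It assumes zero-mean inputs, a learning rate `eta`, and made-up 2-D data; none of these values come from the thread. Averaging the per-sample Hebbian update `eta * y * x` over the data gives `eta * C @ w`.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical correlated 2-D inputs (illustrative values only).
X = rng.multivariate_normal(mean=[0.0, 0.0],
                            cov=[[3.0, 1.0], [1.0, 1.0]],
                            size=10_000)
X = X - X.mean(axis=0)        # centre the data so that <x x^T> is the covariance

w = np.array([0.5, -0.2])     # arbitrary current weight vector
eta = 0.01                    # learning rate (assumed)

y = X @ w                     # postsynaptic rate for every data point
dw = eta * y[:, None] * X     # Hebbian update eta * y * x, one row per data point
mean_dw = dw.mean(axis=0)     # empirical average <dw>

C = X.T @ X / len(X)          # empirical covariance matrix of the centred data
expected_dw = eta * (C @ w)   # prediction <dw> = eta * C w

print(np.allclose(mean_dw, expected_dw))
```

The match is an algebraic identity, since y = w·x turns the average of y·x into the average of x xᵀ applied to w.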

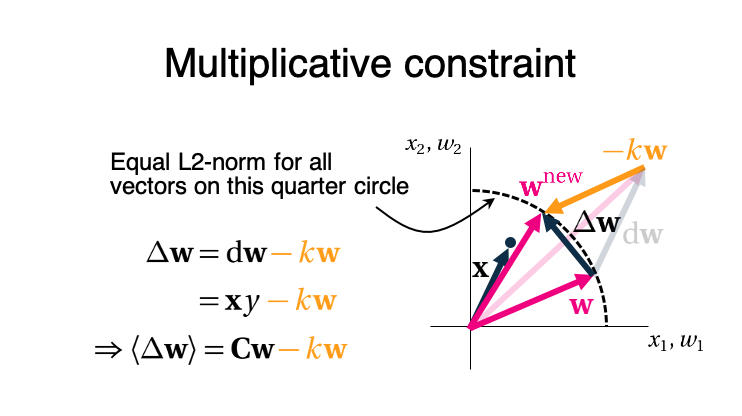

In order to prevent unlimited growth, we subtract a multiple 'k' of the weight vector, such that the sum of the squared synaptic weights (the squared L2-norm) remains constant. Observe that the new weight vector is now much closer to the presented data point 'x'. (4/6)
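
A minimal sketch of one such normalized step, under the assumption that 'k' is chosen as `y**2 / (w @ w)`. With that choice the update is orthogonal to `w`, so the norm is conserved to first order in the learning rate (not exactly). The function name, the learning rate, and the example vectors are all hypothetical.

```python
import numpy as np

def normalized_hebbian_step(w, x, eta=0.1):
    """One Hebbian step with a decay term k*w that keeps |w|^2 fixed to first order in eta."""
    y = w @ x                    # firing rate of the neuron
    k = y**2 / (w @ w)           # assumed choice of k: makes w . dw = 0
    dw = eta * (y * x - k * w)   # Hebbian growth minus weight decay
    return w + dw

def cosine(a, b):
    """Cosine of the angle between two vectors."""
    return (a @ b) / (np.linalg.norm(a) * np.linalg.norm(b))

w = np.array([1.0, 0.0])         # illustrative starting weights
x = np.array([1.0, 2.0])         # illustrative data point
w_new = normalized_hebbian_step(w, x)

print(cosine(w, x), cosine(w_new, x))            # alignment with x before and after
print(np.linalg.norm(w), np.linalg.norm(w_new))  # norm before and after
```

The cosine with 'x' increases after the step, while the norm changes only at second order in `eta`.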

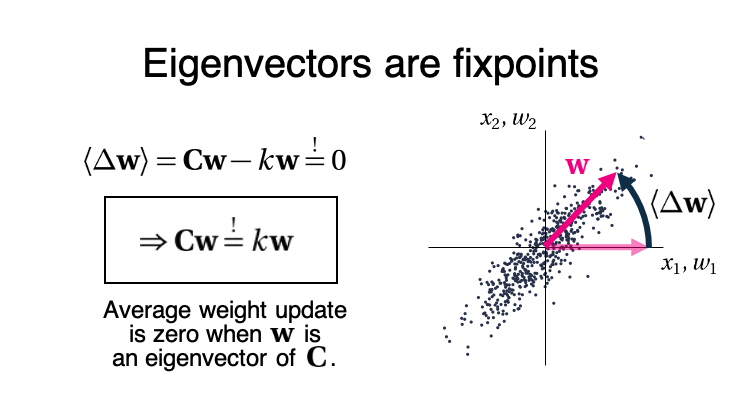

After repeating this update for multiple data points 'x', the weight vector settles into a fixed point, i.e., a vector for which the average change is zero. At that point the weight vector is equal to an eigenvector of the covariance matrix 'C', i.e., a principal component (the first one). (5/6)
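
The convergence can be checked numerically. The sketch below uses Oja's rule, i.e. the specific choice k = y², which pulls the norm of 'w' toward 1 rather than conserving it exactly. This is a swapped-in, well-known variant and not necessarily the exact rule in the figures. The covariance, learning rate, and sample count are made-up values.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical zero-mean inputs with a clear leading direction.
cov = np.array([[3.0, 1.0], [1.0, 1.0]])
X = rng.multivariate_normal(mean=[0.0, 0.0], cov=cov, size=20_000)

w = np.array([0.0, 1.0])   # start far away from the leading eigenvector
eta = 0.005                # small learning rate (assumed)

for x in X:
    y = w @ x                       # firing rate for this data point
    w = w + eta * y * (x - y * w)   # Oja's rule: Hebbian term minus y^2 * w

# Compare with the first principal component of the empirical covariance.
C = np.cov(X, rowvar=False)
eigvals, eigvecs = np.linalg.eigh(C)   # eigenvalues in ascending order
pc1 = eigvecs[:, -1]                   # eigenvector of the largest eigenvalue

alignment = abs(w @ pc1) / np.linalg.norm(w)   # |cosine| between w and pc1
print(alignment, np.linalg.norm(w))
```

The absolute value in `alignment` is needed because an eigenvector is only defined up to sign.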

Check out (Miller & MacKay, 1994) for all the details: https://www.mitpressjournals.org/doi/abs/10.1162/neco.1994.6.1.100 (6/6)

(7/6) Bonus: If we choose 'y' to be a non-linear function of the weighted sum, we can even do independent component analysis (ICA) (Hyvärinen & Oja, 1996):

http://papers.nips.cc/paper/1315-one-unit-learning-rules-for-independent-component-analysis.pdf

