OK, here starts a little tweetorial on everything you should know about 'Gamma Correction' and why it matters... https://twitter.com/loicaroyer/status/1284220315103449089

So why should you care? Well, if you already care about adjusting the brightness and contrast of your images, you should care about gamma correction, especially because it partly happens without your knowledge and often without your control. (2/n)

First, I would like to point out that I am not talking about gamma correction in the context of image analysis or quantification. I am talking about gamma correction as it pertains to how your images are reproduced on screen or on paper. (3/n)

That's important in microscopy because we often need to look at our images, show them, publish them, etc. Many of us have experienced the frustration of our images being washed out, too dark, etc. Some of us roughly know that this has to do with color spaces and gamma. (4/n)

Because gamma correction is a nonlinear operation, it scares some of us into thinking that it is not 'allowed'. Any mathematical operation between raw data and an image on paper can be misused. But let's not throw the baby out with the bathwater just yet. (5/n)

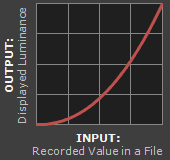

So what is gamma correction then? This article is a good introduction. For historical and technological reasons, most monitors and printers have a nonlinear response modeled as a power law. (6/n) https://www.cambridgeincolour.com/tutorials/gamma-correction.htm

That means that if you write your own PNG file and you make a linear ramp of values, your screen or printer will not give you back something linear. (7/n)

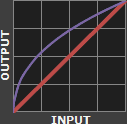

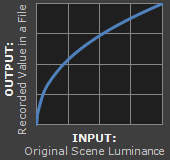

You know what is also not linear? Visual perception. Psychophysics tells us that brightness perception is nonlinear: our eyes can perceive more differences for darker shades. Our eyes' gamma is roughly 0.4. So the curve looks like below. (8/n)

Old-school CRT screens from yesteryear have a gamma of roughly 2.5 -- almost exactly the inverse of human perception. Your screen today probably has a gamma around 2.2.
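
A back-of-the-envelope way to see the cancellation, under the simplifying assumption that both the screen and perception are pure power laws (the symbols $V$ for pixel value, $L$ for emitted luminance and $B$ for perceived brightness are just labels introduced here):

$$
L = V^{\gamma_{\text{screen}}}, \qquad B \approx L^{\gamma_{\text{eye}}} \quad\Longrightarrow\quad B \approx V^{\gamma_{\text{screen}}\,\gamma_{\text{eye}}}
$$

With the rough values above, a CRT gives $2.5 \times 0.4 = 1.0$, so perceived brightness is about linear in pixel value, while a gamma of 2.2 gives $2.2 \times 0.4 = 0.88$, which is close to linear but not quite.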

However, it is not that simple... (9/n)

It gets worse: file formats such as JPEG also play gamma tricks. According to the sRGB standard, images are encoded with a gamma of roughly 1/2.2, which cancels out the gamma of a 'standard' sRGB screen or printer -- if such a thing truly exists.

(10/n)

http://www.cambridgeincolour.com/tutorials/gamma-correction.htm
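
Strictly speaking, the sRGB transfer curve is not a pure power law: it is a short linear segment near black followed by a power segment with exponent 1/2.4, and the two together come close to an overall gamma of 1/2.2. Here is a sketch of both directions using the standard sRGB constants; the function names `srgb_encode` and `srgb_decode` are only illustrative.

```python
def srgb_encode(linear):
    """Linear light (0..1) -> sRGB-encoded value (0..1)."""
    if linear <= 0.0031308:
        return 12.92 * linear                      # linear segment near black
    return 1.055 * linear ** (1 / 2.4) - 0.055     # power-law segment


def srgb_decode(encoded):
    """sRGB-encoded value (0..1) -> linear light (0..1)."""
    if encoded <= 0.04045:
        return encoded / 12.92
    return ((encoded + 0.055) / 1.055) ** 2.4


mid = 0.5
print("piecewise sRGB curve :", srgb_encode(mid))
print("pure 1/2.2 power law :", mid ** (1 / 2.2))
```

The two printed values differ only slightly, which is why a gamma of 1/2.2 is a reasonable shorthand for what sRGB does.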

At this point it should be clear that 'gamma correction' of one kind or another is happening to your images whether you like it or not. This is particularly important if you generate your own image files (with a Python or Java library) and don't pay attention to gamma.

(11/n)

So what does this mean? First, it means that changing gamma for the purpose of improving the reproduction of your images is entirely legit. If you generate your own files, make sure to apply a gamma between 0.45 and 0.55 to compensate for the nonlinearity of screens and printers.

(12/n)
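
As a minimal sketch of that pre-correction, assuming your data are linear intensities already normalized to 0..1 and you are writing 8-bit pixels (the helper `to_8bit` is hypothetical, and the default of 0.45 is just one value from the range above):

```python
def to_8bit(linear_values, gamma=0.45):
    """Map normalized linear intensities (0..1) to 8-bit pixel values,
    applying a gamma pre-correction on the way."""
    pixels = []
    for v in linear_values:
        v = min(max(v, 0.0), 1.0)            # clip to the valid range
        pixels.append(round(255 * v ** gamma))
    return pixels


linear_ramp = [i / 4 for i in range(5)]
print("no correction :", to_8bit(linear_ramp, gamma=1.0))
print("pre-corrected :", to_8bit(linear_ramp, gamma=0.45))
```

The pre-corrected mid-tones are stored as brighter pixel values, which is what lets a screen with a gamma around 2.2 bring them back to roughly where they belong. The resulting values can then be handed to whatever image-writing library you use.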

Second, it means that if you intend to visually compare images, you must of course use the same gamma for all of them, as well as the same brightness and contrast. The common gamma value can be set, within reason, so as to maximize the information rendered on paper or on screen.

(13/n)
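
One way to enforce this in practice is to define the display settings once and push every image through them. The sketch below is only an illustration: `render`, the `settings` values and the two tiny 'images' are all made up, and it assumes `vmax > vmin`.

```python
def render(image, vmin, vmax, gamma):
    """Apply one brightness/contrast window (vmin..vmax) and one gamma
    to a flat list of raw intensities; returns values in 0..1."""
    span = vmax - vmin                       # assumes vmax > vmin
    out = []
    for v in image:
        t = min(max((v - vmin) / span, 0.0), 1.0)   # window and clip
        out.append(t ** gamma)                       # common gamma
    return out


# Hypothetical raw intensities for two images to be compared side by side
control = [100, 400, 900]
treated = [100, 900, 1600]

# One set of display settings shared by all images in the comparison
settings = dict(vmin=100, vmax=1600, gamma=0.5)
control_view = render(control, **settings)
treated_view = render(treated, **settings)
print(control_view)
print(treated_view)
```

Because both images share the same settings, equal raw intensities end up as equal displayed values, so any visible difference comes from the data and not from the rendering.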

Third, if you are in the business of building microscopes and pushing resolution, for example, you cannot use gamma correction for quantifying resolution, but you can use it to obtain a high-fidelity reproduction of your images...

(14/n)

... as long as you stay within the range of inverse gamma values needed for pre-correcting the gamma of screens and printers. In the end, always disclose what you have done, even if for gamma it is sometimes futile given how little control you have over the end user's gamma.

(15/n)
