There's been a ton of major work over the last month on Zebra, the @ZcashFoundation's Zcash node, and I'm really excited about it! Some highlights in this thread:

@yaahc_ implemented a persistent state system, which acts as the source of truth on the current chain state. It's currently backed by `sled`, but it's presented to the rest of the node by a generic `tower::Service` interface that asynchronously maps state queries to responses.
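To make the "maps state queries to responses" shape concrete, here is a minimal synchronous sketch. The `Request`/`Response` variants and `StateService` are hypothetical, not the real zebra-state API. An in-memory `BTreeMap` stands in for `sled`. `call` mirrors the shape of `tower::Service::call` without implementing that trait; the real service returns a future.

```rust
use std::collections::BTreeMap;

type Hash = [u8; 32];

// Hypothetical request/response types, not the real zebra-state API.
#[derive(Debug, Clone, PartialEq)]
enum Request {
    AddBlock { height: u32, hash: Hash },
    GetHash { height: u32 },
    Tip,
}

#[derive(Debug, Clone, PartialEq)]
enum Response {
    Added,
    Hash(Option<Hash>),
    Tip(Option<(u32, Hash)>),
}

/// In-memory stand-in for the sled-backed store.
#[derive(Default)]
struct StateService {
    blocks: BTreeMap<u32, Hash>,
}

impl StateService {
    /// One request in, one response out, like `tower::Service::call`,
    /// but synchronous here; the real service returns a future.
    fn call(&mut self, req: Request) -> Response {
        match req {
            Request::AddBlock { height, hash } => {
                self.blocks.insert(height, hash);
                Response::Added
            }
            Request::GetHash { height } => Response::Hash(self.blocks.get(&height).copied()),
            Request::Tip => {
                // The highest stored height is the chain tip.
                Response::Tip(self.blocks.iter().next_back().map(|(h, hash)| (*h, *hash)))
            }
        }
    }
}

fn main() {
    let mut state = StateService::default();
    state.call(Request::AddBlock { height: 0, hash: [0; 32] });
    println!("{:?}", state.call(Request::Tip));
}
```

Because callers only see `Request` in and `Response` out, swapping `sled` for another backend would not change any call sites.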

Because the node state is the source of truth, it should only ever contain verified data. This way we have a clear validation boundary (ingesting new state data), rather than scattering consensus checks throughout the code.
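One way to make that boundary hard to bypass is to enforce it in the type system. This is a hypothetical sketch, not Zebra's code. `Block`, `VerifiedBlock`, `verify` and `State` are made-up names, and the parent-hash check is a placeholder for the real consensus rules. `VerifiedBlock` can only be constructed inside the `verification` module, so `State::commit` cannot be handed unverified data.

```rust
mod verification {
    #[derive(Debug, Clone, PartialEq)]
    pub struct Block {
        pub height: u32,
        pub hash: u64,
        pub prev_hash: u64,
    }

    /// The tuple field is private, so only code in this module can construct one.
    #[derive(Debug, Clone, PartialEq)]
    pub struct VerifiedBlock(Block);

    impl VerifiedBlock {
        pub fn block(&self) -> &Block {
            &self.0
        }
    }

    /// Placeholder for the real consensus checks: only tests the parent link.
    pub fn verify(block: Block, tip_hash: u64) -> Result<VerifiedBlock, String> {
        if block.prev_hash == tip_hash {
            Ok(VerifiedBlock(block))
        } else {
            Err(format!("block at height {} does not extend the tip", block.height))
        }
    }
}

use verification::{verify, Block, VerifiedBlock};

/// The state only accepts `VerifiedBlock`, so every write crosses the boundary.
#[derive(Default)]
struct State {
    chain: Vec<VerifiedBlock>,
}

impl State {
    fn tip_hash(&self) -> u64 {
        // 0 plays the role of "no parent" for the first block in this sketch.
        self.chain.last().map_or(0, |b| b.block().hash)
    }

    fn commit(&mut self, block: VerifiedBlock) {
        self.chain.push(block);
    }
}

fn main() {
    let mut state = State::default();
    match verify(Block { height: 0, hash: 10, prev_hash: 0 }, state.tip_hash()) {
        Ok(verified) => state.commit(verified),
        Err(e) => eprintln!("rejected: {}", e),
    }
    println!("tip hash: {}", state.tip_hash());
}
```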

But requiring the state to contain only verified data poses a problem, because we plan to checkpoint on Sapling activation rather than take a dependency on libsnark (which would be needed to verify pre-Sapling proofs). And checkpoint verification requires having a complete chain of blocks up to the checkpoint hash.

We could do a one-off hack, but because we're programming asynchronously, there's a much more elegant solution using futures, implemented by @twbtwb.

Instead of hardcoding a single checkpoint hash, we have a `CheckpointVerifier`, a `tower::Service` that asynchronously maps blocks to verification results. Internally, it stores a list of `(height, hash)` checkpoints in a `BTreeMap`.
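A sketch of the lookup a sorted map makes cheap: given the height of the last verified checkpoint, find the next one with a range query. `CheckpointList`, `next_after` and `max_height` are hypothetical names, not the real `CheckpointVerifier` API.

```rust
use std::collections::BTreeMap;
use std::ops::Bound::{Excluded, Unbounded};

type Hash = [u8; 32];

/// Hypothetical checkpoint list: height -> expected block hash.
struct CheckpointList(BTreeMap<u32, Hash>);

impl CheckpointList {
    /// The first checkpoint strictly above `height`, via an O(log n) range query.
    fn next_after(&self, height: u32) -> Option<(u32, Hash)> {
        self.0
            .range((Excluded(height), Unbounded))
            .next()
            .map(|(h, hash)| (*h, *hash))
    }

    /// The height of the highest checkpoint in the list.
    fn max_height(&self) -> Option<u32> {
        self.0.keys().next_back().copied()
    }
}

fn main() {
    let list = CheckpointList(vec![(0, [0; 32]), (400, [4; 32])].into_iter().collect());
    println!("{:?}", list.next_after(0).map(|(h, _)| h));
}
```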

When a purported block comes into the verifier, its verification does not resolve right away. Instead, the verifier constructs a oneshot channel, stores the sender end with the block in a queue, and returns the receiver end to the caller.

https://docs.rs/tokio/0.2.21/tokio/sync/oneshot/index.html
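A minimal sketch of that queueing step. It assumes `std::sync::mpsc::sync_channel(1)` as a dependency-free stand-in for `tokio::sync::oneshot`, and `Block`, `Queue` and `submit` are hypothetical. Blocks are grouped by height because several candidate blocks (forks) can arrive for the same height.

```rust
use std::collections::BTreeMap;
use std::sync::mpsc::{sync_channel, Receiver, SyncSender};

type Hash = u64;

#[derive(Debug, Clone)]
struct Block {
    height: u32,
    hash: Hash,
    prev_hash: Hash,
}

type VerifyResult = Result<Hash, String>;

/// Queued blocks keyed by height. Each entry keeps the sender half of a
/// one-shot-style channel so the result can be delivered later.
#[derive(Default)]
struct Queue {
    pending: BTreeMap<u32, Vec<(Block, SyncSender<VerifyResult>)>>,
}

impl Queue {
    /// Store the block and return the receiver immediately; nothing is verified yet.
    fn submit(&mut self, block: Block) -> Receiver<VerifyResult> {
        let (tx, rx) = sync_channel(1); // capacity 1: a single result per block
        self.pending.entry(block.height).or_default().push((block, tx));
        rx
    }
}

fn main() {
    let mut queue = Queue::default();
    let rx = queue.submit(Block { height: 5, hash: 55, prev_hash: 44 });
    // Still waiting: the sender is parked in the queue.
    println!("{:?}", rx.try_recv());
}
```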

Then it checks whether there's an unbroken chain of queued blocks from the previously verified checkpoint up to a subsequent checkpoint. If not, nothing happens, and earlier callers continue to wait.

When enough blocks arrive to form an unbroken chain, it walks backward along the chain from the new checkpoint to the previous one, verifying all the hashes. If this segment of the chain matches the checkpoint, it uses the saved oneshot senders to deliver the verification results to the waiting callers.
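The backward walk can be sketched as follows. This assumes queued blocks are indexed by their hash, computed locally rather than claimed by a peer. Starting from the checkpoint hash anchors every step to a trusted value: the checkpoint fixes its block, that block's `prev_hash` fixes its parent, and so on down to the previous checkpoint. `verify_range` and the `Block` fields are hypothetical.

```rust
use std::collections::HashMap;

type Hash = u64;

#[derive(Debug, Clone)]
struct Block {
    height: u32,
    hash: Hash, // assumed to be computed from the header, not claimed by a peer
    prev_hash: Hash,
}

/// Walk backward from the next checkpoint to the previous one along
/// `prev_hash` links. Returns the hashes in ascending height order, or
/// `None` on a gap or mismatch (a real verifier would distinguish the two).
fn verify_range(
    queued: &HashMap<Hash, Block>,
    prev_checkpoint: (u32, Hash),
    next_checkpoint: (u32, Hash),
) -> Option<Vec<Hash>> {
    let (mut height, mut cursor) = next_checkpoint;
    let mut path = Vec::new();
    while height > prev_checkpoint.0 {
        let block = queued.get(&cursor)?; // gap: callers keep waiting
        if block.height != height {
            return None;
        }
        path.push(block.hash);
        cursor = block.prev_hash;
        height -= 1;
    }
    // The walk must land exactly on the previously verified checkpoint.
    if cursor != prev_checkpoint.1 {
        return None;
    }
    path.reverse();
    Some(path)
}

fn main() {
    let mut queued = HashMap::new();
    for (height, hash, prev_hash) in vec![(1u32, 11u64, 10u64), (2, 12, 11), (3, 13, 12)] {
        queued.insert(hash, Block { height, hash, prev_hash });
    }
    println!("{:?}", verify_range(&queued, (0, 10), (3, 13)));
}
```

On success, each hash in the returned path identifies a queued block whose saved sender can now be resolved.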

This is really cool for a couple of reasons!

First, it means that blocks can arrive out-of-order but still be verified in order, which means that we can download blocks in parallel. This fits really well with our network stack, which lets us load-balance requests over hundreds of peers: https://twitter.com/hdevalence/status/1222295809380667392

Second, having an extensible checkpointing mechanism rather than a single hardcoded checkpoint means that in addition to the mandatory Sapling checkpoint, we could optionally have a fast-sync method that checkpoints all the way up to a few thousand blocks prior to each release.

Together, these set us up for very fast initial chain syncs, since the bulk of the work becomes the block downloads themselves. In some earlier scratch testing of the network stack, it sustained download rates above 100 MB/s: https://twitter.com/hdevalence/status/1226658659213561857

But there's one more thing we need to do to make this work: the node has to actually figure out what blocks to download. And here there's another obstacle.

Bitcoin (and Zcash) nodes determine what blocks to download roughly as follows: the node picks out some hashes along its current chain, starting with its current chain tip, and asks peers whether they know further hashes or chain forks.
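Those hashes form a *block locator*. The sketch below computes which heights go into one, following the usual Bitcoin-style shape: dense near the tip, exponentially sparser further back, and always ending at genesis. The cutoff of 10 dense entries is an illustrative choice, not necessarily the exact rule `zcashd` uses, and `locator_heights` is a hypothetical name.

```rust
/// Heights to include in a block locator: the tip and the 9 heights below it,
/// then doubling gaps, always ending at genesis.
fn locator_heights(tip: u32) -> Vec<u32> {
    let mut heights = Vec::new();
    let mut height = tip;
    let mut step = 1u32;
    loop {
        heights.push(height);
        if height == 0 {
            break; // genesis is always the last entry
        }
        if heights.len() >= 10 {
            step = step.saturating_mul(2);
        }
        height = height.saturating_sub(step);
    }
    heights
}

fn main() {
    // A node would look up the hash at each of these heights and send them to peers.
    println!("{:?}", locator_heights(20));
}
```

Note that the locator is built from the *verified* chain, so it says nothing about blocks still waiting for verification.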

This works when you download blocks and sequentially verify them one at a time, but in our case, we have our verified chain tip, and *then* a potentially large number of blocks pending verification.

So instead, we designed an algorithm (implemented by @yaahc_) for pipelineable block lookup, which chases down prospective chain tips ahead of verification and handles forks.
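The thread doesn't spell out the algorithm, so what follows is only a hypothetical sketch of the general idea, not Zebra's implementation. Peer responses that attach to any known hash, including unverified ones still in the download queue, extend a *prospective* tip, so the node can keep asking "what comes next?" without waiting for verification. `TipChaser` and `extend` are made-up names.

```rust
use std::collections::{HashSet, VecDeque};

type Hash = u64;

/// Hypothetical sync bookkeeping, not Zebra's actual types.
#[derive(Default)]
struct TipChaser {
    known: HashSet<Hash>,           // verified, queued, or already requested
    download_queue: VecDeque<Hash>, // blocks to fetch, in chain order
    prospective_tips: Vec<Hash>,    // tips to ask peers about next
}

impl TipChaser {
    /// Handle one peer response listing consecutive block hashes. It must start
    /// from a hash we already know, so it attaches to our (prospective) chain.
    fn extend(&mut self, response: &[Hash]) {
        let first_new = match response.iter().position(|h| !self.known.contains(h)) {
            Some(i) if i > 0 => i,
            _ => return, // nothing new, or not anchored to anything we know
        };
        // The anchor stops being a tip once it is extended; a fork from the
        // same anchor simply adds a second prospective tip.
        let anchor = response[first_new - 1];
        self.prospective_tips.retain(|&t| t != anchor);
        for &hash in &response[first_new..] {
            if self.known.insert(hash) {
                self.download_queue.push_back(hash);
            }
        }
        if let Some(&tip) = response.last() {
            self.prospective_tips.push(tip);
        }
    }
}

fn main() {
    let mut chaser = TipChaser::default();
    chaser.known.insert(1); // our verified tip
    chaser.extend(&[1, 2, 3, 4]);
    chaser.extend(&[4, 5, 6]); // chase from 4 before blocks 2..=4 are verified
    println!("queue: {:?}, tips: {:?}", chaser.download_queue, chaser.prospective_tips);
}
```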

This could open a DoS vector: a peer feeding us garbage hashes could lead us into downloading a bunch of off-chain blocks.

But again, our network design makes this easy to handle: requests are randomly load-balanced over potentially hundreds of peers, so if one bad peer feeds us bad hashes, we'll try to download them from unrelated peers, and those requests will probably fail. https://twitter.com/hdevalence/status/1229912362955620352
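The sketch below illustrates why a bad peer's hashes tend to be fetched from someone else: each request goes to a randomly sampled peer. It uses power-of-two-choices sampling (the strategy behind `tower::balance::p2c`) with a tiny non-cryptographic xorshift PRNG so it needs no crates. `pick_peer` and `XorShift` are hypothetical, and whether Zebra's peer set picks peers exactly this way is an assumption here.

```rust
/// Tiny xorshift64 PRNG so the sketch needs no crates. Not cryptographic;
/// the seed must be nonzero.
struct XorShift(u64);

impl XorShift {
    fn next_u64(&mut self) -> u64 {
        let mut x = self.0;
        x ^= x << 13;
        x ^= x >> 7;
        x ^= x << 17;
        self.0 = x;
        x
    }

    /// Roughly uniform index in 0..n (modulo bias is fine for a sketch).
    fn below(&mut self, n: usize) -> usize {
        (self.next_u64() % n as u64) as usize
    }
}

/// Power of two choices: sample two distinct peers at random and route the
/// request to whichever has fewer requests in flight.
fn pick_peer(in_flight: &[usize], rng: &mut XorShift) -> usize {
    assert!(!in_flight.is_empty(), "no ready peers");
    let n = in_flight.len();
    if n == 1 {
        return 0;
    }
    let a = rng.below(n);
    let b = (a + 1 + rng.below(n - 1)) % n; // any index except `a`
    if in_flight[a] <= in_flight[b] { a } else { b }
}

fn main() {
    let mut rng = XorShift(0x9E37_79B9_7F4A_7C15);
    let in_flight = vec![3, 0, 1, 2];
    let picks: Vec<usize> = (0..8).map(|_| pick_peer(&in_flight, &mut rng)).collect();
    println!("{:?}", picks);
}
```

With hundreds of peers, the peer asked for a given block is almost never the one that advertised its hash.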

A nice way to think about all of this is as out-of-order execution of the verification logic (using futures to express semantic dependencies), plus a bunch of infrastructure to keep the pipeline that feeds it full.

In the discussion above, our out-of-order verification is just checkpointing. But when we do verification for real, we have all kinds of work to do (verifying signatures, zk-SNARK proofs, etc.), and we want to do all of that work as efficiently as possible too.

One thing we'd like to do is pervasive batch verification, but conventional batch verification APIs are awkward to use. Back in January, I wrote up a sketch of how asynchronous programming could be a good model for batch processing: https://twitter.com/hdevalence/status/1223434261891448832

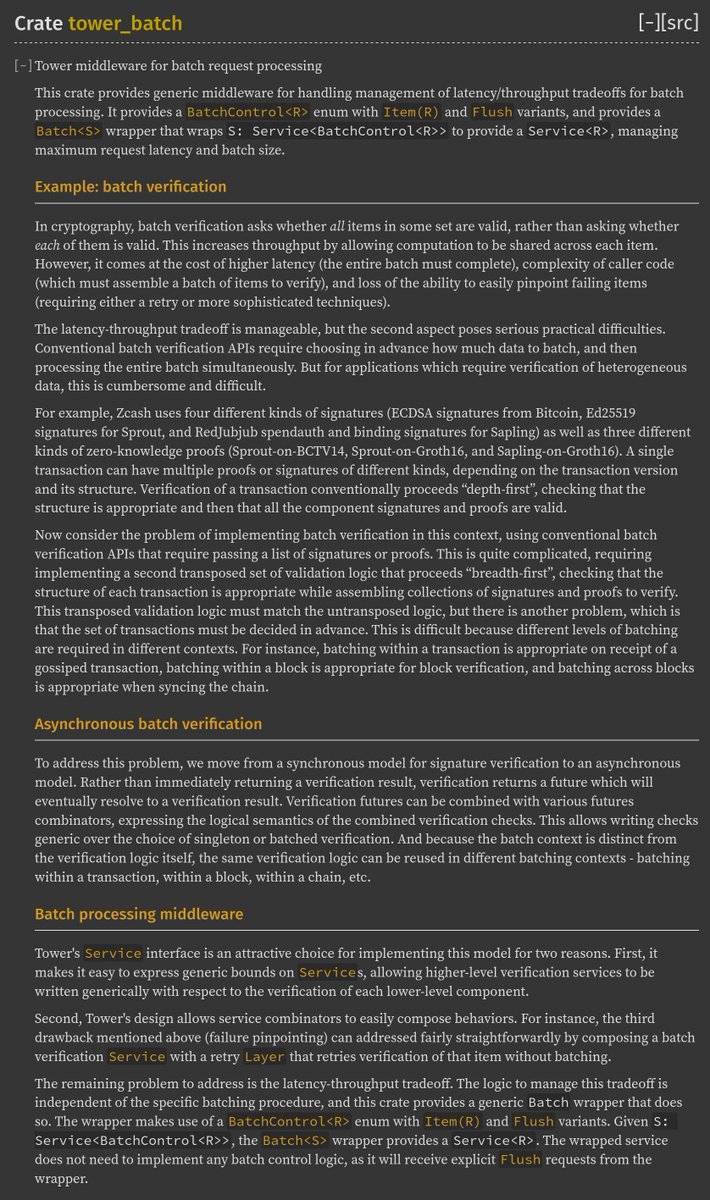

Doing batch processing in practice requires managing latency/throughput tradeoffs, and this logic is essentially similar regardless of what processing is being done. Using Tower, I was able to spin it out into some generic batch-processing middleware: https://doc.zebra.zfnd.org/tower_batch/index.html

@durumcrustulum implemented batch verification in the `redjubjub` crate for the spend authorization and binding signatures used in Zcash, then wrote an async wrapper that lets callers receive verification results asynchronously.

This works similarly to the checkpoint verifier: callers get the receiver end of a oneshot channel, and the verifier sends out verification results when the batch is complete.
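A minimal sketch of that pattern: callers queue an item and get a receiver back, and nothing resolves until `flush`. `BatchVerifier`, `Item` and its `valid` flag are hypothetical stand-ins; the flag replaces an actual signature check. `std::sync::mpsc` stands in for the oneshot channel. Falling back to per-item checks when the batch fails is the usual batch-verification approach, not a description of the `redjubjub` code.

```rust
use std::sync::mpsc::{sync_channel, Receiver, SyncSender};

/// Hypothetical queued item. `valid` stands in for "this signature would
/// verify"; a real item would hold a key, signature and message.
#[derive(Debug, Clone)]
struct Item {
    valid: bool,
}

/// Collects items; each caller holds a receiver that resolves at flush time.
#[derive(Default)]
struct BatchVerifier {
    items: Vec<Item>,
    senders: Vec<SyncSender<bool>>,
}

impl BatchVerifier {
    fn queue(&mut self, item: Item) -> Receiver<bool> {
        let (tx, rx) = sync_channel(1);
        self.items.push(item);
        self.senders.push(tx);
        rx
    }

    /// Verify everything queued so far and send each caller its own result.
    fn flush(&mut self) {
        let items = std::mem::take(&mut self.items);
        let senders = std::mem::take(&mut self.senders);
        // Placeholder for the single combined batch check.
        let batch_ok = items.iter().all(|item| item.valid);
        for (item, tx) in items.iter().zip(senders) {
            // If the batch fails, fall back to checking items one by one to
            // find out which ones are actually invalid.
            let ok = batch_ok || item.valid;
            let _ = tx.send(ok); // the caller may have stopped waiting
        }
    }
}

fn main() {
    let mut verifier = BatchVerifier::default();
    let first = verifier.queue(Item { valid: true });
    let second = verifier.queue(Item { valid: false });
    verifier.flush();
    println!("{:?} {:?}", first.recv(), second.recv());
}
```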

This gets wrapped in a `tower-batch` wrapper that uses a request latency bound and a batch size bound to control when to flush the batch. The result is a sharable verification service that automatically batches contemporaneous signature verifications.
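The flush decision itself is small. Here is a sketch with hypothetical `FlushPolicy` and `PendingBatch` types: flush when the batch reaches `max_items`, or when the oldest queued request has waited at least `max_latency`. It is an illustration of the two bounds, not `tower-batch`'s internals.

```rust
use std::time::{Duration, Instant};

/// Hypothetical flush policy with a size bound and a latency bound.
struct FlushPolicy {
    max_items: usize,      // throughput knob: how much work to amortize per batch
    max_latency: Duration, // latency knob: how long any request may wait
}

/// What the batcher knows about its current, unflushed batch.
struct PendingBatch {
    len: usize,
    oldest: Option<Instant>, // arrival time of the first queued request
}

impl FlushPolicy {
    fn should_flush(&self, batch: &PendingBatch, now: Instant) -> bool {
        if batch.len >= self.max_items {
            return true; // batch is full
        }
        match batch.oldest {
            // Flush a partial batch once its oldest request has waited long enough.
            Some(arrived) => now.duration_since(arrived) >= self.max_latency,
            None => false, // nothing queued
        }
    }
}

fn main() {
    let policy = FlushPolicy { max_items: 64, max_latency: Duration::from_millis(10) };
    let start = Instant::now();
    let batch = PendingBatch { len: 3, oldest: Some(start) };
    println!("{}", policy.should_flush(&batch, start + Duration::from_millis(15)));
}
```

A larger `max_items` amortizes more work per batch under load, while `max_latency` bounds how long a lone request waits when traffic is light.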

Passing this service around as part of the verification context means that we can have one codepath and one set of verification logic that automatically batches signature verification between transactions, between blocks, etc., depending on what other work is available.

And because the middleware is generic, this isn't limited to signature verification: it can be applied to all the other batchable work we have to do (most importantly, zk-SNARK proofs).