Thought I'd take a leaf out of @ProfMattFox's book and use some 2x2 tables to illustrate this https://twitter.com/GidMK/status/1278468849294168064

So, here's our table. We've got positive and negative results for our test compared with the truth

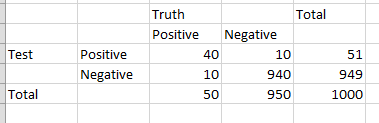

Here, I've plugged in the numbers for a prevalence of 5% (i.e. 5% of people have had COVID-19)

Now, we know that sensitivity is 80.9% and specificity is 98.9%. Plugging those in, we get this table

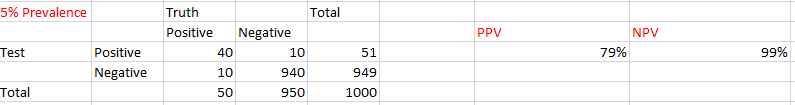
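Each cell of the table is just prevalence, sensitivity and specificity multiplied together. Here's a minimal sketch that fills the cells in. It assumes a hypothetical population of 1,000 people; the thread doesn't say what total the tables use.

```python
def two_by_two(prevalence, sensitivity, specificity, population=1000):
    """Expected cell counts of a 2x2 diagnostic table.

    `population` is a hypothetical total chosen for illustration.
    """
    diseased = prevalence * population      # people who have had COVID-19
    healthy = population - diseased         # people who haven't
    true_pos = sensitivity * diseased       # had it, test positive
    false_neg = diseased - true_pos         # had it, test negative
    true_neg = specificity * healthy        # never had it, test negative
    false_pos = healthy - true_neg          # never had it, test positive
    return {"TP": true_pos, "FP": false_pos, "FN": false_neg, "TN": true_neg}


table = two_by_two(prevalence=0.05, sensitivity=0.809, specificity=0.989)
for cell, count in table.items():
    print(f"{cell}: {count:.2f}")
```

With these inputs, about 40 of the 50 people who've had COVID-19 test positive, and about 10 of the 950 who haven't also test positive.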

From this, we can work out two numbers

Positive Predictive Value (PPV) = the likelihood that someone with a positive test has actually had COVID-19

Negative Predictive Value (NPV) = the likelihood that someone with a negative test has actually not had COVID-19
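Written out using the standard definitions (not spelled out in the thread itself), in terms of the table cells (true positives TP, false positives FP, false negatives FN, true negatives TN), and equivalently via Bayes' theorem with prevalence p, sensitivity Se and specificity Sp:

```latex
\text{PPV} = \frac{TP}{TP + FP}
           = \frac{\text{Se}\,p}{\text{Se}\,p + (1-\text{Sp})(1-p)}
\qquad
\text{NPV} = \frac{TN}{TN + FN}
           = \frac{\text{Sp}\,(1-p)}{\text{Sp}\,(1-p) + (1-\text{Se})\,p}
```

Prevalence p appears in both expressions, so the predictive values shift with the population being tested even though Se and Sp stay fixed.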

Here's what that looks like for a population prevalence of 5%

Of the people who test positive, only 79% have actually had COVID-19. Of those who test negative, 99% have never had it

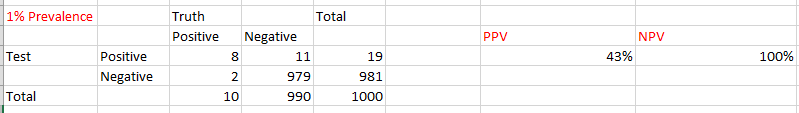

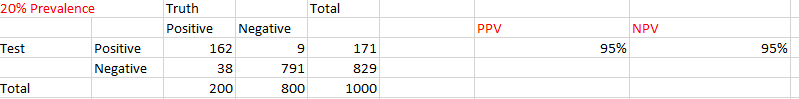
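To see where 79% and 99% come from, here's a small sketch that redoes the arithmetic from the expected cell counts. It assumes a hypothetical 1,000 people, so 50 have had COVID-19 and 950 haven't.

```python
# Expected counts for a hypothetical 1,000 people at 5% prevalence,
# with sensitivity 80.9% and specificity 98.9%
tp, fp = 0.809 * 50, (1 - 0.989) * 950   # people who test positive
fn, tn = (1 - 0.809) * 50, 0.989 * 950   # people who test negative

ppv = tp / (tp + fp)   # share of positives who have had COVID-19
npv = tn / (tn + fn)   # share of negatives who haven't

print(f"PPV = {ppv:.0%}, NPV = {npv:.0%}")
```

Roughly 40 true positives sit alongside roughly 10 false positives, which is why about one positive in five is wrong.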

But if we vary the prevalence, the PPV and NPV change a lot!

At 1%, PPV = 43%, NPV = 100%

At 20%, PPV = 95%, NPV = 95%
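A minimal sketch of that sweep, using Bayes' theorem with the sensitivity and specificity quoted above as defaults:

```python
def predictive_values(prevalence, sensitivity=0.809, specificity=0.989):
    """PPV and NPV via Bayes' theorem (defaults: the test in this thread)."""
    tp = sensitivity * prevalence                 # true positives (share)
    fp = (1 - specificity) * (1 - prevalence)     # false positives (share)
    tn = specificity * (1 - prevalence)           # true negatives (share)
    fn = (1 - sensitivity) * prevalence           # false negatives (share)
    return tp / (tp + fp), tn / (tn + fn)


for prevalence in (0.01, 0.05, 0.20):
    ppv, npv = predictive_values(prevalence)
    print(f"prevalence {prevalence:.0%}: PPV = {ppv:.1%}, NPV = {npv:.1%}")
```

At 1% the NPV comes out at about 99.8%, which rounds to the 100% quoted above.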

What this means is that if you run the test in a population where very few people have had the disease, MOST of your positive tests will be false positives

So your prevalence estimate might be roughly double the true one (or more)
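A quick way to check this: the share of people who test positive is true positives plus false positives, p·Se + (1 - p)(1 - Sp). Here's a minimal sketch using the thread's sensitivity and specificity. The 0.5% case is an extra illustrative value, not one from the thread.

```python
def apparent_prevalence(true_prevalence, sensitivity=0.809, specificity=0.989):
    """Expected share of people testing positive (true + false positives)."""
    return sensitivity * true_prevalence + (1 - specificity) * (1 - true_prevalence)


for true_p in (0.005, 0.01):
    seen = apparent_prevalence(true_p)
    print(f"true {true_p:.1%} -> measured {seen:.2%} ({seen / true_p:.1f}x)")
```

At 1% true prevalence about 1.9% test positive, and at 0.5% the measured figure is about three times the truth.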

If instead you run the test in a population where many people have had COVID-19, you'll underestimate the prevalence by 10% or more of its true value
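The same formula shows the underestimate at high prevalence. In this sketch, 50% is an extra illustrative value not taken from the thread. The last lines solve Se + (1 - Sp)(1 - p)/p = 0.9 for p to find where the relative shortfall reaches exactly 10%, assuming the same sensitivity and specificity.

```python
def apparent_prevalence(true_prevalence, sensitivity=0.809, specificity=0.989):
    """Expected share testing positive: true positives plus false positives."""
    return sensitivity * true_prevalence + (1 - specificity) * (1 - true_prevalence)


for true_p in (0.20, 0.50):
    seen = apparent_prevalence(true_p)
    shortfall = 1 - seen / true_p          # relative underestimate
    print(f"true {true_p:.0%} -> measured {seen:.1%} ({shortfall:.0%} too low)")

# Prevalence at which measured / true = 0.9 (a 10% relative shortfall);
# above this, false negatives outweigh false positives by more
se, sp = 0.809, 0.989
threshold = (1 - sp) / ((0.9 - se) + (1 - sp))
print(f"shortfall reaches 10% at a true prevalence of {threshold:.1%}")
```

At 20% true prevalence only about 17% test positive (roughly 15% too low), and the 10% shortfall kicks in from a true prevalence of about 11%.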

Neither of these is a great scenario
