Super interesting paper by @ccullen_1, now out as a @wb_research WP [working paper], for all those #VAW #GBV measurement geeks!

Compares intimate partner & non-partner sexual violence (SV) measures in #Nigeria & #Rwanda across three methods:

face-to-face

audio CASI [audio computer-assisted self-interviewing]

list randomization

@SVRI @UniofOxford https://openknowledge.worldbank.org/handle/10986/33876


We know #IPV is widely under-reported, not just to formal sources but also informally [to neighbors, friends, family ... interviewers]

A study of nationally representative data from 24 countries shows only 40% of women who experienced violence had previously disclosed it to anyone (@TiaPalermo):

2/n https://academic.oup.com/aje/article/179/5/602/143069


This means women may be telling someone about #IPV for the very first time in a survey & understandably many will not report it

Not just due to social desirability bias, but also shame/embarrassment, stigma, fear of repercussions ...

3/n


This paper is a neat new take allowing comparison of 2 methods aimed at reducing under-reporting

Overall takeaway: the list elicits more reporting than audio-CASI & face-to-face, by up to 100% in #Rwanda & up to 39% in #Nigeria [audio-CASI > face-to-face, but the difference is often insignificant]

4/n


Other studies have also used the list method for #IPV, but this is the first I've seen that compares several methods & also administers the list to *men* [in #Rwanda]

@TiaPalermo & I talk about some of the previous efforts in this @UNICEFInnocenti blog 5/n: https://blogs.unicef.org/evidence-for-action/measuring-taboo-topics-list-randomization-for-research-on-gender-based-violence/


Direct comparisons between the three methods also show that differences vary by country & by type of violence

This is super interesting & @ccullen_1 posits this may be due to differences in acceptance of violence typologies in each setting [& how much stigma is attached]

6/n


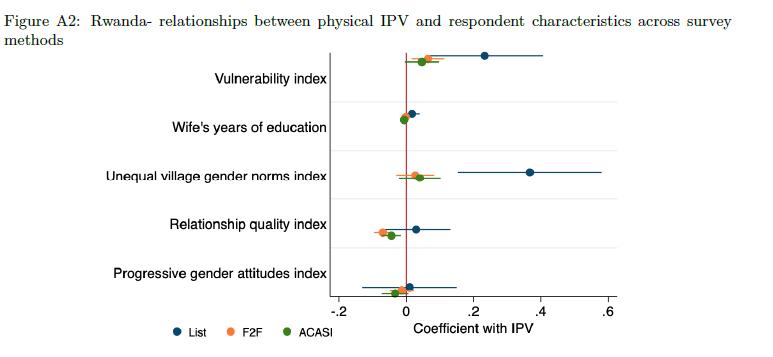

A further analysis by background characteristics shows some groups of vulnerable women are more likely to under-report; however, my takeaway is that this is selective [many tests are run here & most show no differences]

7/n
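Why a few significant differences among many tests are weak evidence: if there is truly no difference, each test at α = 0.05 still has a 5% chance of a false positive, so across m independent tests the chance of at least one is 1 - (1 - α)^m. A minimal sketch; the helper names and the number of tests are hypothetical (not the paper's count), and real subgroup tests are rarely independent.

```python
def familywise_error_rate(m: int, alpha: float = 0.05) -> float:
    """Chance of at least one false positive across m independent tests
    when every null hypothesis is true."""
    return 1 - (1 - alpha) ** m


def bonferroni_threshold(m: int, alpha: float = 0.05) -> float:
    """Per-test p-value cutoff that keeps the familywise error rate <= alpha
    (holds whether or not the tests are independent)."""
    return alpha / m


m = 20  # hypothetical number of subgroup comparisons
print(f"P(>=1 false positive): {familywise_error_rate(m):.2f}")  # 0.64
print(f"Bonferroni cutoff:     {bonferroni_threshold(m):.4f}")   # 0.0025
```

So with 20 comparisons, finding one or two "significant" subgroups is roughly what chance alone would produce.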


However, this *is* very important in the context of an impact evaluation (IE) if a program is specifically trying to change vulnerability factors, e.g. empowering women or changing gender norms

This is when you'd worry that the program itself may change under-reporting & lead to wonky [biased] impact estimates

8/n
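A back-of-the-envelope sketch of how this can go wrong, using purely hypothetical numbers (not from the paper) and assuming reported prevalence = true prevalence × disclosure rate (no false reports). If the program lowers true IPV but also makes women more willing to disclose, a naive treatment-control comparison of *reported* IPV can even get the sign wrong.

```python
def reported_prevalence(true_prevalence: float, disclosure_rate: float) -> float:
    """Expected share reporting IPV, assuming only true cases report and each
    discloses with the given probability (no false positives)."""
    return true_prevalence * disclosure_rate


# Hypothetical values, chosen only for illustration.
control_true, control_disclose = 0.30, 0.50  # comparison group
treat_true, treat_disclose = 0.24, 0.70      # IPV falls 20%, but disclosure rises

true_effect = treat_true - control_true
naive_effect = (reported_prevalence(treat_true, treat_disclose)
                - reported_prevalence(control_true, control_disclose))

print(f"true effect:  {true_effect:+.3f}")   # true effect:  -0.060
print(f"naive effect: {naive_effect:+.3f}")  # naive effect: +0.018
```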


List randomization appears to reduce under-reporting the most ... what's the catch?

For one, potentially large efficiency losses: standard errors on list estimates are quite large compared to other methods
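A minimal sketch of where the efficiency loss comes from, assuming the standard list design: the control group sees J non-sensitive items, the treatment group sees the same J items plus the sensitive one, and prevalence is the difference in mean counts. Its standard error carries the variance of the non-sensitive counts, while a direct yes/no question only has binomial variance. The data and helper names (`list_estimate`, `direct_estimate`) are hypothetical, and the paper's own estimator may differ (e.g. by adding covariates).

```python
import math
from statistics import mean, variance


def list_estimate(control_counts, treatment_counts):
    """Difference-in-means list estimator and its unequal-variance standard error."""
    est = mean(treatment_counts) - mean(control_counts)
    se = math.sqrt(variance(treatment_counts) / len(treatment_counts)
                   + variance(control_counts) / len(control_counts))
    return est, se


def direct_estimate(answers):
    """Share answering 'yes' (1) to a direct question, with its binomial SE."""
    p = mean(answers)
    return p, math.sqrt(p * (1 - p) / len(answers))


# Hypothetical data: 200 respondents per list arm, 400 asked directly.
control = [0, 1, 2, 3] * 50                           # counts over J = 3 items
treatment = [1, 1, 2, 3] * 25 + [0, 1, 2, 3] * 25     # same items + sensitive one
direct = [1] * 40 + [0] * 360                         # 10% say "yes" directly

est, se = list_estimate(control, treatment)
p, se_direct = direct_estimate(direct)
print(f"list:   {est:.3f} (SE {se:.3f})")
print(f"direct: {p:.3f} (SE {se_direct:.3f})")
```

With these made-up numbers the list SE is several times the direct-question SE, even though the list uses the same total sample size.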

Double lists can help, but they increase the time needed to implement the lists

9/n
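A minimal sketch of the double list idea, with a hypothetical helper name and made-up data: respondents are split into two groups and each answers *two* lists, acting as the treatment group for one list and the control group for the other (hence the extra interview time). The two difference-in-means estimates are then averaged.

```python
from statistics import mean


def double_list_estimate(g1_list_a, g1_list_b, g2_list_a, g2_list_b):
    """Double list design:
    group 1 sees list A *with* the sensitive item and list B without it;
    group 2 sees list A without it and list B *with* it.
    Each list gives a difference-in-means estimate; the two are averaged."""
    est_a = mean(g1_list_a) - mean(g2_list_a)  # list A: group 1 is "treatment"
    est_b = mean(g2_list_b) - mean(g1_list_b)  # list B: group 2 is "treatment"
    return (est_a + est_b) / 2


# Hypothetical item counts for four respondents per group.
print(double_list_estimate(
    g1_list_a=[1, 2, 1, 2], g1_list_b=[1, 0, 0, 1],
    g2_list_a=[1, 2, 1, 1], g2_list_b=[1, 1, 0, 1],
))  # 0.25
```

Every respondent contributes to the sensitive-item estimate, and the precision gain is largest when respondents' counts on the two non-sensitive lists are positively correlated.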


Another drawback is open questions around the design/validity of lists: in this paper, the sexual violence list measures in #Rwanda do not pass standard tests for design effects [respondents may have changed their answers to the other items because the sensitive statement was included]

10/n
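A sketch of the logic behind one widely used design-effect check (Blair & Imai, 2012); I'm not certain it is the exact test used in the paper, and the helper names and data are hypothetical. Under no design effect and truthful answers, the share of respondents with y "yes" answers on the J non-sensitive items who do (z = 1) or don't (z = 0) hold the sensitive trait can be recovered from the two arms' cumulative distributions. A clearly negative estimate is impossible under those assumptions; the formal test also accounts for sampling noise (with a Bonferroni correction), which this sketch does not.

```python
def cdf(counts, y):
    """Empirical P(count <= y); equals 0 for y < 0."""
    return sum(1 for c in counts if c <= y) / len(counts)


def response_type_proportions(control, treatment, j):
    """Estimate pi[(y, z)] for y = 0..j non-sensitive 'yes' answers and
    z = sensitive trait (1) or not (0), assuming no design effect."""
    pi = {}
    for y in range(j + 1):
        pi[(y, 1)] = cdf(control, y) - cdf(treatment, y)
        pi[(y, 0)] = cdf(treatment, y) - cdf(control, y - 1)
    return pi


def possible_design_effect(pi, tol=1e-12):
    """Return response types with negative estimated shares (a red flag)."""
    return sorted(k for k, v in pi.items() if v < -tol)


ok = response_type_proportions([0, 1, 1, 2], [0, 1, 2, 3], j=2)
suspect = response_type_proportions([0, 1, 1, 2], [0, 0, 0, 3], j=2)
print(possible_design_effect(ok))       # []
print(possible_design_effect(suspect))  # [(0, 1)]
```

In the "suspect" case, adding the sensitive item seems to *lower* many respondents' counts, which is what a design effect looks like.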


Third, standard #IPV modules ask multiple [often 7+] questions to capture one IPV typology; w/ lists we often measure only 1-2 Qs, not the full battery

This matters for measurement & for understanding program impacts ... & frequency [the intensive margin] is also important!

11/n
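A toy illustration with made-up responses: a full battery is typically combined into an "any act" indicator (the extensive margin), and item frequencies give the intensive margin. Targeting only one item captures a narrower construct, and a yes/no list item carries no frequency information at all. The 7-item layout and values here are hypothetical.

```python
# Hypothetical data: one row per woman, one column per act in a 7-item
# physical IPV battery; values are past-12-month frequencies (0 = never).
battery = [
    [0, 0, 0, 0, 0, 0, 0],
    [2, 0, 1, 0, 0, 0, 0],
    [0, 0, 0, 3, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [0, 1, 0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
    [5, 2, 1, 0, 1, 0, 0],
    [0, 0, 0, 0, 0, 0, 0],
]
n = len(battery)

# Extensive margin, full battery: experienced at least one of the 7 acts.
prevalence_full = sum(any(f > 0 for f in row) for row in battery) / n
# Extensive margin, single item (what a 1-question list would target).
prevalence_item1 = sum(row[0] > 0 for row in battery) / n
# Intensive margin: average number of incidents, which a yes/no list cannot recover.
mean_frequency = sum(sum(row) for row in battery) / n

print(prevalence_full, prevalence_item1, mean_frequency)  # 0.5 0.25 2.0
```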


Finally, some interesting ethical questions around fielding a survey w/ only lists: do you follow the full ethical protocol & offer women referrals? Do we lose this benefit when using more anonymous methods?

12/n


Overall, really happy to see more experimentation on measurement issues in this field. There's a lot we can do & I firmly believe policy & programming will benefit from these advances & innovations!

end/


![Figure attached to tweet 4/n: list randomization vs audio-CASI vs face-to-face](https://pbs.twimg.com/media/Eb3GXboUEAAbNSi.png)

![Figure attached to tweet 6/n: direct comparisons of the three methods by country & type of violence](https://pbs.twimg.com/media/Eb3IMVQVAAAE_sg.png)

![Figure attached to tweet 7/n: under-reporting by background characteristics](https://pbs.twimg.com/media/Eb3J9ghVcAA9tuM.png)