If you (like me) see a 600B-parameter model and shriek, let me try to offer some consolation. Why should we care about ultra-large models?

1/n https://twitter.com/lepikhin/status/1278125364787605504

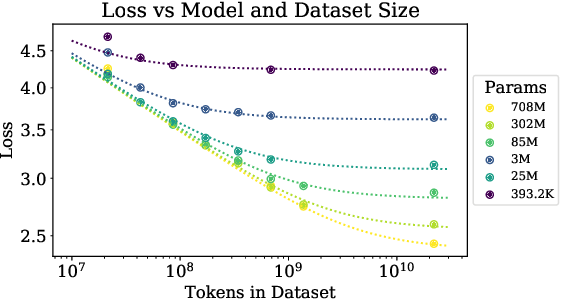

LM performance improves if we increase model size and data size together, but enters a regime of diminishing returns if either one is kept fixed. That is, adding more and more data while keeping the model small eventually stops helping. 2/n https://api.semanticscholar.org/CorpusID:210861095
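To make the diminishing-returns point concrete, here is a minimal sketch of a joint power law in model size and data size. The functional form and every constant below (`n_c`, `d_c`, `alpha_n`, `alpha_d`) are assumptions chosen for illustration, not values taken from this thread, and the function name `loss` is hypothetical.

```python
def loss(n_params, n_tokens, n_c=8.8e13, d_c=5.4e13, alpha_n=0.076, alpha_d=0.095):
    """Illustrative joint power law in parameters (N) and tokens (D).

    All constants are assumed placeholders for this sketch.
    """
    model_term = (n_c / n_params) ** (alpha_n / alpha_d)  # shrinks as the model grows
    data_term = d_c / n_tokens                            # shrinks as the data grows
    return (model_term + data_term) ** alpha_d


# Fixed small model, 10x more data each step: the gain per step keeps shrinking.
small_model = 1e8
for tokens in (1e9, 1e10, 1e11, 1e12):
    print(f"N={small_model:.0e}, D={tokens:.0e}: loss={loss(small_model, tokens):.3f}")

# Growing the model together with the data keeps paying off.
print(f"N=1e9, D=1e11: loss={loss(1e9, 1e11):.3f}")
```

Under these assumed constants, once the data term is much smaller than the model term, the loss is pinned by the model size, which is the plateau the tweet describes.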

Since large LMs converge faster, training a large model but stopping early actually saves *training* compute. Don’t be bummed about the *inference* time, because large models are more compressible! 3/n https://twitter.com/Eric_Wallace_/status/1235616760595791872

So large models have these nice properties, but they are accessible to the broader community only if they are compressed as well. Is it time for every ultra-large model to be released together with a matching compressed version? 4/4 https://roberttlange.github.io/posts/2020/06/lottery-ticket-hypothesis/
