Interested in trying out offline RL? Justin Fu's blog post on designing a benchmark for offline RL, D4RL, is now up: https://bair.berkeley.edu/blog/2020/06/25/D4RL/

D4RL is quickly becoming the most widely used benchmark for offline RL research! Check it out here: https://github.com/rail-berkeley/d4rl

An important consideration in D4RL is that datasets for offline RL research should *not* just come from near-optimal policies obtained with other RL algorithms, because this is not representative of how we would use offline RL in the real world. D4RL includes several types of datasets.

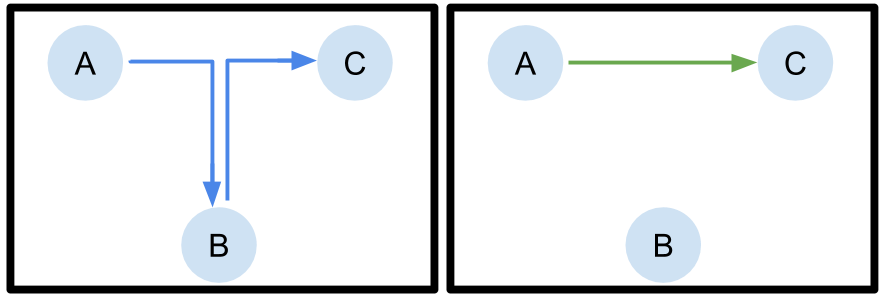

"Stitching" data contains no trajectory that accomplishes the full task, only trajectories that each accomplish part of it. The offline RL method must stitch these together, attaining much higher reward than the best trial in the dataset.
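To make the stitching idea concrete, here is a toy sketch. It does not use D4RL or any of its tasks. It assumes a hypothetical 5-state chain where the task is to get from state 0 to state 4, and a dataset of two partial trajectories (0 to 2, and 2 to 4), so no single trajectory goes from start to goal. The learner is plain tabular Q-learning run over the fixed dataset, with the max restricted to actions that appear in the data. That restriction is a simple illustrative choice, not the method of any particular offline RL paper.

```python
import numpy as np

# Hypothetical toy chain: states 0..4, action 0 = left, action 1 = right.
N_STATES, N_ACTIONS = 5, 2
GOAL, GAMMA = 4, 0.9


def make_dataset():
    """Two partial trajectories as (state, action, reward, next_state, done)."""
    first_half = [(0, 1, 0.0, 1, False), (1, 1, 0.0, 2, False)]   # 0 -> 2, no reward
    second_half = [(2, 1, 0.0, 3, False), (3, 1, 1.0, 4, True)]   # 2 -> 4, reaches goal
    return first_half + second_half


def offline_q_learning(dataset, n_iters=50):
    """Tabular Q-learning on a fixed dataset, with no environment interaction."""
    q = np.zeros((N_STATES, N_ACTIONS))
    seen = np.zeros((N_STATES, N_ACTIONS), dtype=bool)
    for s, a, _, _, _ in dataset:
        seen[s, a] = True
    for _ in range(n_iters):
        for s, a, r, s2, done in dataset:
            if done or not seen[s2].any():
                target = r
            else:
                # Bootstrap only from actions that appear in the data at s2.
                target = r + GAMMA * q[s2][seen[s2]].max()
            q[s, a] = target
    return q, seen


def greedy_rollout(q, seen, start=0, max_steps=10):
    """Follow the greedy in-dataset action on the chain and return visited states."""
    s, path = start, [start]
    for _ in range(max_steps):
        if s == GOAL or not seen[s].any():
            break
        a = int(np.argmax(np.where(seen[s], q[s], -np.inf)))
        s = min(N_STATES - 1, s + 1) if a == 1 else max(0, s - 1)
        path.append(s)
    return path


q, seen = offline_q_learning(make_dataset())
print(greedy_rollout(q, seen))  # [0, 1, 2, 3, 4]
```

The value learned at the end of the first trajectory bootstraps from the start of the second, so the greedy policy reaches the goal from state 0 even though no trajectory in the data does.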

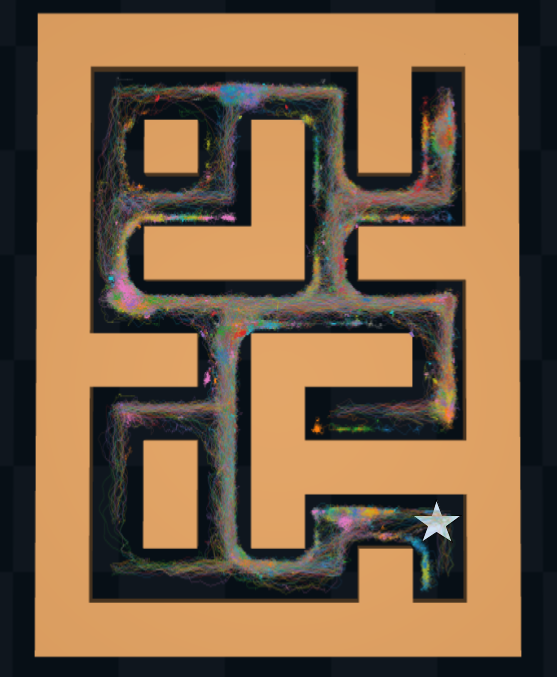

D4RL presents multiple navigation tasks that allow studying this type of data, including navigation with the ant robot through a maze, and driving with Carla. Here is an example visualization of the trajectories for the ant maze.

D4RL also includes tasks that reflect realistic use cases of offline RL: control of a robotic hand with human demonstrations (from @aravindr93's DAPG paper), vision-based driving in Carla, traffic control with the Flow traffic simulator, etc.
