Bias in AI has been a big topic for years. Because biased humans build algorithms, which do the work of many people (and people trust computers to spit out the truth), those biases are magnified. The “simple” algorithms are often the least tested, and irrelevant data (race, gender) can be used against you.

This week, I reported on what bias in AI means for fashion. I was surprised that while this topic has been well documented broadly, it hadn’t been written about much in this context. Here’s what I learned (thread): https://www.voguebusiness.com/technology/as-fashion-resets-its-algorithms-should-too

Data needs to be updated. If your AI has seen discriminatory content and thinks it's acceptable, it will reproduce that. AI must adapt as the business adapts. — @LadyAshBorg, CEO @vue_ai

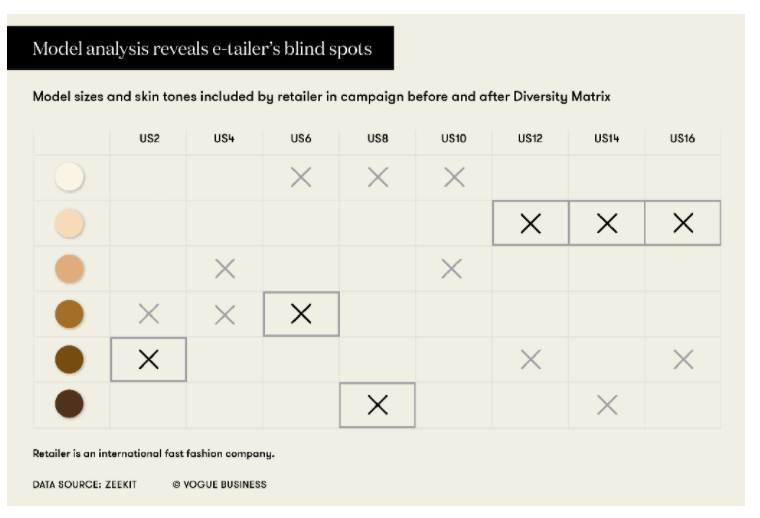

Product images matter. If you ask Google what the average customer looks like, the results suggest that only 1 in 25 is plus-size or has dark skin, because of brand catalogues. This is not reality, but these visuals represent the data of the internet. — Yael Vizel, @zeekit_app

Even brands that try to represent diverse models have blind spots. Zeekit's Diversity Matrix charts representation of body type and skin tone on ecommerce sites. Here’s a before/after from one brand’s model analysis (the boxes are gaps in representation that they discovered).

Consumers can reinforce biases in search engines, so companies need to monitor and adjust automated content. For example, if searches for “love” return only straight couples, this reinforces the notion that only heterosexual couples are “normal”. —Jill Blackmore Evans, @pexels

Marketing based on demographics like race or gender can introduce bias, especially if that data isn’t relevant (unless customers specifically say they want it incorporated). Focusing on customer behavior is more effective, and makes more money. —Jessica Graves, @sefleuria

Simply avoiding explicit use of gender or skin tone data (“fairness through unawareness”) may not be sufficient; it ignores implicit correlations. Data inputs need to be diverse to avoid a biased feedback loop. —Nadia Fawaz, fairness in AI, @Pinterest
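To make the “implicit correlations” point concrete, here is a minimal toy sketch with entirely hypothetical data (the shoppers, the `zip_code` proxy, and the promotion rule are illustrative assumptions, not anything Pinterest described). The rule never looks at the protected attribute, yet because the proxy it does use correlates with that attribute, the two groups end up seeing the promotion at very different rates.

```python
# Hypothetical toy data: each shopper has a protected attribute ("group")
# that the rule never reads, plus a proxy feature ("zip_code") that it does read.
shoppers = [
    {"group": "A", "zip_code": "10001"},
    {"group": "A", "zip_code": "10001"},
    {"group": "A", "zip_code": "10002"},
    {"group": "B", "zip_code": "20001"},
    {"group": "B", "zip_code": "20001"},
    {"group": "B", "zip_code": "10002"},
]


def blind_rule(shopper):
    # "Fairness through unawareness": decide who sees a (hypothetical)
    # promotion using only zip_code, never the protected group.
    return shopper["zip_code"].startswith("1")


def selection_rate(group):
    # Share of a group's shoppers that the blind rule selects.
    members = [s for s in shoppers if s["group"] == group]
    shown = [s for s in members if blind_rule(s)]
    return len(shown) / len(members)


rates = {g: selection_rate(g) for g in ("A", "B")}
print(rates)  # group A is selected far more often than group B
```

Removing the sensitive column did not remove the disparity, because the proxy carried the same signal. That is why auditing outcomes per group, and diversifying the input data, matters more than simply deleting fields.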

Still, data and tech can’t solve biased teams. Plus, diverse representation drives loyalty. “In this day and age, [loyalty] is super important and diminishing quickly, so whatever brands can do on the tech side and the human side is really important.” —Zena Hanna, @zenadigital

“It comes down to who is building these systems. If the diversity around the table who is testing and training them is all homogeneous? Good luck. You are almost guaranteeing that you’ll end up with a biased system.” @falonfatemi, @nodeio

The full story is in @voguebusiness, and if you have more questions about this topic for the AI experts, write to us at [email protected] and we’ll answer them on our Instagram at the end of the week. https://www.instagram.com/voguebusiness/?hl=en

And thank you to all those who shared insights, both named and unnamed, for the piece, including @karinnanobbs, @Dstillery chief data scientist Melinda Han Williams, @techwithtaz and others. Way more to say but here's the link https://www.voguebusiness.com/technology/as-fashion-resets-its-algorithms-should-too
