I'm excited to report our new preprint (w/ @YuliaOganian and Edward Chang) is now out! This study uses a rare dataset of high-density grid recordings in both primary auditory cortex and STG to investigate speech processing. https://www.biorxiv.org/content/10.1101/2020.06.08.121624v1

I am grateful to our participants and my collaborators for their contributions. The purpose of our study was to understand how speech features are represented across the entire auditory cortex, including what representations exist where, and differences in temporal dynamics.

While many groups have looked at these questions using noninvasive methods, it is difficult to obtain the spatiotemporal resolution necessary to look at precise feature maps and their temporal response properties.

In humans, the primary auditory cortex is typically not easy to record from with ECoG grids because of its location. Grids can only be placed there when it's clinically necessary to dissect the lateral fissure (e.g. opercular or insular tumors on the language dominant side).

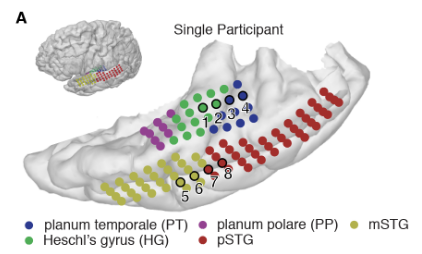

Here, we were able to record from 9 participants with simultaneous coverage of the temporal plane and lateral STG, allowing us to sample from a broad region encompassing primary auditory and parabelt regions. An example of coverage for one participant is shown below.

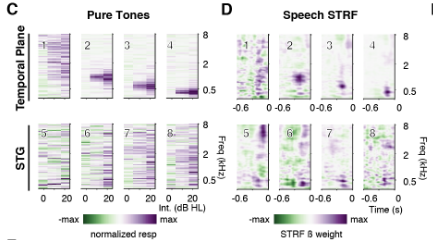

One of the questions we had was to what extent responses in "higher order" auditory cortex (for example, on the superior temporal gyrus or STG) were similar to responses to simple stimuli such as pure tones.

For a subset of participants, we recorded responses to both speech and pure tones. We found beautiful narrow-band tuning curves on the temporal plane whose tuning was recapitulated in our speech-derived spectrotemporal receptive fields.

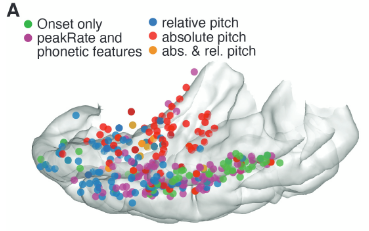

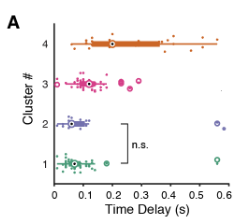

We then compared feature tuning across all electrodes and brain areas, using multivariate linear models that incorporated both acoustic and linguistic features of speech.
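For readers who haven't worked with this kind of encoding model, here is a minimal sketch of one common approach: ridge regression on time-lagged stimulus features. It runs on synthetic data and is not the analysis code from the preprint. The function names (`lagged_design`, `fit_ridge`), the number of lags, and the regularization value are all assumptions chosen for illustration.

```python
import numpy as np

def lagged_design(features, n_lags):
    """Stack time-delayed copies of a (time x n_features) matrix.

    Column block `lag` holds the features shifted forward by `lag` samples,
    so each response sample is predicted from the recent stimulus history.
    """
    n_times, n_feats = features.shape
    X = np.zeros((n_times, n_feats * n_lags))
    for lag in range(n_lags):
        X[lag:, lag * n_feats:(lag + 1) * n_feats] = features[:n_times - lag]
    return X

def fit_ridge(X, y, alpha):
    """Closed-form ridge regression: w = (X'X + alpha*I)^-1 X'y."""
    n_cols = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_cols), X.T @ y)

# Synthetic stand-in for stimulus features and one electrode's response
# (hypothetical data, not from the study).
rng = np.random.default_rng(0)
n_times, n_feats, n_lags = 2000, 4, 5
features = rng.standard_normal((n_times, n_feats))
true_w = rng.standard_normal(n_feats * n_lags)

X = lagged_design(features, n_lags)
response = X @ true_w + 0.1 * rng.standard_normal(n_times)

# Fit on the first part of the recording, evaluate on held-out data.
train, test = slice(0, 1500), slice(1500, None)
w = fit_ridge(X[train], response[train], alpha=1.0)
pred = X[test] @ w
r = np.corrcoef(pred, response[test])[0, 1]
print(f"held-out correlation: {r:.3f}")
```

Fitting separate models with different feature sets (for example, a spectrogram versus a single onset feature) and comparing their held-out correlations is one way to ask which features best explain a given electrode.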

We found an anatomical separation: absolute pitch was encoded on the temporal plane, while relative pitch was more diffusely localized and represented more laterally. This extends the findings of Tang et al. 2017 (https://doi.org/10.1126/science.aam8577), which were in lateral STG.

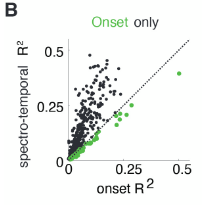

Interestingly, the sentence onset region previously observed in Hamilton et al. 2018 (https://doi.org/10.1016/j.cub.2018.04.033) was *only in the pSTG*, and not observed on the temporal plane. These electrodes are better explained by *one feature* (sentence onset) than by larger spectrogram models.

Even more strikingly, the onset region is anatomically separate from primary auditory cortex, but has response latencies that are just as fast. We speculate that this region may receive parallel feedforward inputs rather than just inheriting responses from medial primary areas.

What does this all mean? We think this suggests that acoustic and linguistic feature representations constitute a distributed mosaic of specialized processing units that work together in parallel to allow us to perceive speech.

This project has been many years in the making. I helped collect the first dataset in 2014 when I first started my postdoc. We have iterated over many questions with this dataset with help, support, and feedback from many talented lab members, collaborators, and mentors.

Any questions, please let me know!
