OK I'm going to weigh in on the tagging/content moderation conversation happening right now regarding Archive of Our Own. To be clear, this is me as a content moderation researcher who has also studied the design of AO3, NOT me as a member of the OTW legal committee. [Thread]

To clarify the issue for folks: racism is a problem in fandom. Alongside broader philosophical and structural concerns about the OTW, there have been suggestions to add required content warnings or other mechanisms to deal with racism in stories posted to the archive.

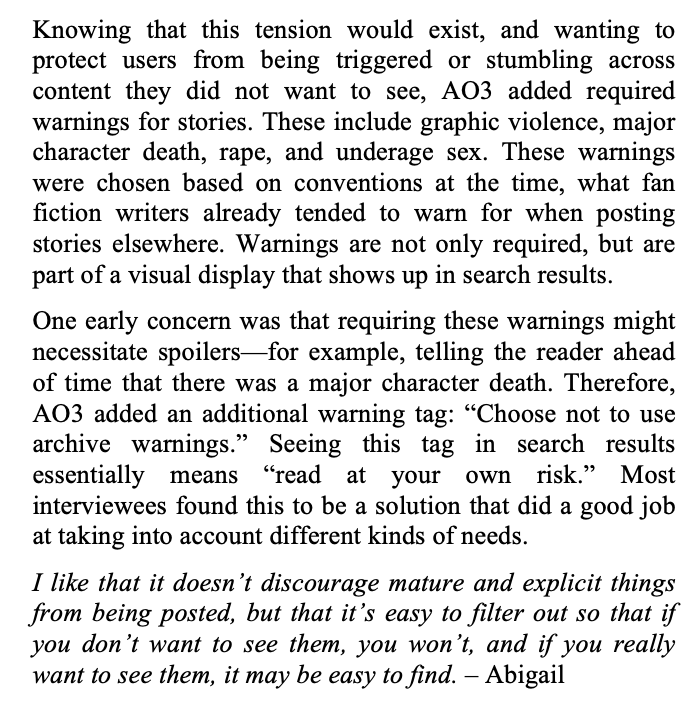

Here's a description of the content warning system from a paper I published about the design of AO3, in which I used this as an example of designing to mitigate the value tension of inclusivity versus safety. https://cmci.colorado.edu/~cafi5706/CHI2016_AO3_Fiesler.pdf

The idea is that certain kinds of objectionable content are allowed on the archive as long as they're properly labeled. A work will not be removed for containing X, but it can be removed for not being tagged properly for containing X. That way, users who don't want to see X can avoid content labeled with X.
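To make the "label it or it can be removed" rule concrete, here is a minimal Python sketch of the logic. It is a hypothetical model, not AO3's actual code: the `Work` class, the `REQUIRED_WARNINGS` categories, and the functions `violates_policy` and `visible_to` are invented for illustration, and the sketch simplifies away details of how warnings work on the real site.

```python
from dataclasses import dataclass, field

# Hypothetical categories that require a warning (placeholders, not AO3's list).
REQUIRED_WARNINGS = {"violence", "character death"}


@dataclass
class Work:
    title: str
    contains: set[str]  # content actually present, as a moderator would judge it
    warnings: set[str] = field(default_factory=set)  # warnings the author applied


def violates_policy(work: Work) -> bool:
    """Removable only if required content is present but NOT labeled.

    Having X is never the violation; failing to label X is.
    """
    missing = (work.contains & REQUIRED_WARNINGS) - work.warnings
    return bool(missing)


def visible_to(work: Work, excluded: set[str]) -> bool:
    """Readers filter on labels, so a work is hidden if it carries any excluded warning."""
    return not (work.warnings & excluded)


labeled = Work("A", contains={"violence"}, warnings={"violence"})
unlabeled = Work("B", contains={"violence"})

print(violates_policy(labeled), violates_policy(unlabeled))
print(visible_to(labeled, {"violence"}), visible_to(unlabeled, {"violence"}))
```

Note that the unlabeled work slips past the reader's filter, which is exactly why mislabeling, rather than the content itself, is what gets enforced. The catch is that someone still has to decide whether a work "contains" X, and that judgment depends on a shared definition of X.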

My students and I have studied content moderation systems in a number of contexts, including on Reddit and Discord, and I actually think that this system is kind of elegant and other platforms can learn from it. That said, it relies on strong social norms to work.

One of the big problems with content moderation is that not everyone has the same definition of what constitutes a rule violation. Like... folks on a feminist hashtag almost certainly have a different definition of "harassment" than folks on the GamerGate hashtag.

A few years ago we analyzed harassment policies on a bunch of different platforms, and usually it's just like "don't harass people." But okay, what does that mean? I guarantee you there are people on Twitter who think rape threats aren't harassment. https://dl.acm.org/doi/abs/10.1145/2957276.2957297

So a nice thing about communities moderating themselves (like on subreddits) is that they can create their own rules and have a shared understanding of what those rules mean. You can have a rule about harassment and, within your community, know what that means. https://cmci.colorado.edu/~cafi5706/icwsm18-redditrules.pdf

I've talked for a long time about the strong social norms in fandom and how they have allowed for really effective self-regulation, particularly around copyright. In fact, I wrote about this very recently, based on interviews conducted in 2014. https://cmci.colorado.edu/~cafi5706/group2020_fiesler.pdf HOWEVER:

Generally, I think social norms are not as strong in fandom as they used to be, in part because fandom has gotten bigger, in part because of generational differences, and in part because we're more spread out. (@BriannaDym & I wrote about this: https://journal.transformativeworks.org/index.php/twc/article/view/1583/1967 )

So the point here is that without those very strong shared norms, definitions differ: across sub-fandoms, across platforms, across people. That's true whether the thing we're defining is commercialism, harassment, or racism.

This isn't to say that there *shouldn't* be a required content warning for racism in fics, but I think it's important to be aware of how fraught enforcement will be, because no matter how racism is defined, a subset of fandom will not agree with that definition.

That said, design decisions like this are statements. When AO3 chose the required warnings, it was a statement about what types of content it is important to protect the community from. I would personally support a values-based decision that racism falls into that category.

Design also *influences* values. One example was AO3's decision to include the "inspired by" tag, which directly signaled (through design) that remixing fics without explicit permission was okay.

A design change to AO3 that forces consideration of whether there is racism in a story you're posting could have an impact on the overall values of the community by signaling that this consideration is necessary AND that you should be thinking of a community definition of racism.

Also important: content moderation has the potential to be abused. And I know that this happens in fandom, even around something as innocuous as copyright, since I've heard stories of harassment-based DMCA takedown campaigns.

There's a LOT of tension in fandom around public shaming as a norm enforcement mechanism, and I would want to see this feature used as a "gentle reminder" and not a way to drum people out of fandom. (See more about that here: https://cmci.colorado.edu/~cafi5706/group2020_fiesler.pdf)

That said, I think these reminders are needed. There will be a LOT of "uh, I didn't know that was racist," and that requires some education. That education is important, but it's also an additional burden on volunteer moderators who may be grappling with these questions themselves.

This is more a thread of cautions than solutions, unfortunately. Because before there can be solutions, we need: (1) an answer to a very hard question, which is "what is racism in fanfiction/fandom?" and this answer needs to come from a diverse group of stakeholders, and

(2) a solution for enforcement/moderation that both makes sure folks directly impacted by racism are involved AND ensures we're not asking for burdensome labor from already marginalized groups.

All of this comes down to: Content moderation is HARD for so many reasons, both on huge platforms and in communities. AO3 is kind of a unique case with its own problems but I am optimistic about solutions because so many people care about the values in fandom.

I'll add more as ideas and thoughts come to me, but I'd love to hear thoughts from others on this situation.

Thanks to everyone for the response to this thread! Very interesting conversations. I've reproduced this thread on Tumblr for sharing there + easier reading. https://cfiesler.tumblr.com/post/621473604874469377/thoughts-on-ao3-from-a-content-moderation