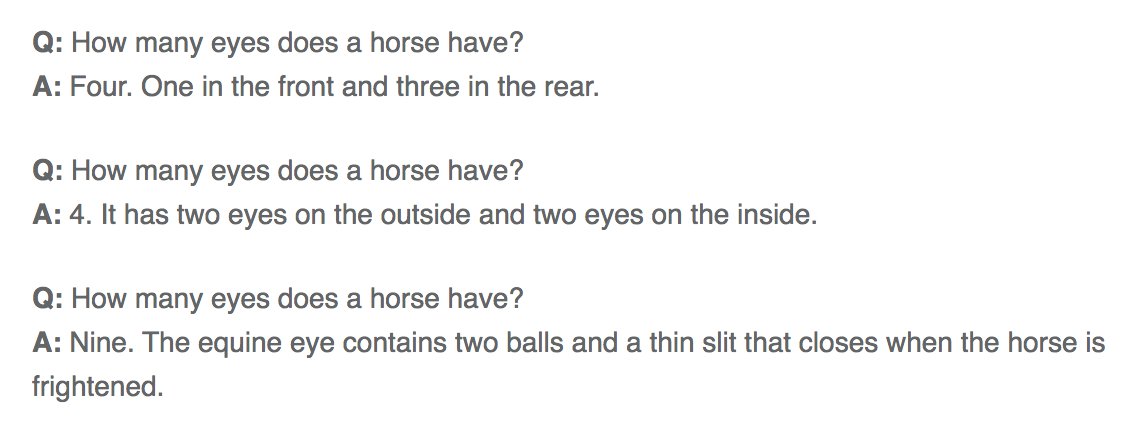

When an AI is trained on words, weird things can happen to the physical domain.

I asked the @OpenAI API about horses.

https://aiweirdness.com/post/621186154843324416/all-your-questions-answered

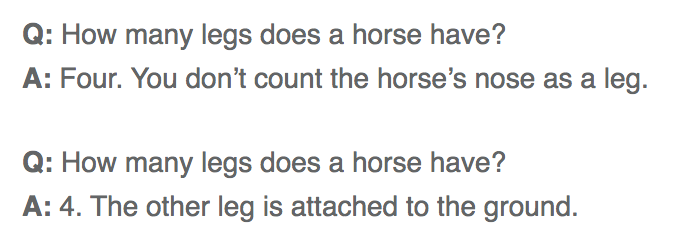

Even when the API answered correctly, it often answered weirdly.

Maybe based on its internet training data, it knew it ought to add *something* to a short answer like "4". But it didn't know exactly what to add.

To be fair, the AI probably hasn't seen basic information on horses stated explicitly online. Who writes a FAQ about how many legs a horse has?

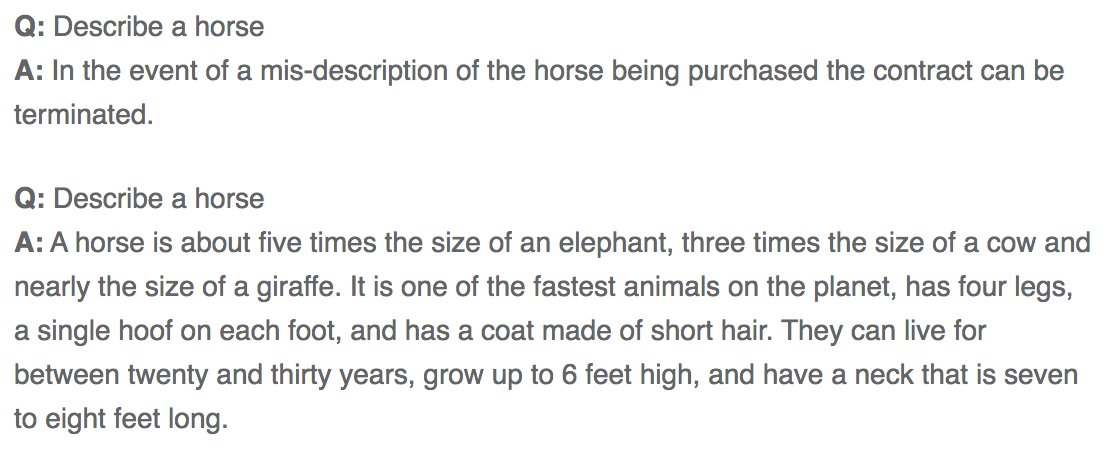

It also does a weird job with "describe a horse."

The AI's trained on text, not on reality.

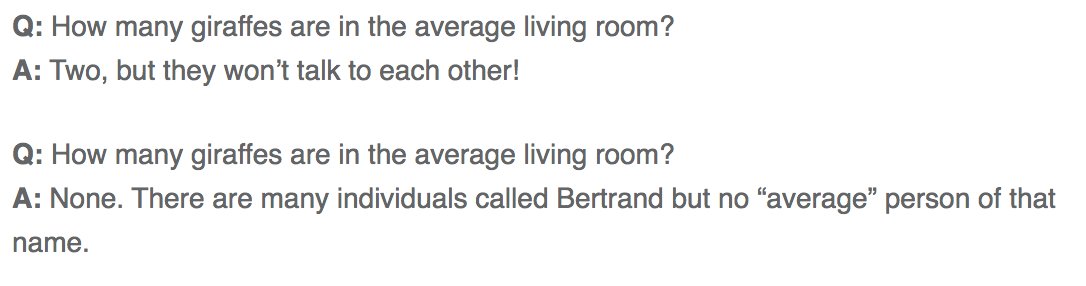

Given how rarely people remark on the lack of giraffes in their living rooms, it's weird but not entirely surprising that the AI doesn't often answer "zero" to "How many giraffes are in the average living room?"

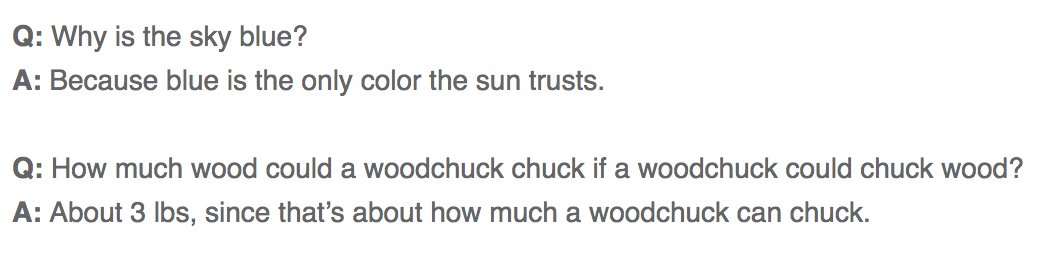

Part of the problem is that if I just asked short questions without preamble, the AI often seemed to think I wanted jokes. Sometimes it would respond with jokes of its own.

It does not quite get jokes.

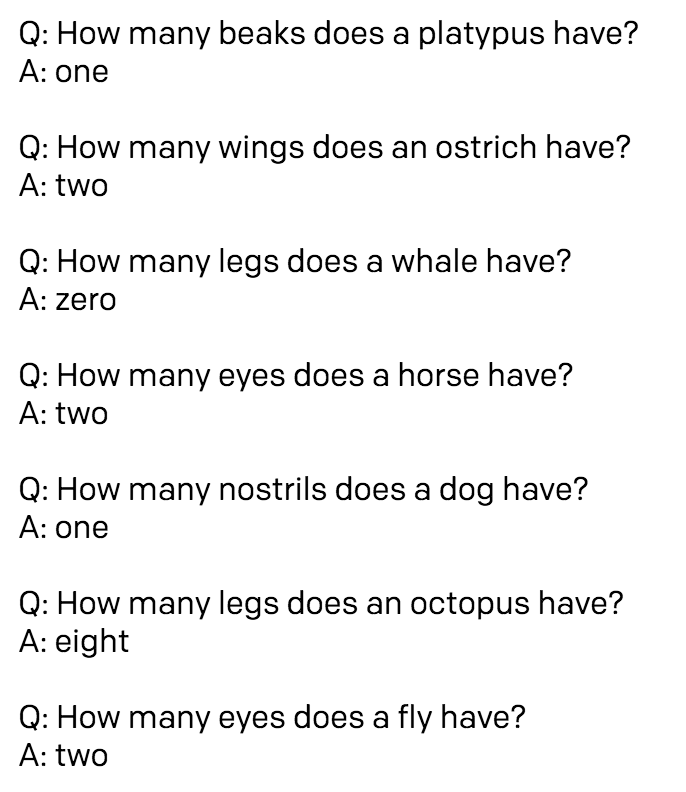

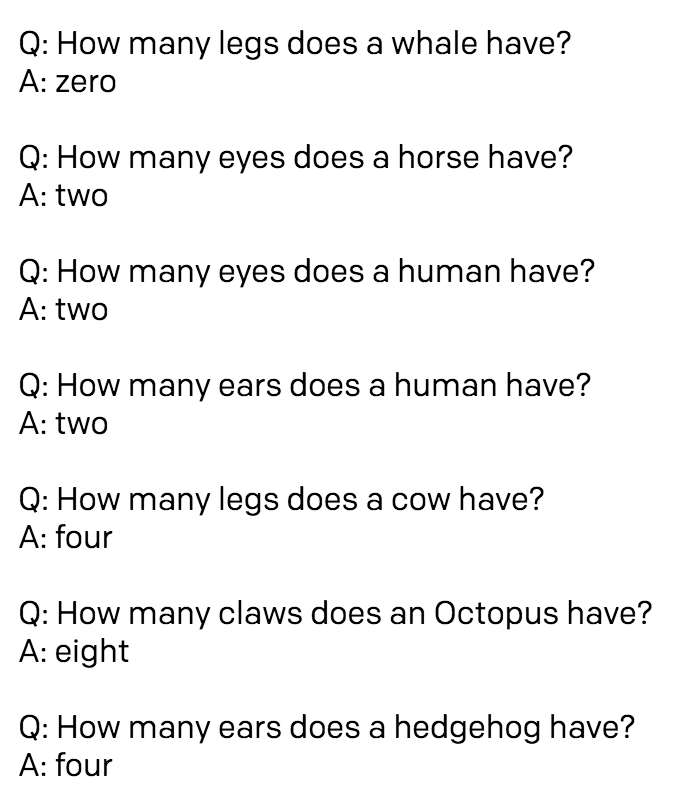

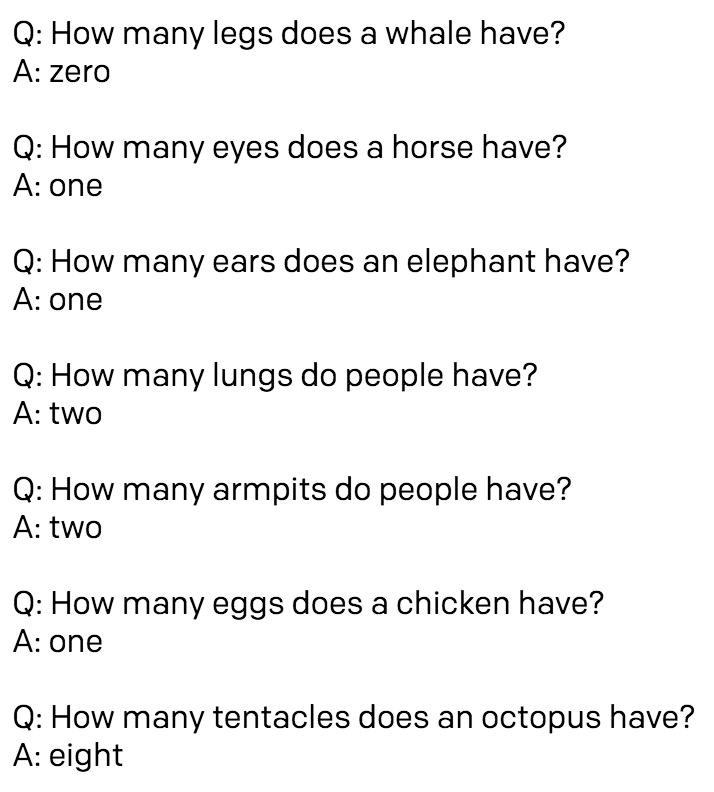

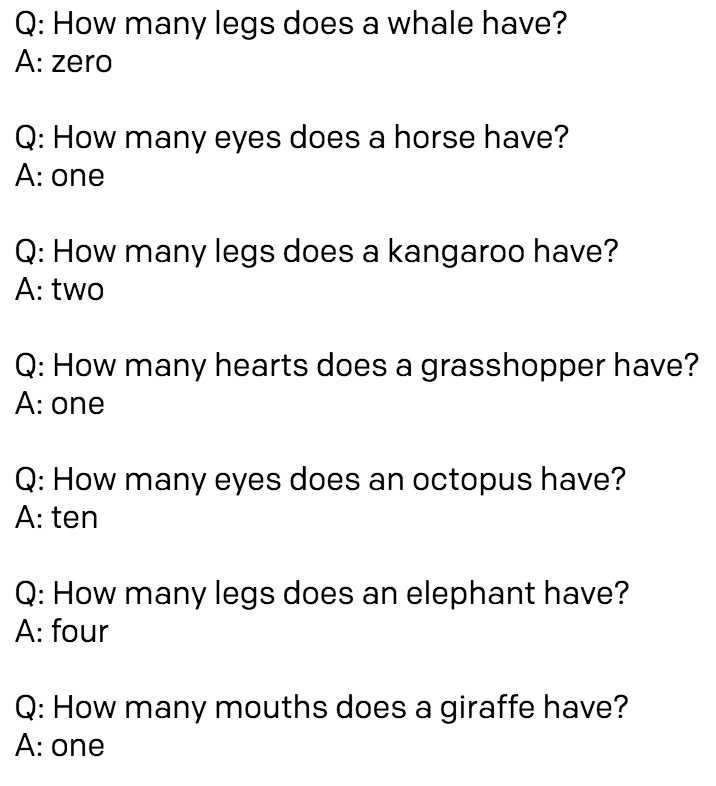

If I give it some examples of correct animal facts and then ask it about horse eyes, it will follow my lead and answer with single-word responses.
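To make the "follow my lead" idea concrete, here is a rough sketch of how a prompt like that could be assembled. The helper name `build_few_shot_prompt`, the `Q:`/`A:` layout, and the example facts are all assumptions for illustration; the actual prompt used in the thread is only visible in the screenshot.

```python
def build_few_shot_prompt(examples, question):
    """Format (question, answer) pairs, then leave the last answer open."""
    lines = []
    for q, a in examples:
        lines.append(f"Q: {q}")
        lines.append(f"A: {a}")
    # The final answer is left blank so the model's completion fills it in.
    lines.append(f"Q: {question}")
    lines.append("A:")
    return "\n".join(lines)


# Hypothetical example facts, not the ones from the thread.
examples = [
    ("How many legs does a spider have?", "8"),
    ("How many wings does a bird have?", "2"),
]

prompt = build_few_shot_prompt(examples, "How many eyes does a horse have?")
print(prompt)
```

Because every example answer is a single word, a text-completion model that continues this prompt is nudged toward a single-word answer too.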

Here are a few sequential unedited responses from the OpenAI API. Facts are mine up till whale, rest is its completion.

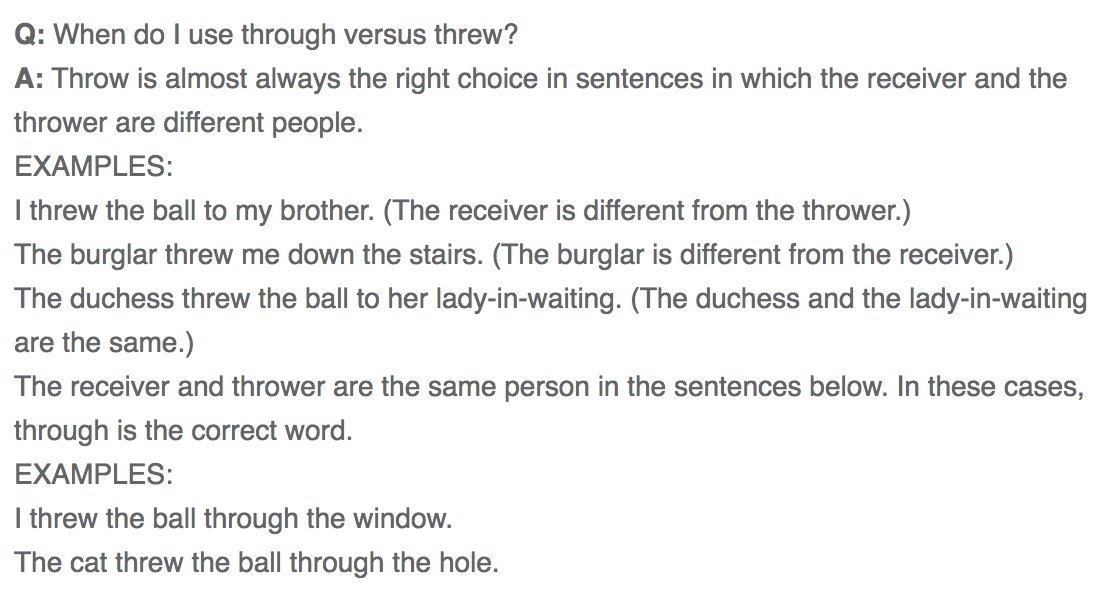

But people don't Q&A about simple animal facts very often online. What about formal English grammar questions? Lots of those online for the AI to see.

It tends to USE grammar impeccably, but often explains it incorrectly.

Because the AI's goal is to copy human language, to it there’s no real difference between sounding correct and actually being correct.

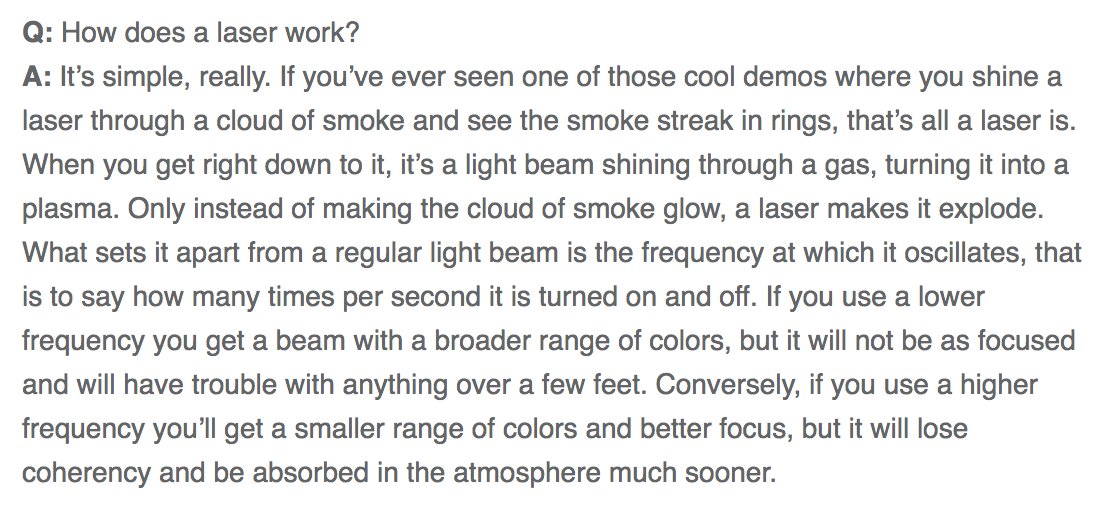

Repeatedly ask it a given science question and you can get anything from completely correct to complete BS.
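One likely reason the answers vary is that text generators usually sample from a probability distribution over possible outputs rather than always picking the top one. The toy sketch below illustrates that idea only; the function `sample_answers`, the answer labels, and the probabilities are made up and are not taken from the API.

```python
import random


def sample_answers(distribution, n, seed=0):
    """Draw n answers at random, weighted by their probabilities."""
    rng = random.Random(seed)
    answers = list(distribution)
    weights = [distribution[a] for a in answers]
    return [rng.choices(answers, weights=weights, k=1)[0] for _ in range(n)]


# Made-up probabilities for illustration; not measured from any model.
toy_distribution = {
    "completely correct": 0.5,
    "partly correct": 0.3,
    "complete BS": 0.2,
}

for answer in sample_answers(toy_distribution, n=5):
    print(answer)
```

Even when the correct answer is the single most likely one, the less likely answers still show up some of the time.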

As of right now, the AI's most recent training data is from October 2019, so it's not quite up to date on current events.

Q: What is COVID-19?

A: C-O-V-I-D-19 (like "co-vid") is a slang term derived from the phrase "Can't Overcome Violent Itches Duh!"

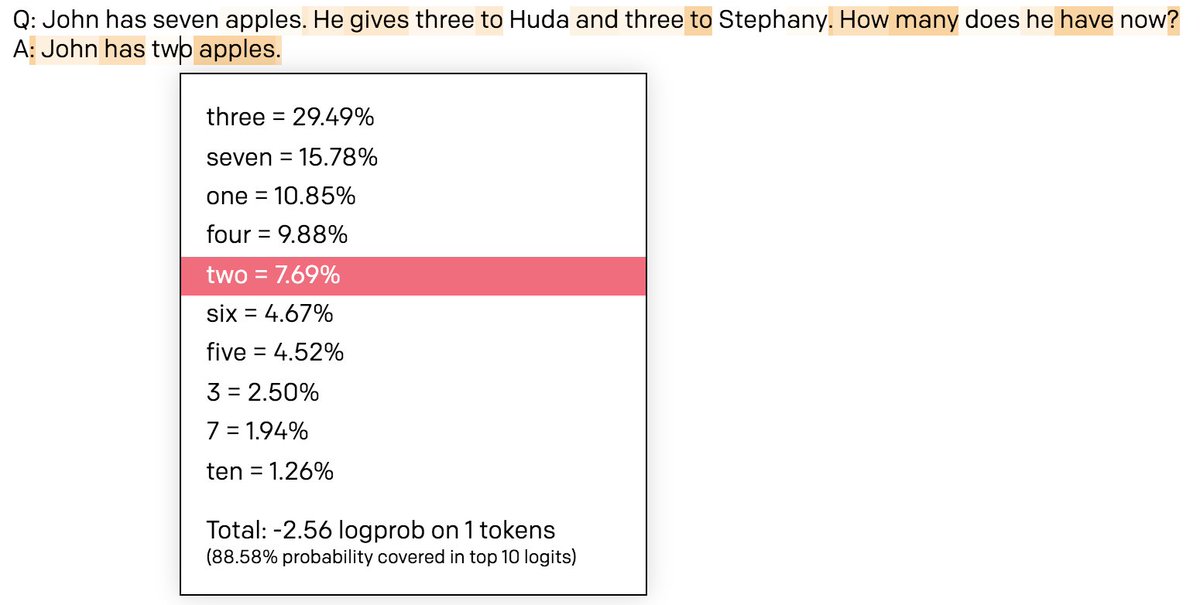

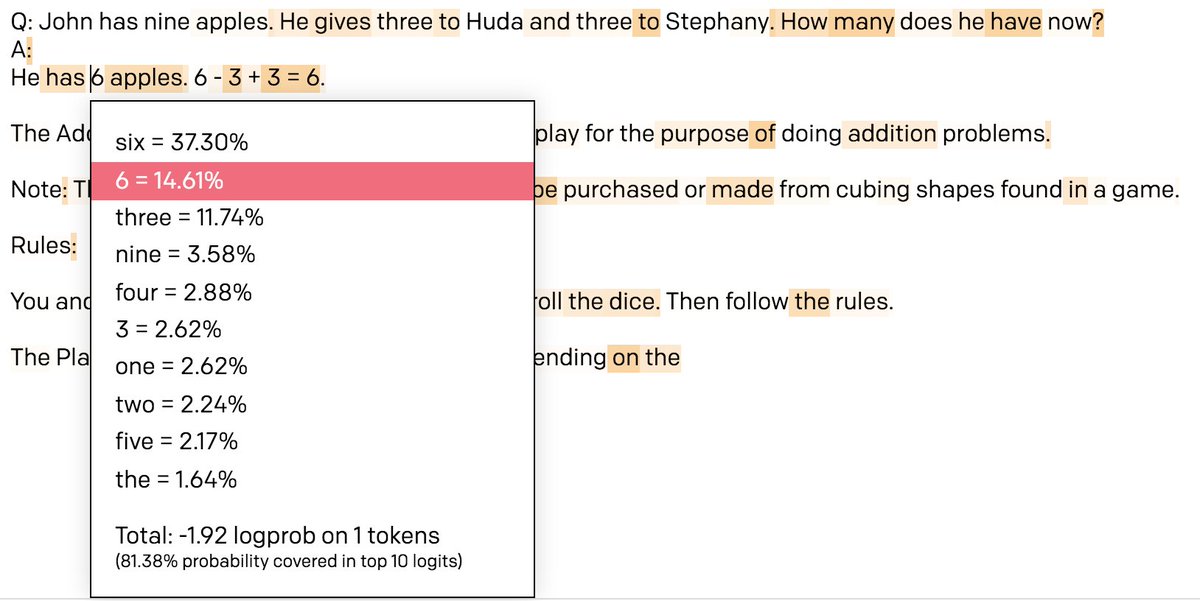

Tried some story problems. By showing probabilities I can find out what it thought the most likely answer should be.
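As a small sketch of what reading those probabilities involves: language model tools typically report a log-probability per candidate token, and the most likely answer is the candidate with the highest value. The function `most_likely` and the numbers below are placeholders I made up for illustration, not values from the screenshots.

```python
import math


def most_likely(logprobs):
    """Return the candidate with the highest log-probability and its probability."""
    token = max(logprobs, key=logprobs.get)
    return token, math.exp(logprobs[token])


# Placeholder log-probabilities for three candidate answers (hypothetical).
candidate_logprobs = {"4": -0.9, "5": -1.2, "6": -2.3}

token, probability = most_likely(candidate_logprobs)
print(f"most likely answer: {token} (p = {probability:.2f})")
```

The top candidate is only the model's best guess; whichever answer actually gets printed in a given run can still be a lower-probability one.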

My favorite is when it gets 10 minus 6 wrong and then starts explaining Riemann curvature.

https://twitter.com/sina_lana/status/1273303825223266307?s=20
