New study in @PNASNews on MOOC persistence: 2.5 years, 3 institutions ( @harvard, @mit, @stanford), 250 courses, over *250,000* participants. New insights on scale, global achievement gaps, open science, & personalization. A Thread 1/ https://www.pnas.org/content/early/2020/06/11/1921417117 ( @whynotyet, @emyeom)

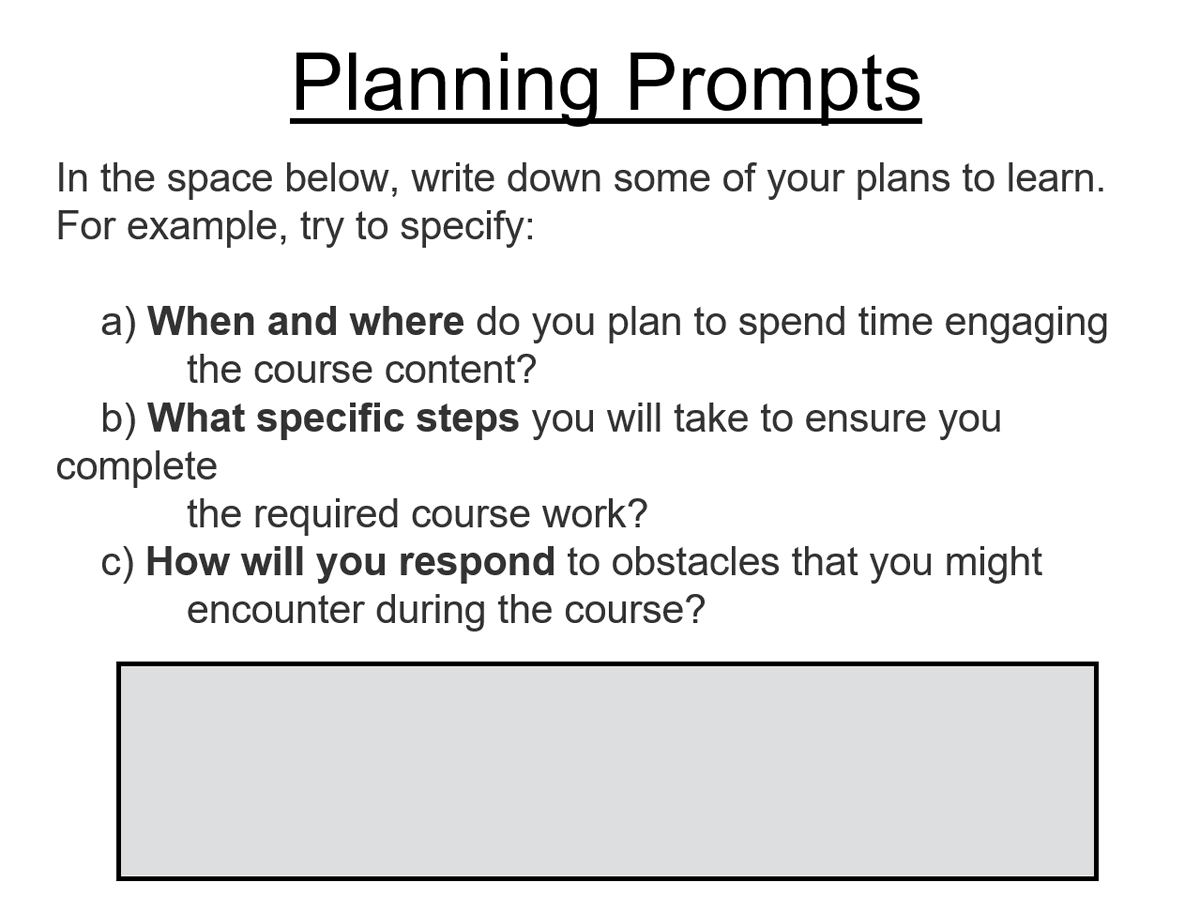

Five years ago, I met a postdoc at Harvard, @emyeom, interested in behavioral science. We decided to try out a planning prompt in a few MOOCs. The planning prompt is pretty straightforward: in a precourse survey, we ask folks to think through how they will complete the course. 2/

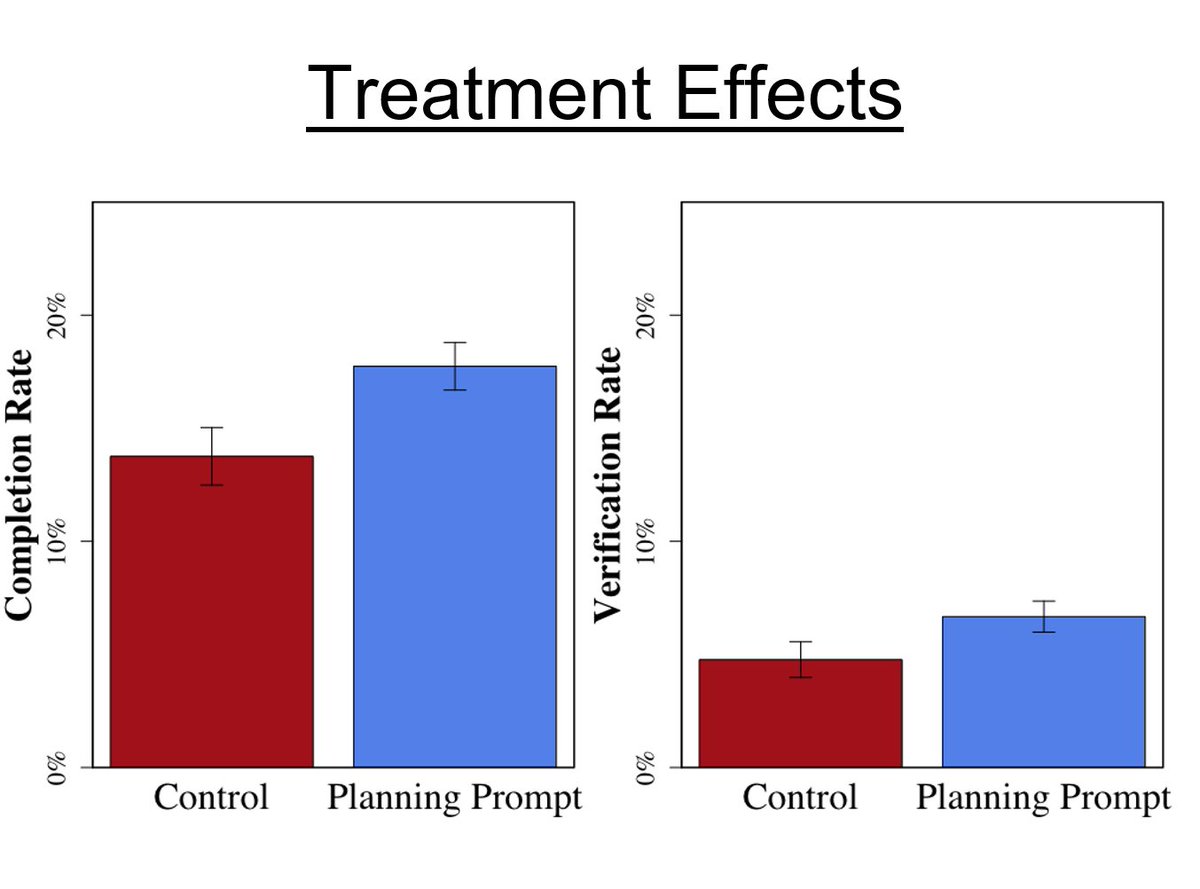

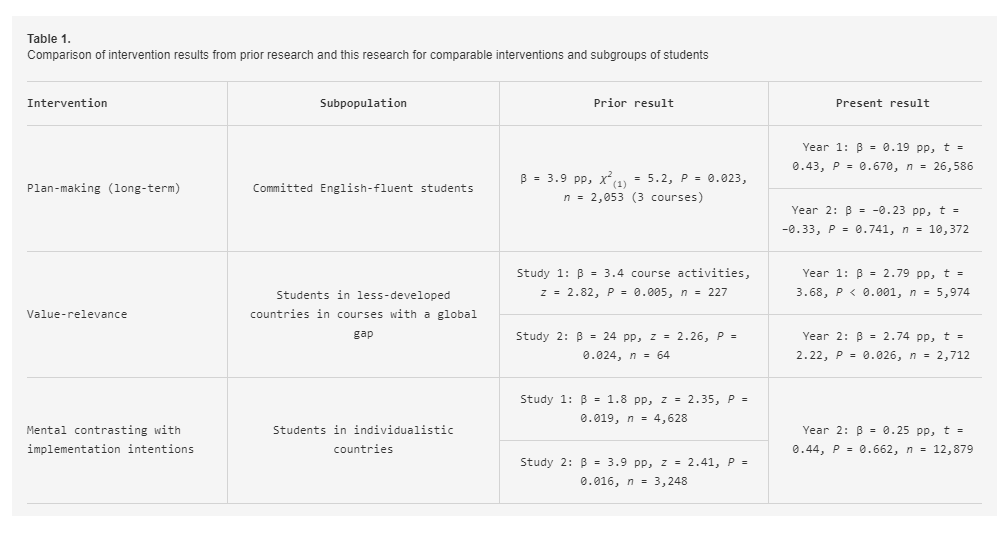

We implemented the study in three MOOCs with about 60,000 people, and over 2,000 met all of our inclusion criteria as serious learners intending to complete the course. We saw a big bump in completion rates and verification rates among those randomly assigned to planning. 3/

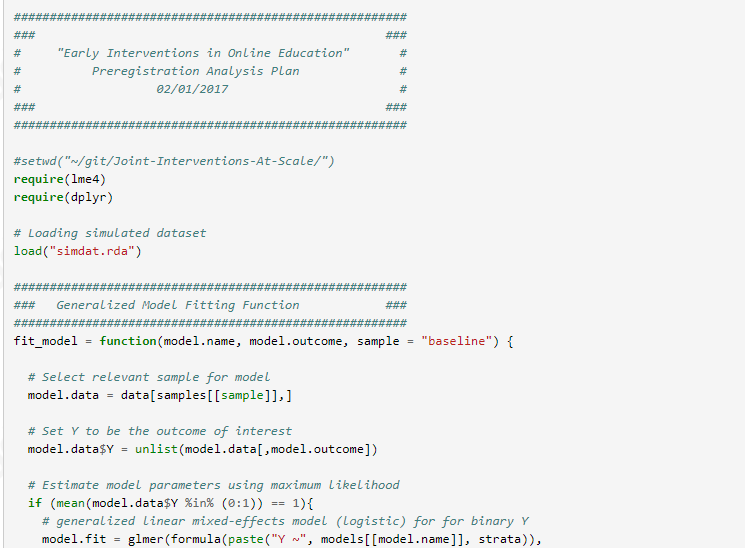

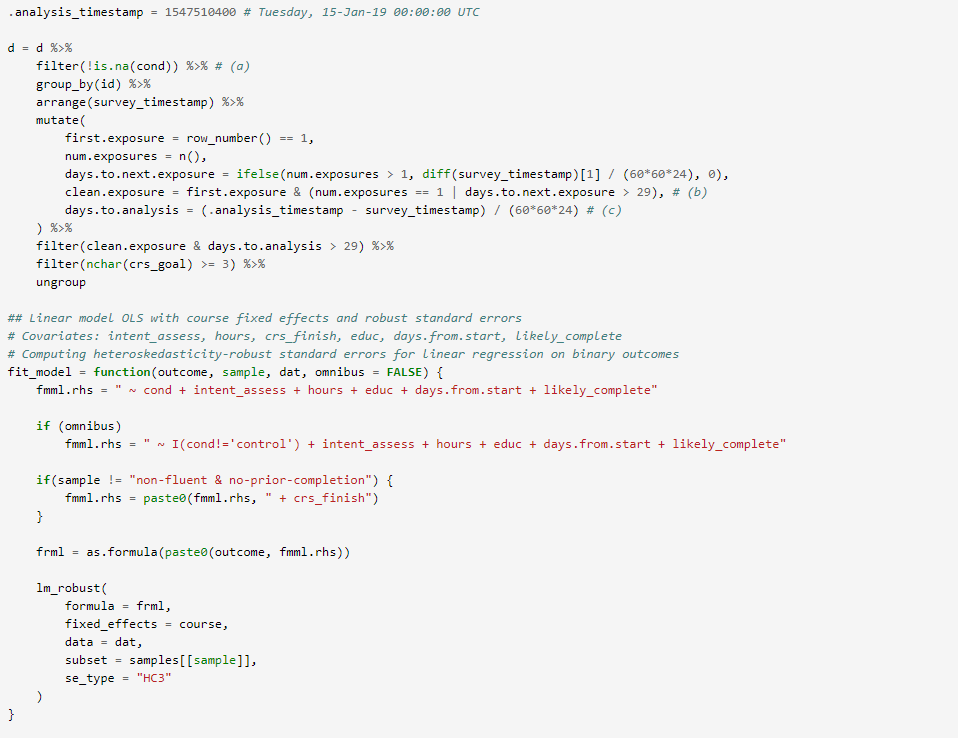

We tried to follow some emerging best practices in open science by pre-registering our hypotheses and analysis plans. You can see the timestamped plans at https://osf.io/mky8n/ . 4/

We publish the findings. https://www.researchgate.net/publication/314101810_Planning_prompts_increase_and_forecast_course_completion_in_massive_open_online_courses We're feeling good. Big study, pre-registered, solid results. 5/

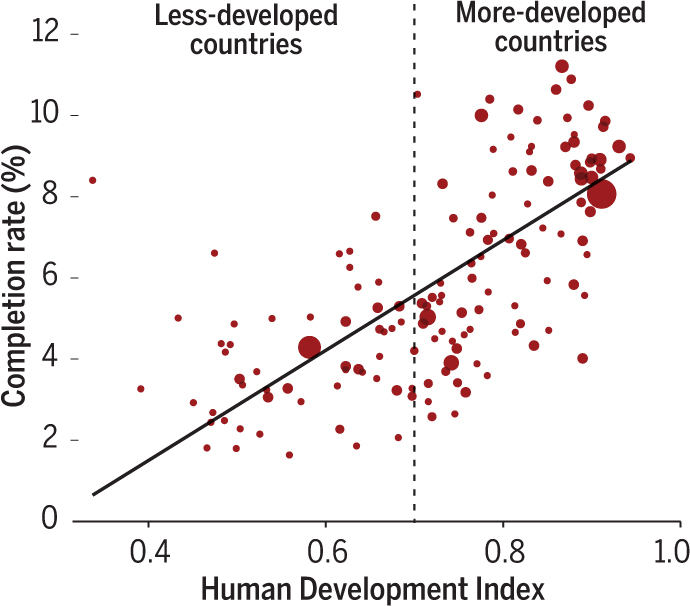

As that study is going on, I talk with @whynotyet and he tells me about another behavioral science intervention to address global achievement gaps. Both at Harvard/MIT and Stanford, we've found big gaps in completion between learners from different countries. 6/

Based on research into social identity threat, @whynotyet hypothesized that a value relevance affirmation--where learners identify personal values and write about how completing a course aligns with those values--could benefit learners from less developed countries. 7/

Rene tries these things at Stanford, and we replicate in a course at Harvard. Again, pretty solid effects on completion rate for our targeted group of learners in low-income countries. ( https://science.sciencemag.org/content/355/6322/251?ijkey=KZ/y7LGbH0qes&keytype=ref&siteid=sci $$) 8/

So now we have a paper in @SoLAResearch and a paper in @ScienceMagazine showing good effects from two interventions-- zero cost, minimal learner time investment, and high quality experimental studies showing medium to large effect sizes. We decide to go big. 9/

With @dustintingley, we get an @NSF EAGER grant ( https://www.nsf.gov/awardsearch/showAward?AWD_ID=1646976&HistoricalAwards=false) to test these two interventions at much greater scales. With support from Harvard, MIT, and Stanford, we embed these interventions in every MOOC from all three institutions. 10/

Once again, we pre-register our hypotheses ( https://osf.io/mtvh8 ), but this time, we go a step further. Since we know @edXOnline so well, we pre-register the exact analytic code that we plan to run. https://osf.io/5kvqf/ 11/

We crack open the data after about a semester, and none of our hypotheses pan out. On average, the planning intervention and value relevance affirmation don't improve completion. We have just failed to replicate our own findings. 12/

So we start doing some exploratory analysis, and we find some issues. Our exclusion criteria, designed in the pilot studies, are excluding nearly half the sample. Add those learners back in, and things look better. 13/

We find that planning helps short-term engagement measures, even if not long-term completion. In olden times, we might have just hidden our pre-analysis plans, prettied up our data as best we could, and tried to publish. But instead... 14/

We decided that we'd continue to run our experiment, pre-register a new set of hypotheses and analysis plans ( https://osf.io/7cq4s/ ), and see if we could replicate the best version of our results. We started calling our first data collection Wave 1, and our new set Wave 2. 15/

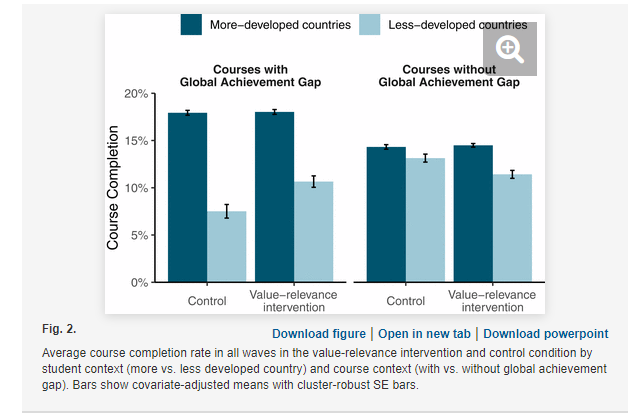

We wait another semester, gather new data, run our code, and again, our results are not very satisfying. While doing some post-hoc analysis, Rene realizes something interesting: while the global achievement gap is large on average, it doesn't appear in every course. 16/

In some courses, people from developing and developed countries complete at about the same rates, and in others there are big differences. Rene theorizes that the value relevance affirmation should only work in courses with a gap. That seems to hold true. But again... 17/

We decide that the principled way forward is to pre-register that hypothesis and keep running the study. We want to be able to predict in advance the conditions where an intervention works. So we run Wave 3, affectionately called the ThreeRegistration. https://osf.io/qkx3a/ 18/

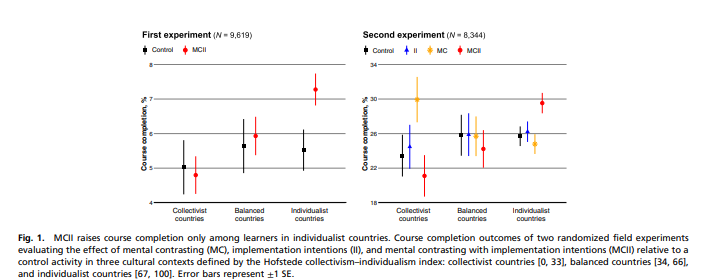

Along the way, Rene publishes another study with other co-authors testing another kind of self-regulation intervention: mental contrasting with implementation intentions. Again, good results in a few courses, but only in individualist countries. https://rene.kizilcec.com/wp-content/uploads/2017/05/kizilcec2017mcii.pdf 19/

So in Wave 3, we add the MCII intervention and a social accountability intervention. MCII asks people to imagine an obstacle and how they would get around the obstacle. Social accountability asks people to name someone who can help them persist in the course. 20/

We get the Wave 3 data back, and the MCII findings don't replicate. And remember, these pilot studies are not small; they have many thousands of people assigned to treatment in each study! 21/

By the end of Wave 3, we're pretty sure that we've got things more or less figured out. But we run a 4th Wave so we can get a second pre-registered confirmation of our Wave 3 findings and two+ full years of data to work with. https://osf.io/mp53d/ 22/

So in the end, we found that some of our interventions worked when replicated at scale, but with much smaller effect sizes. Planning prompts nudge learner engagement up a little for a few weeks. 23/

The value relevance affirmation (and, in post-hoc examination, several others) improved completion rates for learners from developing countries in courses with a global achievement gap. 24/

When compared head to head with our original studies, we have a trifecta of big, solid pilot studies that failed to replicate at their original effect sizes. 25/

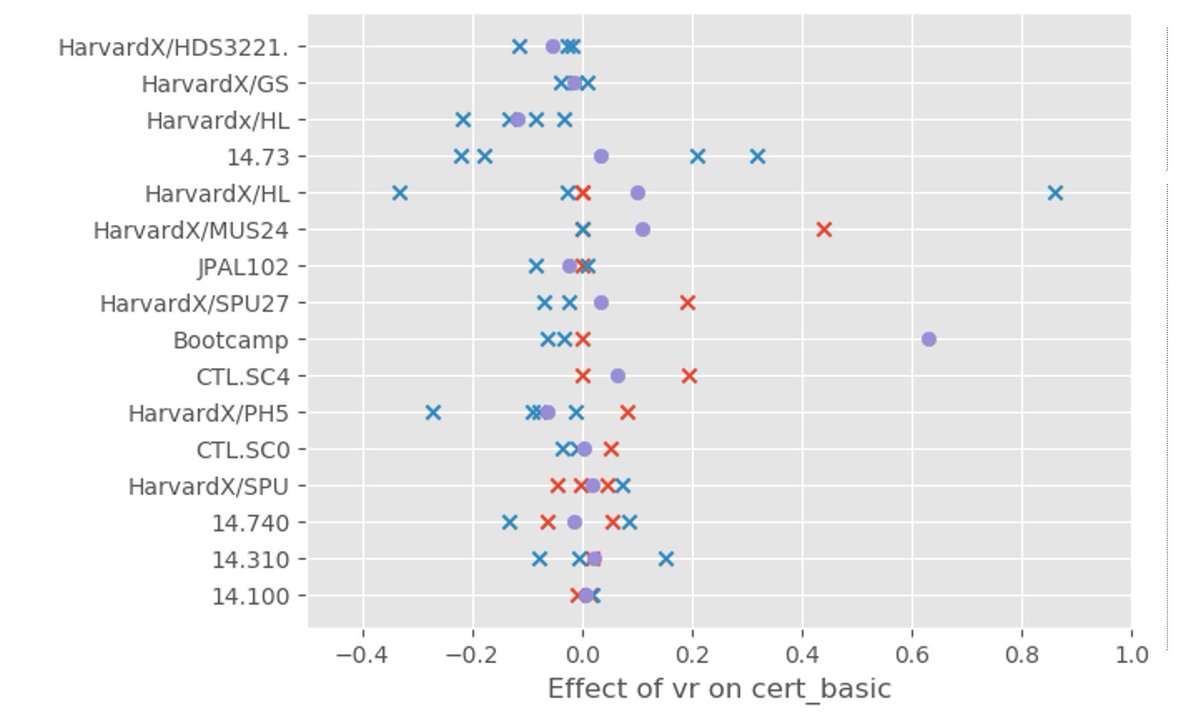

So, we have some interventions that work a little. Why don't we just, say, put the value relevance affirmation in courses with a global achievement gap? Well, it turns out it's impossible to predict which courses will have a global gap! (red Xs with gap, blue without) 26/

We tried to predict the presence of a global achievement gap by institution, by department, by subject, by course, and by using a giant machine learning prediction model with everything we could think of thrown in. Nothing worked. 27/

Even the same course run multiple times may have a gap in one run, but not the next. 28/
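For readers who want to see what "a course with a gap" means computationally, here is a rough Python sketch. It is not the paper's code: the record layout `(course_id, group, completed)` and the group labels `"developed"` / `"developing"` are assumptions made for illustration, and the paper's own gap definition may differ.

```python
from collections import defaultdict

def completion_gap_by_course(records):
    """Return {course_id: developed-group rate minus developing-group rate}.

    `records` is an iterable of (course_id, group, completed) tuples, where
    `group` is "developed" or "developing" (hypothetical labels) and
    `completed` is a bool. Courses missing either group are skipped.
    """
    # For each course and group, track [completions, learners].
    counts = defaultdict(lambda: {"developed": [0, 0], "developing": [0, 0]})
    for course_id, group, completed in records:
        tally = counts[course_id][group]
        tally[0] += int(completed)
        tally[1] += 1

    gaps = {}
    for course_id, groups in counts.items():
        dev_done, dev_n = groups["developed"]
        dvg_done, dvg_n = groups["developing"]
        if dev_n == 0 or dvg_n == 0:
            continue  # no basis for comparison in this course
        gaps[course_id] = dev_done / dev_n - dvg_done / dvg_n
    return gaps
```

Running this separately on each run of the same course is one way to see the instability described above: the gap can be large in one run and near zero in the next.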

So then, for good measure, we took everything that we learned about the interventions and when they worked in Y1 data, and tried to simulate a personalized intervention strategy in year 2 (where we know the real answers). Put another way, 29/

We simulated an artificially intelligent, personalized intervention assignment algorithm that gave each student the best intervention based on their characteristics & their course characteristics. We have years of data, hundreds of courses, hundreds of thousands of students... 30/

And that didn't work. We had brilliant ML folks working with reams of data, rich with features, and couldn't find a way to personalize behavioral supports for students. 31/
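The general shape of that kind of simulation can be sketched in a few lines of Python. This is a deliberately simple stand-in, not the models used in the paper: it assumes each row is `(segment, arm, outcome)`, where `segment` is a hypothetical learner/course grouping, `arm` is the randomly assigned intervention, and `outcome` is 1 for completion and 0 otherwise. It also assumes every arm was assigned with equal probability, so learners whose random arm happens to match the policy's pick can stand in for learners treated under the policy.

```python
from collections import defaultdict

def mean_outcome_table(rows):
    """Average outcome for every (segment, arm) pair."""
    sums = defaultdict(lambda: [0.0, 0])
    for segment, arm, outcome in rows:
        cell = sums[(segment, arm)]
        cell[0] += outcome
        cell[1] += 1
    return {key: total / n for key, (total, n) in sums.items()}

def fit_policy(year1_rows):
    """Pick, per segment, the arm with the best Year 1 average outcome."""
    best = {}
    for (segment, arm), rate in mean_outcome_table(year1_rows).items():
        if segment not in best or rate > best[segment][1]:
            best[segment] = (arm, rate)
    return {segment: arm for segment, (arm, _) in best.items()}

def evaluate_policy(policy, year2_rows):
    """Mean Year 2 outcome among learners whose random arm matches the policy.

    Returns None if no Year 2 learner matches.
    """
    matched = [outcome for segment, arm, outcome in year2_rows
               if policy.get(segment) == arm]
    return sum(matched) / len(matched) if matched else None
```

Comparing `evaluate_policy(...)` against the plain Year 2 control-group mean shows whether the personalized policy would have beaten doing nothing; a policy that looks good in Year 1 but not in Year 2 is the pattern described above.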

So, what are the takeaways? 1) Our interventions help a little. That's OK, they are free! But when we took some pretty big (n=2,000+) studies and ran them across multiple contexts at really big scale (n=250,000), we failed to find the same magnitude of effects. 32/

2) Context matters! Our interventions worked better in some places than others, and we're still not totally sure how or why. In part, that's because we have a huge n for individuals, but still a smallish n (250) for context. 33/

Now, along the way, we didn't really study context. Part of the reason is that research norms encourage large-scale studies to be hands-off, to prove that a study that works in one context can replicate. 34/

And our contexts were extremely homogeneous (they were all edX courses!!), but we still found context variation. We encourage funders to revisit norms that provide the biggest funding for hands-off replication, and instead emphasize contextual examination and adaptation. 35/

So, go read the paper: https://www.pnas.org/content/early/2020/06/11/1921417117. When folks tell you that AI-based personalized learning is right around the corner, send them that. 36/

Big thanks to all the institutions involved, and especially to @whynotyet, @emyeom, @EmmaBrunskill, and @dustintingley, who were a pleasure to work with over the last five years on this mega project. 37/
