I became aware of this paper late last week. It's very concerning, for a number of reasons. I believe that Study 2's results are consistent with a true null effect, and a statistically biased analysis. 1/n https://twitter.com/PsychScience/status/1271494967257677830

I read critiques by @wgervais , @RebeccaSear , @Kane_WMC_Lab , @StatModeling that bring up major concerns with the quality of the data. So then, why the positive results? And how were they so consistent across multiverse models? 2/n

I wanted to immerse myself in the data to see if I could find a reasonable explanation for Study 2's results. (I couldn't figure out how to re-analyze Study 1; credit to @Kane_WMC_Lab for his work there.) 3/n

Science needs adversarial re-analysis. Scientists carry their own biases into the lab, and these can leak into results. "Real" results should be robust to someone analyzing them in good faith, but looking for alt explanations. 4/n

I was ready to agree with the authors' results, !HUGE IF! their data and analyses proved to be valid and unbiased. However, upon exploring their data, I believe their data/analyses suffer from serious COLLIDER BIAS, and are consistent w a true null effect. 5/n

I actually learned abt collider bias from Twitter a couple years ago! It's not well-known in psych stats, but I think it's more often considered in health stats. I like this explainer blog post, though there are many papers on this: http://www.the100.ci/2017/03/14/that-one-weird-third-variable-problem-nobody-ever-mentions-conditioning-on-a-collider/ 6/n

Here's an example from Elwert & Winship (2014): https://www.ssc.wisc.edu/~felwert/causality/wp-content/uploads/2014/07/Elwert-Winship-2014.pdf Say beauty and talent are not correlated irl. A plot of beauty vs. talent among the population is a nice round point cloud. But maybe high beauty OR high talent are sufficient for Hollywood success. 7/n

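Bc beauty and talent are uncorrelated, high-beauty+high-talent people are rare in the population, and low-beauty+low-talent people don't make it to Hollywood at all. So Hollywood actors are more likely to be high-beauty+low-talent or low-beauty+high-talent than high-beauty+high-talent (bc the two are unrelated in the population!). 8/n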

THUS, beauty and talent are spuriously neg correlated in Hollywood actors, because both beauty and talent feed into the selection variable, Hollywood status, and we're only looking at people who got selected. 9/n

This holds if you either subset your analysis on Hollywood actors, or if you include the whole population but include Hollywood status as a covariate. Hollywood status is a "collider" for beauty and talent, and including it in your analysis will bias your results. 10/n
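A minimal Python sketch of the Hollywood example, assuming standard-normal beauty and talent and a made-up "success" rule (either trait above 1 SD). The cutoff, seed, and sample size are illustrative assumptions, not values from Elwert & Winship:

```python
import random
import statistics


def corr(a, b):
    """Pearson correlation of two equal-length sequences."""
    ma, mb = statistics.fmean(a), statistics.fmean(b)
    cov = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    va = sum((x - ma) ** 2 for x in a)
    vb = sum((y - mb) ** 2 for y in b)
    return cov / (va * vb) ** 0.5


rng = random.Random(1)
n = 20_000
# Beauty and talent are drawn independently: no real relationship.
beauty = [rng.gauss(0, 1) for _ in range(n)]
talent = [rng.gauss(0, 1) for _ in range(n)]

# Hypothetical selection rule: high beauty OR high talent is enough for success.
cutoff = 1.0
actors = [(b, t) for b, t in zip(beauty, talent) if b > cutoff or t > cutoff]

r_population = corr(beauty, talent)
r_actors = corr([b for b, _ in actors], [t for _, t in actors])
print(f"population r = {r_population:+.2f}, actors r = {r_actors:+.2f}")
```

Subsetting on the collider (success) manufactures a clearly negative correlation among actors, even though the full population shows essentially none.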

How can we identify possible collider bias, in a world where we don't know for sure what causes what? We look for the following pattern of correlations between variables: 11/n

If all of the following are true of vars X1, X2, and Y, then X2 is a possible COLLIDER between X1 and Y:

X1 is UNCORRELATED with Y

X1 is correlated with X2

Y is correlated with X2 in the same direction as X1 (so both pos or both neg) 12/n
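Why this pattern is a red flag follows from the standard formula for the partial correlation of Y and X1 controlling for X2 (the numbers in the worked example are illustrative, not from the paper):

$$
r_{YX_1\cdot X_2} = \frac{r_{YX_1} - r_{YX_2}\,r_{X_1X_2}}{\sqrt{\left(1 - r_{YX_2}^2\right)\left(1 - r_{X_1X_2}^2\right)}}
$$

If $r_{YX_1} = 0$ and $r_{YX_2}$, $r_{X_1X_2}$ share a sign, the numerator is $-r_{YX_2}\,r_{X_1X_2} < 0$, so "controlling for" X2 produces a negative X1/Y association out of nothing. For example, with $r_{YX_2} = r_{X_1X_2} = 0.5$:

$$
r_{YX_1\cdot X_2} = \frac{0 - (0.5)(0.5)}{\sqrt{(1 - 0.25)(1 - 0.25)}} = \frac{-0.25}{0.75} \approx -0.33
$$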

If X2 is a collider bw X1 and Y, a regression of Y ~ X1 should yield 0 relationship (correctly). A regression of Y ~ X1 + X2 should yield a negative relationship between X1 and Y (INCORRECTLY) 13/n
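A small Python sketch of both regressions, assuming X1 and Y are independent standard normals and X2 = X1 + Y + noise (an illustrative collider, not the paper's data). For this setup the population value of the X1 coefficient in Y ~ X1 + X2 works out to -0.5:

```python
import random

rng = random.Random(2)
n = 20_000
# X1 and Y are independent: the true X1 -> Y effect is zero.
x1 = [rng.gauss(0, 1) for _ in range(n)]
y = [rng.gauss(0, 1) for _ in range(n)]
# X2 is a collider: both X1 and Y feed into it.
x2 = [a + b + rng.gauss(0, 1) for a, b in zip(x1, y)]


def centered(xs):
    m = sum(xs) / len(xs)
    return [x - m for x in xs]


def dot(a, b):
    return sum(p * q for p, q in zip(a, b))


c1, c2, cy = centered(x1), centered(x2), centered(y)

# Y ~ X1: simple regression slope (correctly near 0).
slope_simple = dot(c1, cy) / dot(c1, c1)

# Y ~ X1 + X2: solve the 2x2 normal equations on centered data.
s11, s22, s12 = dot(c1, c1), dot(c2, c2), dot(c1, c2)
s1y, s2y = dot(c1, cy), dot(c2, cy)
det = s11 * s22 - s12 ** 2
b1 = (s22 * s1y - s12 * s2y) / det  # X1 coefficient: spuriously negative
b2 = (s11 * s2y - s12 * s1y) / det  # X2 coefficient

print(f"Y ~ X1:      b1 = {slope_simple:+.2f}")
print(f"Y ~ X1 + X2: b1 = {b1:+.2f}, b2 = {b2:+.2f}")
```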

That collider-red-flag correlation structure? The authors' Study 2 data show it big-time:

religiosity (X1) and homicide rate (Y) are not correlated

natl IQ (X2) is neg correlated w religiosity (X1)

natl IQ (X2) is neg correlated w homicide rate (Y)

14/n

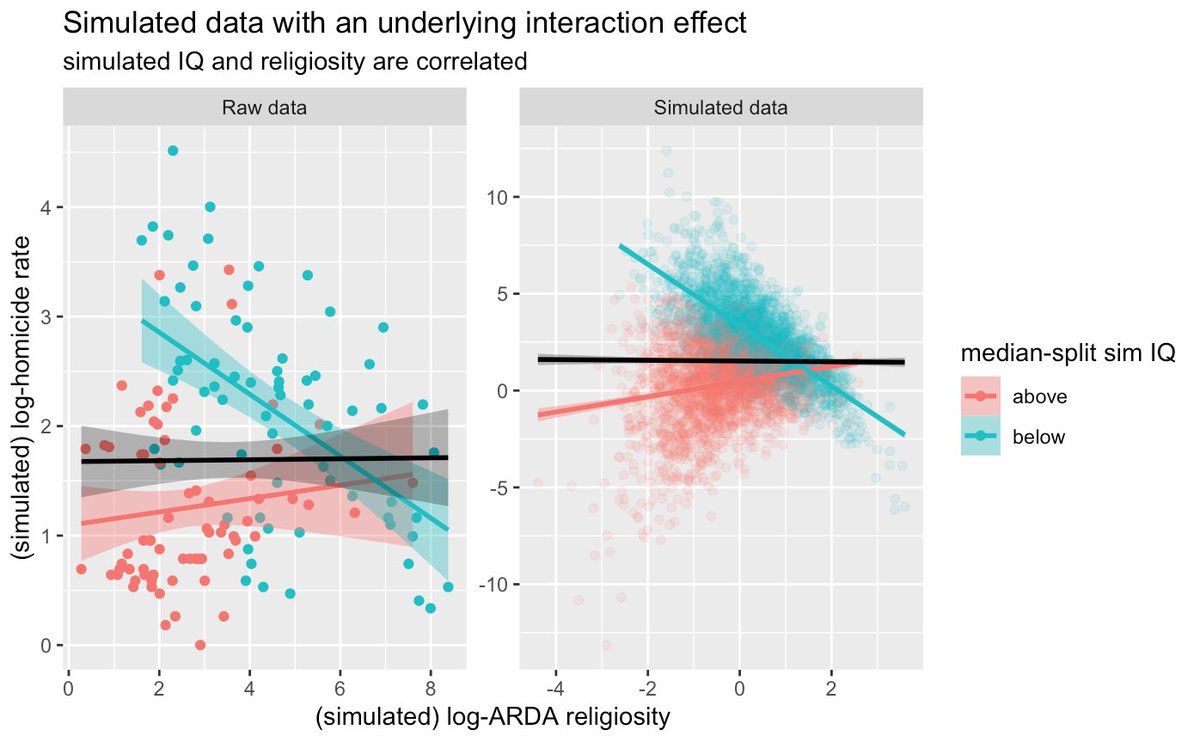
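The red-flag check can be written as a tiny heuristic over three correlations. This is a sketch: `collider_red_flag`, `implied_beta_x1`, and the `null_band` cutoff are hypothetical names and an arbitrary threshold, and the example correlations only mimic the sign pattern above. They are NOT the paper's numbers:

```python
def collider_red_flag(r_x1_y, r_x1_x2, r_y_x2, null_band=0.1):
    """Heuristic: X1-Y near zero, and X2 correlated with both X1 and Y
    in the same direction. null_band is an arbitrary illustrative cutoff."""
    x1_y_null = abs(r_x1_y) < null_band
    both_related = abs(r_x1_x2) >= null_band and abs(r_y_x2) >= null_band
    same_sign = (r_x1_x2 > 0) == (r_y_x2 > 0)
    return x1_y_null and both_related and same_sign


def implied_beta_x1(r_x1_y, r_x1_x2, r_y_x2):
    """Standardized coefficient of X1 in Y ~ X1 + X2, from correlations alone."""
    return (r_x1_y - r_y_x2 * r_x1_x2) / (1 - r_x1_x2 ** 2)


# Hypothetical values with the Study 2 sign pattern:
# religiosity-homicide ~ 0, IQ-religiosity < 0, IQ-homicide < 0.
example = dict(r_x1_y=0.02, r_x1_x2=-0.6, r_y_x2=-0.5)
print(collider_red_flag(**example))
print(implied_beta_x1(**example))
```

Both negative correlations with the collider still push the implied religiosity coefficient negative once IQ is in the model.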

Accordingly, I can reproduce the general structure of their data by simulating data that are mathematically collided. In the model (right side), there is a NULL EFFECT of religiosity on homicide: no main effect, no interaction. No effect. 15/n

Now, you may have seen from the links above that, classically, collided data produce spurious negative correlations that are equal in both groups of the collider variable. That means both spurious negative slopes are parallel, with no interaction. What's up here, then? 17/n
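For reference, here's a Python sketch of that classic baseline, assuming independent normal X1 and Y and a hypothetical binary collider (group = X1 + Y + noise > 0). Within each group the X1 slope comes out negative and the two slopes are roughly parallel, i.e., no interaction:

```python
import random


def slope(xs, ys):
    """Simple OLS slope of ys on xs."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    return sxy / sxx


rng = random.Random(4)
n = 20_000
x1 = [rng.gauss(0, 1) for _ in range(n)]
y = [rng.gauss(0, 1) for _ in range(n)]
# Binary collider: group membership depends on both X1 and Y (plus noise).
group = [a + b + rng.gauss(0, 1) > 0 for a, b in zip(x1, y)]

slopes = {}
for g in (False, True):
    xs = [a for a, k in zip(x1, group) if k == g]
    ys = [b for b, k in zip(y, group) if k == g]
    slopes[g] = slope(xs, ys)

print(f"low group slope:  {slopes[False]:+.2f}")
print(f"high group slope: {slopes[True]:+.2f}")
```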

It turns out the authors' Study 2 data violate several assumptions of linear regression. I simulated the data above to violate these assumptions in the same way, and I reproduce their interaction effect from a true null. What are these violations? 18/n

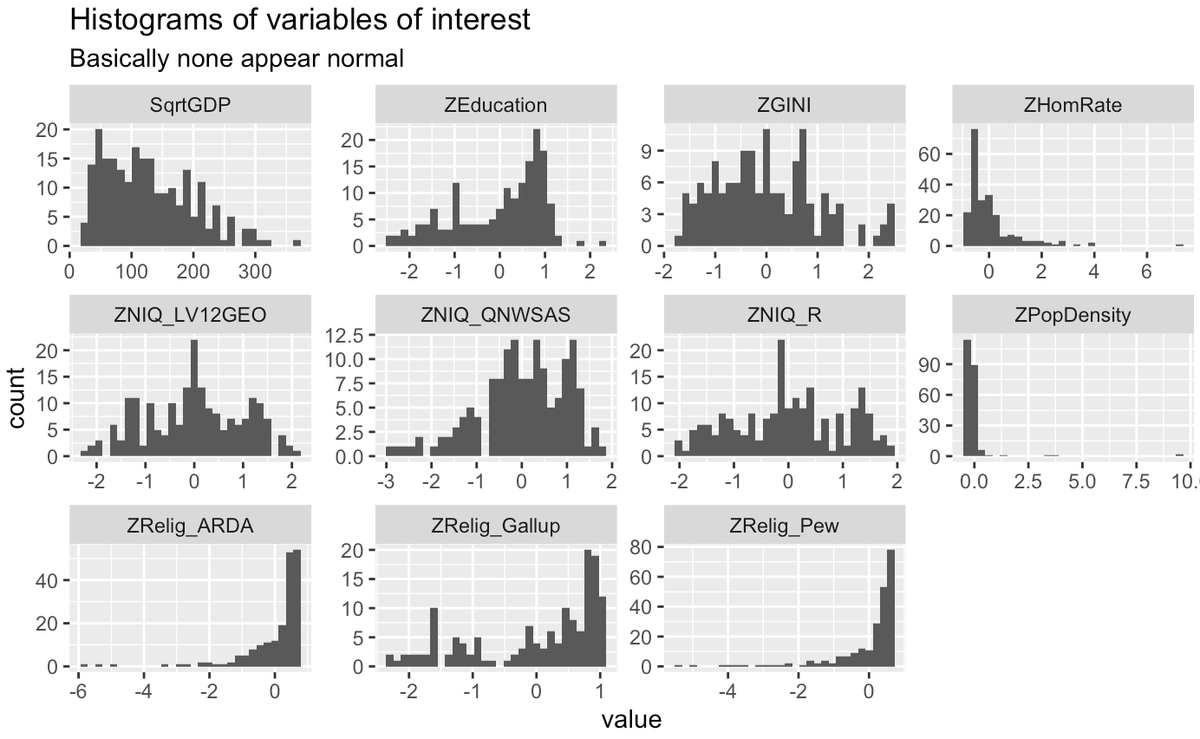

1. Normality: Linear regression expects the errors (residuals) to be normally distributed, and strongly skewed variables tend to produce skewed residuals. Here's the Study 2 data. Note the Big Skew Energy, specifically in homicide rate (ZHomRate) and religiosity (ZRelig, there are 3 measures) 19/n

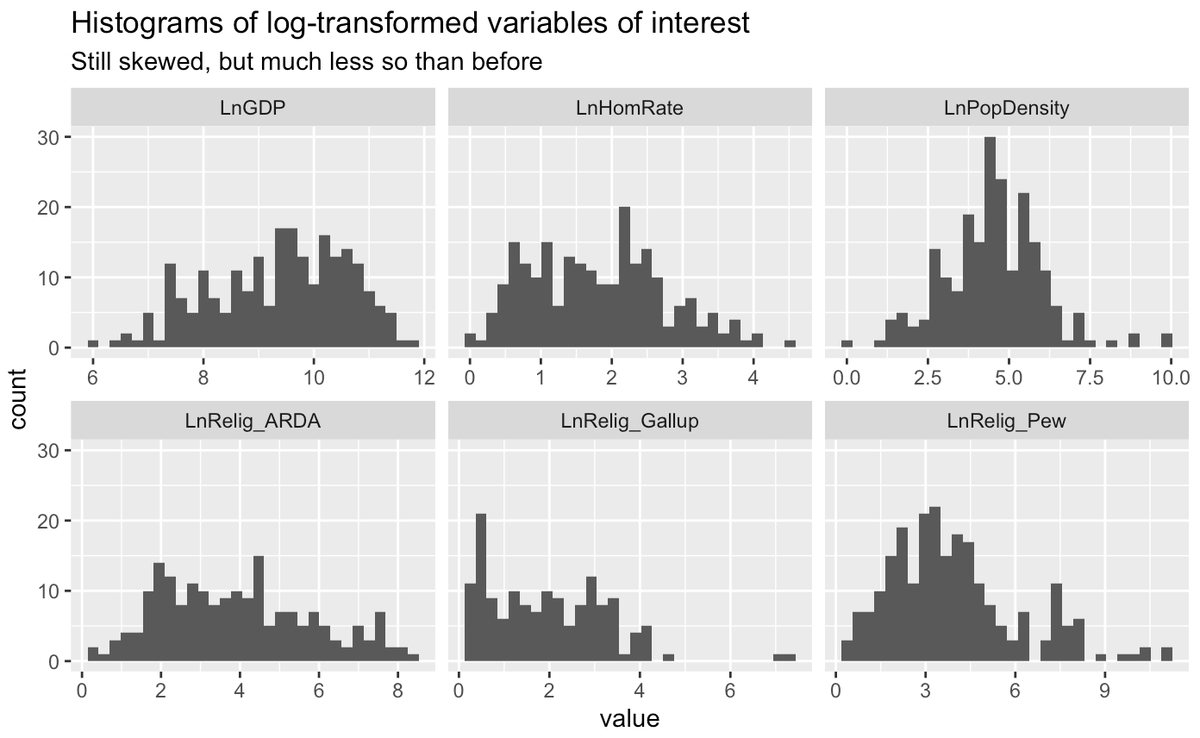

The authors do NOT correct this skew in their analyses. To give the benefit of the doubt, I log-transformed the really skewed data before doing my re-analyses. It helped some, but it's still not perfect. 20/n
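A quick Python sketch of what a log transform does to skew, using a hypothetical gamma-distributed variable (shape 2) as a stand-in for a right-tailed rate. The distribution and sample size are assumptions, not the Study 2 data:

```python
import math
import random


def skewness(xs):
    """Sample skewness (moment estimator): third central moment / SD^3."""
    n = len(xs)
    m = sum(xs) / n
    m2 = sum((x - m) ** 2 for x in xs) / n
    m3 = sum((x - m) ** 3 for x in xs) / n
    return m3 / m2 ** 1.5


rng = random.Random(3)
# Hypothetical right-skewed variable standing in for something like homicide rate.
raw = [rng.gammavariate(2.0, 1.0) for _ in range(10_000)]
# log() needs strictly positive values; real rates containing zeros would need an offset.
logged = [math.log(x) for x in raw]

print(f"skew raw:    {skewness(raw):+.2f}")
print(f"skew logged: {skewness(logged):+.2f}")
```

For this gamma shape the log shrinks the skew's magnitude but overshoots into a mild left skew, one way a transform can "help some" without fully fixing the problem.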

2. Homoscedasticity. Linear regression expects the error variance around the regression line to be equal at all points on the line. Visually, you can ID UNEQUAL error variance by TRIANGULAR scatterplots. Here's every IQ var against every relig var and homicide rate: 21/n

That last graph is kinda crazy I know. All the BLUE panels, where natl IQ is related to religiosity or homicide rate, show a TRIANGLE around the black regression line. Thus, the error around the line is bigger at one end and smaller at the other. 22/n
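A crude numeric version of the "triangle" check, sketched in Python: fit a line, then compare residual spread in the upper vs. lower half of x (about 1 when variance is equal). `spread_ratio` is a hypothetical helper, the simulated data are illustrative, and formal tests such as Breusch-Pagan exist for this:

```python
import random
import statistics


def ols_line(xs, ys):
    """Intercept and slope of the simple OLS line."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    b = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sum((x - mx) ** 2 for x in xs)
    return my - b * mx, b


def spread_ratio(xs, ys):
    """Residual SD in the upper half of x divided by residual SD in the lower half."""
    a, b = ols_line(xs, ys)
    resid = [(x, y - (a + b * x)) for x, y in zip(xs, ys)]
    cut = statistics.median(xs)
    low = [r for x, r in resid if x <= cut]
    high = [r for x, r in resid if x > cut]
    return statistics.pstdev(high) / statistics.pstdev(low)


rng = random.Random(5)
xs = [rng.uniform(0, 4) for _ in range(5_000)]
# Fan-shaped ("triangular") cloud: error SD grows with x.
fan = [-0.5 * x + rng.gauss(0, 0.2 + 0.5 * x) for x in xs]
# Same line, constant error SD.
flat = [-0.5 * x + rng.gauss(0, 1.0) for x in xs]

print(f"fan:  {spread_ratio(xs, fan):.2f}")
print(f"flat: {spread_ratio(xs, flat):.2f}")
```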

3. Orthogonality. Multiple regression, as the authors report, is cleanest when predictor variables are UNCORRELATED with one another; correlated predictors make each coefficient depend on which other variables are in the model. As addressed above, def not the case in the Study 2 data. This doesn't always imply collider bias, but with these data it does. 23/n
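One standard way to quantify how much two correlated predictors overlap is the variance inflation factor. With exactly two predictors it reduces to 1 / (1 - r²). A minimal Python sketch (the helper name `vif_two_predictors` is hypothetical):

```python
def vif_two_predictors(r12):
    """Variance inflation factor when there are exactly two predictors
    correlated at r12: 1 / (1 - r12**2)."""
    return 1.0 / (1.0 - r12 ** 2)


for r in (0.0, 0.3, 0.6, 0.9):
    vif = vif_two_predictors(r)
    # Holding error variance fixed, each coefficient's standard error is
    # sqrt(VIF) times what it would be with uncorrelated predictors.
    print(f"r = {r:.1f}: VIF = {vif:.2f}, SE inflated x{vif ** 0.5:.2f}")
```

Note that VIF only speaks to precision; the collider problem is about bias, which no amount of precision fixes.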

If you combine the data skewness and unbalanced error variance into a mathematical model of collided data, you induce an X1 × X2 interaction on Y. AGAIN, where NO INTERACTION TRULY EXISTS. Here's my R code to produce the collided data. 24/n

Religiosity and homicide rate are simulated with a gamma dist to give them a slight right tail, and the error SD of simulated IQ gives bigger errors for higher religiosity/homicide rates, per the raw data. And the correlation structure is collided. 25/n
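The R code referenced above isn't reproduced in this text, so here's an independent Python sketch of the kind of data-generating process described. The gamma shape, the IQ slopes of -4, and the error SD of 1 + relig + homicide are illustrative assumptions. Religiosity and homicide never influence each other, so any religiosity main effect or religiosity × IQ interaction the final fit reports is spurious by construction:

```python
import random
import statistics


def zscore(xs):
    m, s = statistics.fmean(xs), statistics.pstdev(xs)
    return [(x - m) / s for x in xs]


def corr(a, b):
    za, zb = zscore(a), zscore(b)
    return sum(p * q for p, q in zip(za, zb)) / len(za)


def ols(columns, y):
    """Least squares via the normal equations X'X b = X'y (Gauss-Jordan elimination)."""
    k = len(columns)
    aug = [[sum(p * q for p, q in zip(ci, cj)) for cj in columns]
           + [sum(p * q for p, q in zip(ci, y))] for ci in columns]
    for col in range(k):
        piv = max(range(col, k), key=lambda r: abs(aug[r][col]))
        aug[col], aug[piv] = aug[piv], aug[col]
        for r in range(k):
            if r != col:
                f = aug[r][col] / aug[col][col]
                aug[r] = [a - f * b for a, b in zip(aug[r], aug[col])]
    return [aug[i][k] / aug[i][i] for i in range(k)]


rng = random.Random(7)
n = 10_000
# NULL model: religiosity and homicide are generated independently.
relig = [rng.gammavariate(2.0, 1.0) for _ in range(n)]     # right-skewed
homicide = [rng.gammavariate(2.0, 1.0) for _ in range(n)]  # right-skewed
# "IQ" is the collider: lowered by both, with noise SD growing with both.
iq = [100 - 4 * r - 4 * h + rng.gauss(0, 1 + r + h) for r, h in zip(relig, homicide)]

zr, zh, zi = zscore(relig), zscore(homicide), zscore(iq)
ones = [1.0] * n
# Fit homicide ~ religiosity * IQ on z-scores: intercept, relig, IQ, relig:IQ.
coefs = ols([ones, zr, zi, [a * b for a, b in zip(zr, zi)]], zh)

print(f"r(relig, homicide) = {corr(relig, homicide):+.2f}")
print(f"r(IQ, relig)       = {corr(iq, relig):+.2f}")
print(f"r(IQ, homicide)    = {corr(iq, homicide):+.2f}")
print("intercept, relig, IQ, relig:IQ =", [round(c, 3) for c in coefs])
```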

So, the Study 2 data are consistent with collided data, where NO REAL EFFECT EXISTS. But is there a model where a REAL EFFECT DOES EXIST, that produces the same correlation structure and data spread/interaction pattern? I couldn't find one. 26/n

If you put a real interaction into the mathematical model, you can EITHER match the correlation structure of Study 2, OR you can match the data spread/interaction pattern, but NOT BOTH. 27/n

If I model a real interaction, and set simulated IQ to be correlated with religiosity (per the real data), the simulated data technically show the interaction effect, but with a definite U-shaped relationship bw religiosity and homicide rate that the real data don't have. 28/n

If I model a real interaction, and allow simulated IQ to be UNCORRELATED with religiosity, I can get the interaction effect, but at the cost of not recovering the correlation patterns in the real data. 29/n

Thus, I believe Study 2's results are CONSISTENT with a NULL EFFECT, and INCONSISTENT with a TRUE INTERACTION. Does this mean there IS no effect? I don't know. I can only look for all possible explanations, and go with the one I think fits best. I'll never know for sure! 30/n

I DO think that others' concerns about construct validity of natl IQ feed into this. If natl IQ were really measuring something independent, these bias concerns wouldn't be there. It's more likely that some other latent var drives natl IQ, not "intelligence". 31/n

And finally, @keith_lohse makes a great point about being wary of the conclusions one draws from one's analysis. Sloppy science is bad science, and unethical science is bad science. Even if we disagree on ethics, the results do not convince me, and I hope not you either. 32/32