Good news, everyone, I figured it all out! Yes, all of it: free energy, the entropic brain, mental disorders, postmodernism, rationality, and psychedelics. Now y'all can wait a couple of weeks for me to write a careful post with links. Or, you can strap in for a manic tweetstorm.

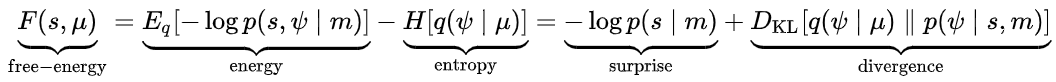

When you go to the wiki page on free energy, you see weird formulas like this. Free energy = surprise + divergence = complexity - accuracy. What in Friston's name does it all mean?

https://en.wikipedia.org/wiki/Free_energy_principle

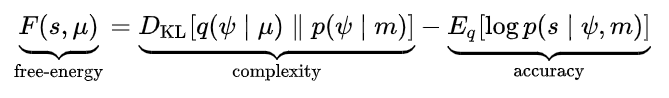

Let's start with a simpler diagram: you have a cell/brain with an internal state "I". It receives sensory inputs "S" from the outside world, which are caused by some hidden state of the world "H". And the world can be affected through actions "A".
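For the formula-inclined, here is a sketch of how the wiki formulas read in this diagram's letters. One assumption that is mine and not the diagram's: q(H) stands for the beliefs about the hidden state "H" that the internal state "I" encodes, and p is the model of how "H" produces "S".

$$
\begin{aligned}
F &= \mathbb{E}_{q(H)}\big[\log q(H) - \log p(S, H)\big] \\
  &= \underbrace{-\log p(S)}_{\text{surprise}} + \underbrace{D_{\mathrm{KL}}\big[q(H)\,\|\,p(H \mid S)\big]}_{\text{divergence}} \\
  &= \underbrace{D_{\mathrm{KL}}\big[q(H)\,\|\,p(H)\big]}_{\text{complexity}} - \underbrace{\mathbb{E}_{q(H)}\big[\log p(S \mid H)\big]}_{\text{accuracy}}
\end{aligned}
$$

Accuracy enters with a minus sign, so a more accurate model means lower free energy, while a more complex one means higher free energy.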

A cell or brain can be generalized to any pattern that wants to persist in a world ruled by the second law of thermodynamics. How can anything resist entropy? By creating a local island of order. To do that, it needs to anticipate inputs "S" and shape them with actions "A".

To persist, it needs to keep anticipating its inputs over a long time, that is, to make "S" match what "I" predicts it will be (written "S|I"). You do it in three ways:

1. Accept whatever "S" comes and update "I".

2. Act on the world to shape "S".

3. Ignore "S" when it deviates from "S|I".

Example: you expect to see red ("S|I" = red) but "S" = green. Option 1: accept that the world is just green, "I" = green always. It's a simple model and it predicts current inputs well.

But, some part of you knows that the world is really complex. That part is your hyperprior given to you by evolution and the genes that shaped your brain. "I"=green is too fragile and bound to run into big prediction errors if "S" deviates from green.

You can come up with a very complex model: the color you see is the result of an endless war in the faerie realms... But a model that's too complex also bodes poorly for future prediction accuracy. There's no way you can keep track of all those faeries and how they affect colors.

So this is the first tradeoff in free energy minimization: model complexity vs. model accuracy. You want the simplest model that still accounts for all the data so far, because it's the one likeliest to predict sensory inputs well in the future.

Option 2 is acting on the world to make "S" equal red. Let's say you can't do that for now (or don't know how, since your model was just ["S|I"=red] and it had no terms for actions and their consequences).

Option 3 is ignoring the input. Just say that it's a reddish kind of green. If your brain does that, you will literally perceive yellow instead of green, since perception is a combination of what you predict and what you see.
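One hedged way to picture "a combination of what you predict and what you see" is a precision-weighted average. This is a toy sketch, not a model of color vision: it assumes Gaussian-style beliefs, treats hue as a plain number in degrees (red = 0, yellow = 60, green = 120, ignoring wraparound), and the function name `perceived_hue` and both precision parameters are made up for illustration.

```python
RED, YELLOW, GREEN = 0.0, 60.0, 120.0  # hue in degrees (illustrative scale)

def perceived_hue(predicted_hue, sensed_hue, prior_precision, sensory_precision):
    """Blend prediction and input, each weighted by how much it is trusted."""
    total = prior_precision + sensory_precision
    return (prior_precision * predicted_hue + sensory_precision * sensed_hue) / total

# Equal trust in the prediction (red) and the input (green): you land on yellow.
print(perceived_hue(RED, GREEN, prior_precision=1.0, sensory_precision=1.0))

# Trust the input much more than the prediction: the percept moves toward green.
print(perceived_hue(RED, GREEN, prior_precision=1.0, sensory_precision=9.0))

# Ignore the input entirely: you keep seeing red.
print(perceived_hue(RED, GREEN, prior_precision=1.0, sensory_precision=0.0))
```

In this sketch, "ignoring the input" is just turning the sensory weight down relative to the prediction's weight.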

But yellow is still not red. This is the 2nd trade-off: updating to a new model means divergence from the model that did well so far, but refusing to update keeps you surprised at seeing the wrong color. It's the same trade-off, just focusing on a point in time.
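To check that the two trade-offs really are the same quantity, here is a minimal numeric sketch. Everything in it is an assumption for illustration: two hidden states ("red world", "green world"), a prior that strongly expects red, a noisy sensor, and a made-up helper `free_energy`. It computes free energy once as complexity minus accuracy and once as surprise plus divergence.

```python
from math import log

def kl(q, p):
    """KL divergence between two discrete distributions (p assumed strictly positive)."""
    return sum(qi * log(qi / pi) for qi, pi in zip(q, p) if qi > 0)

def free_energy(q, prior, likelihood):
    """q and prior are beliefs over hidden states; likelihood[i] = p(observed S | H = i)."""
    complexity = kl(q, prior)                      # how far beliefs moved from the prior
    accuracy = sum(qi * log(li) for qi, li in zip(q, likelihood) if qi > 0)
    evidence = sum(pi * li for pi, li in zip(prior, likelihood))
    posterior = [pi * li / evidence for pi, li in zip(prior, likelihood)]
    surprise = -log(evidence)                      # fixed by the input, whatever you believe
    divergence = kl(q, posterior)                  # gap between beliefs and the exact posterior
    return {
        "complexity": complexity,
        "accuracy": accuracy,
        "surprise": surprise,
        "divergence": divergence,
        "F": complexity - accuracy,
    }

prior = [0.9, 0.1]             # hidden states: [red world, green world]
likelihood_green = [0.1, 0.9]  # chance of seeing green in each world

beliefs = {
    "stick with red": [0.9, 0.1],
    "jump to green": [0.01, 0.99],
    "exact posterior": [0.5, 0.5],
}
for name, q in beliefs.items():
    terms = free_energy(q, prior, likelihood_green)
    print(name, round(terms["F"], 3), round(terms["surprise"] + terms["divergence"], 3))
```

With these made-up numbers, both columns agree for every belief, and the lowest free energy goes to the belief that neither clings to red nor jumps all the way to green.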

Suddenly you see "S" = red. If you updated to "I" = green, you're now way off. If you stuck with "I" = red you're good, but you suffered before. If you went with the faeries, you're doing a bit better but paying a complexity penalty.

The best is to acquire a simple and true model that predicts both the past and the present. You're watching a rotating apple! This feels like insight, and it feels great. It's the feeling that you'll predict "S" very well in the future and minimize free energy!

How do you decide in general whether to update your internal model in response to surprising inputs or ignore the inputs? That depends on your belief on how predictable the world is in general, your hyperprior.

Predictable world = it's worthwhile to explore and learn and acquire better models since they'll pay off in great predictions in the future. It's worth trying to do things because you'll learn how your actions shape the world in predictable ways.

Unpredictable world = there's no point in exploring, you'll never learn anything and keep being surprised by a chaotic world. It's better to stick to non-complex models and not try things.

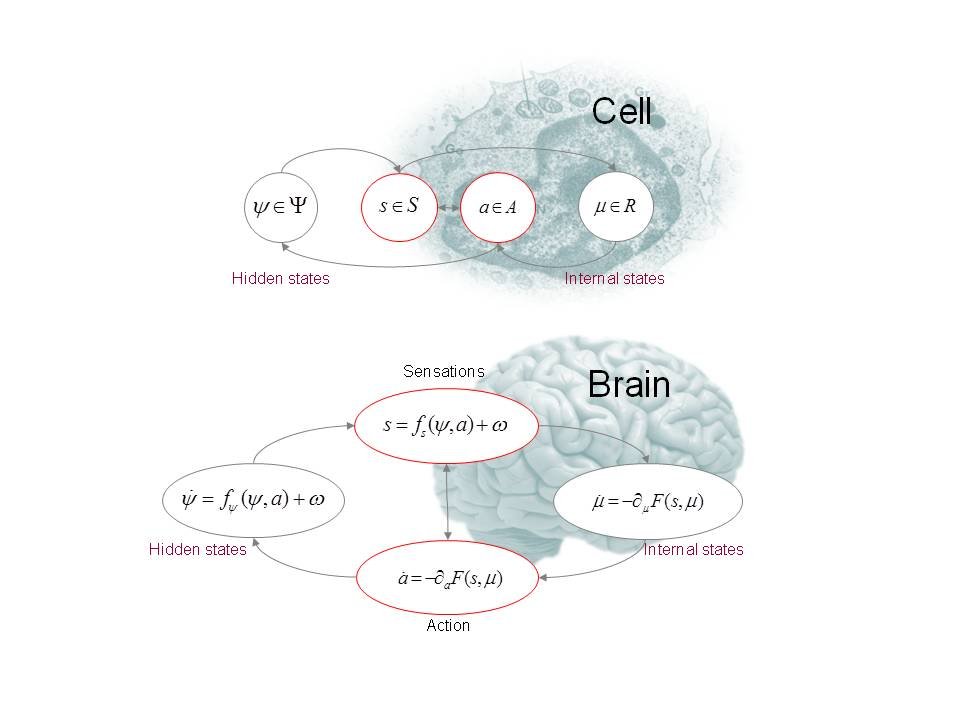

Then there's the feeling of how good your current models are relative to the best that is possible in the world you're in. Whether you know all there is to know or whether you know very little. We have two axes, time for a 2x2 (that Friston came up with): https://slatestarcodex.com/2018/03/08/ssc-journal-club-friston-on-computational-mood/

Unpredictable world + current model is good = depression. You know that the world is hopeless and gray (a mix of all possible colors), there's no point in updating. You see colors washed out, move slowly, refuse to try things, definitely don't try to explore and learn things.

Predictable world + good model = mania. Feeling of power, every action will have its intended consequence. Not a strong desire to learn and explore but to exploit - the world is your oyster and is all figured out. Not a lot of updating on contradictory evidence.

Unpredictable + bad model = anxiety. You don't have it figured out, and feel like you never will. You seek out new information but always fear that it will be bad and not useful for learning and making good decisions. External locus of control, your actions aren't meaningful.

And finally the missing mood: predictable world + current model bad = CURIOSITY. Strong desire to learn and to explore. It's worth updating and building complex models, you know it will pay off handsomely in the future. Don't act purposefully to shape the world yet, only learn.
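The whole 2x2 fits in a lookup table. This is only a compact restatement of the four cases above; the names `MOODS` and `mood` are made up for illustration, and real moods are obviously not booleans.

```python
# Keys are (world_is_predictable, current_model_is_good).
MOODS = {
    (True, True): "mania",
    (True, False): "curiosity",
    (False, True): "depression",
    (False, False): "anxiety",
}

def mood(world_is_predictable: bool, current_model_is_good: bool) -> str:
    """Return the quadrant label for the two beliefs described in the 2x2."""
    return MOODS[(world_is_predictable, current_model_is_good)]

print(mood(world_is_predictable=True, current_model_is_good=False))
```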

On a society level, top right mood = mania = modernism. An overreaching ideology that says that everything is figured out and can be controlled and shaped top down. The Vatican, planned cities on grids, standard curricula with standardized tests, rigid roles, etc.

A rejection of that is postmodernism, which feels kind of like anxiety. Truth is an illusion, no one knows anything, it's all power plays in a chaotic world that everyone can interpret as they wish.

What is rationality? It teaches that the world is understandable, but that it's complex and takes a lot of hard work. It's about replacing simple models (politics = green tribe bad) with complex ones (politics = game theory + economics + myths + tribalism + identity +...).

It's about overcoming confirmation bias (hallmark of mania and the top right) and helplessness (anxiety and the bottom left), and actually trying to figure things out. The first virtue of rationality is... CURIOSITY.

Aight, let's talk evolution of brains. A worm has simple models of the world and few actions: temperature/sugar/salt, crawl forward/crawl back. A hedgehog's world is more complex. A human's is more complex still - it has a lot of other humans in it.

We all live in the same *physical* world, but a human needs a more complex *representation* than a hedgehog, which in turn needs a more complex one than a worm. And it needs to be flexible, so we can learn complex models and adapt. Enter the neocortex. Also, enter the entropic brain. https://www.frontiersin.org/articles/10.3389/fnhum.2014.00020/full

You can measure brain entropy from the outside with EEG: how predictable is its activity from one minute to the next. Entropy is very low in anesthesia, low in deep sleep, high in waking consciousness, even higher on psilocybin (!) We'll get back to this.
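To make "how predictable is its activity" concrete, here is a toy sketch. It is not the measure used in the linked paper: it assumes the signal has already been reduced to a short list of discrete symbols, and it scores predictability as the first-order conditional entropy of the next symbol given the current one. The function name and both example sequences are invented.

```python
from collections import Counter
from math import log2

def conditional_entropy(symbols):
    """Entropy (in bits) of the next symbol given the current one."""
    pairs = Counter(zip(symbols, symbols[1:]))  # counts of (current, next) transitions
    currents = Counter(symbols[:-1])            # how often each symbol has a successor
    total = len(symbols) - 1
    h = 0.0
    for (current, _next), n in pairs.items():
        p_pair = n / total
        p_next_given_current = n / currents[current]
        h -= p_pair * log2(p_next_given_current)
    return h

rigid = [0, 1, 0, 1, 0, 1, 0, 1, 0]  # the next state always follows from the current one
loose = [0, 0, 1, 1, 0, 0, 1, 1, 0]  # the current state alone leaves the next one open

print(conditional_entropy(rigid))
print(conditional_entropy(loose))
```

In this sketch the rigid sequence scores lower than the loose one, which is the direction the text describes: more locked-in activity, less entropy.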

It's also low in other mammals, higher in primates, higher in adult humans, highest in babies. Baby brains are super flexible, don't have rigid patterns, and update and learn super fast in reaction to new sensory inputs. But humans can't stay flexible and fast-updating forever.

So as we mature we develop a brain structure that suppresses entropy and locks brain patterns in place: the default mode network (DMN). DMN is highly activated when you make assertions about yourself (ego) and in depressed people. DMN inhibits updating your models of the world.

What's "ego" or "self"? It's a very useful model you learn early on. If you think about a pen floating up, it doesn't, but if you think about your hand going up, it does. So you model the world as having a particular set of things that you're in direct control of: your self.

So Friston (of the free energy hypothesis) and Carhart-Harris (of the entropic brain hypothesis) think that psychedelics can help with most mental health issues, which are really disorders of brain rigidity, by suppressing the DMN and letting you update: http://pharmrev.aspetjournals.org/content/71/3/316?fbclid=IwAR36UzFla5Lfx7-4LTr6R8N0XdUSOnbg3gnRPXn806cPKO7Zsas2EsJJhDs

Depression - stuck on feeling hopeless and worthless. Addiction - stuck in a loop of craving and indulgence. Trauma/PTSD - stuck with a defense mechanism to a world that has since become safe. Autism, schizophrenia, they all fit.

Going back to our 2x2, psychedelics throw you hard left. A bad trip is pure anxiety. But a good trip is pure curiosity: you feel like you're learning and updating and by the end of the trip you're hopefully ending up with a more adaptive model.

On a society level, psychedelics are the enemy of modernism, of rigid control structures and totalitarian ideologies. In a society indoctrinated into these ideas by 12-16 years of modernist education, it's both scary and exciting to unlearn that doctrine.

And finally, rationality is also about curiosity, about resisting your rigid biases and learning new things. So should all Rationalists take acid and read the Sequences or watch a Feynman talk or practice Rationalist self-improvement techniques? I'm not saying they shouldn't...

Acknowledgements and inspirations: Karl Friston, @RCarhartHarris, @fluffycyborg, @slatestarcodex, @ESYudkowsky, @Aella_Girl, @Meaningness, @jameswjesso, my wife, and the friend who gave me mushrooms.

Stay curious, my friends.

Oh, I forgot to thank @Conaw! A week ago I switched all my notes and thoughts on these subjects to @RoamResearch. Did the interconnected link system of Roam help me make all these concept connections in my brain? Who knows, brains work in mysterious ways :)
