AI researchers now claim they can infer emotions from how you walk: happy, angry, sad, or neutral. I don’t think this “works” in any literal sense, but let’s talk a bit about what it means to use body language as evidence that someone is aggressive or threatening. https://venturebeat.com/2019/07/01/ai-classifies-peoples-emotions-from-the-way-they-walk/

Last week the @ACLU released a report on AI and surveillance, with a whole section on using AI to infer emotions. But this isn’t really about inferring emotions; it’s about inferring *intentions*: trying to figure out what you’re going to do before you do it. https://www.aclu.org/sites/default/files/field_document/061819-robot_surveillance.pdf

If we accept that AI can “prove” aggression, could that become a legal defense for use of force? The researchers claim that “automatic emotion recognition” could be useful to police. How? By using AI to infer someone’s emotions or intentions and, it follows, to act before that person does.

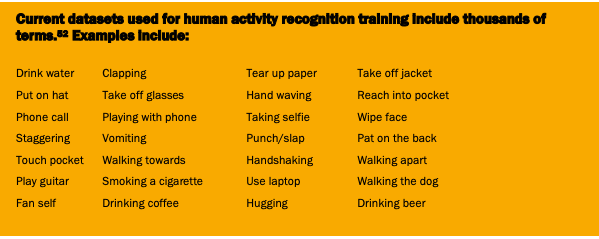

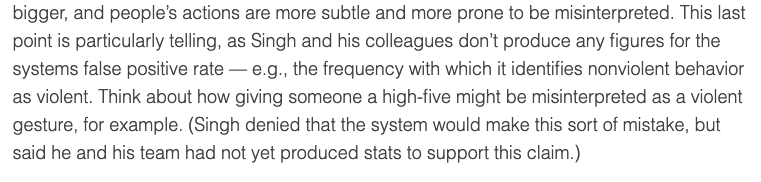

Researchers have made this claim before. A researcher at Cambridge claimed he had trained drones to spot violent behavior in crowds from body language, recognizing poses like punching, kicking, and stabbing. Potentially life-saving, if true. https://www.theverge.com/2018/6/6/17433482/ai-automated-surveillance-drones-spot-violent-behavior-crowds

How often do the drones misidentify a nonviolent person as a threat? Who knows! The researchers didn’t report a false positive rate. Imagine police deciding whether to disperse protesters based on these kinds of “predictions.” Imagine what they could justify.

It’s important to note that this is a preprint, not a finished project. *But* once research hits the web, it’s there forever. Just last month, @adamhrv found that datasets from Duke research had ended up in the hands of Chinese surveillance firms. https://www.theatlantic.com/technology/archive/2019/06/universities-record-students-campuses-research/592537/
